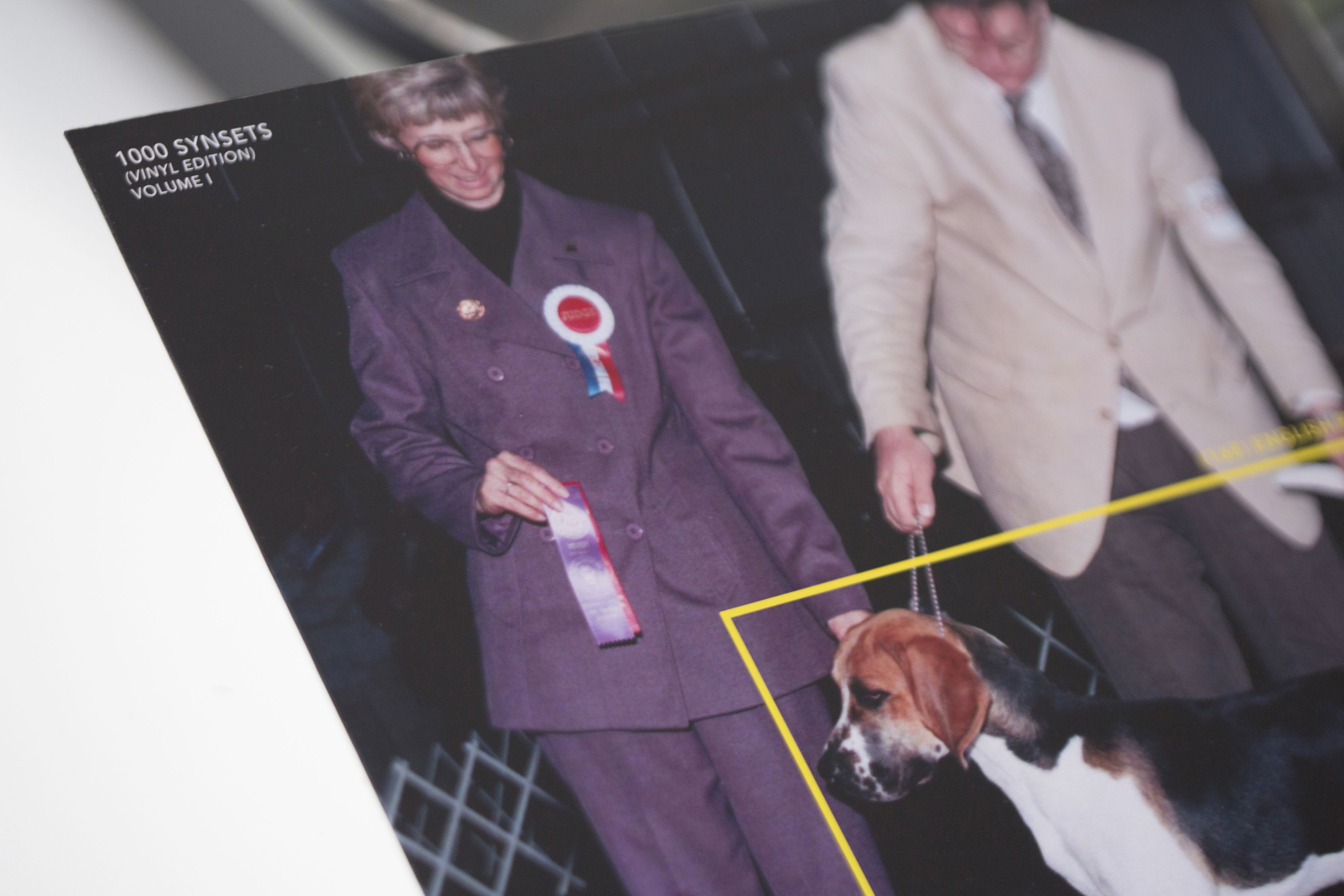

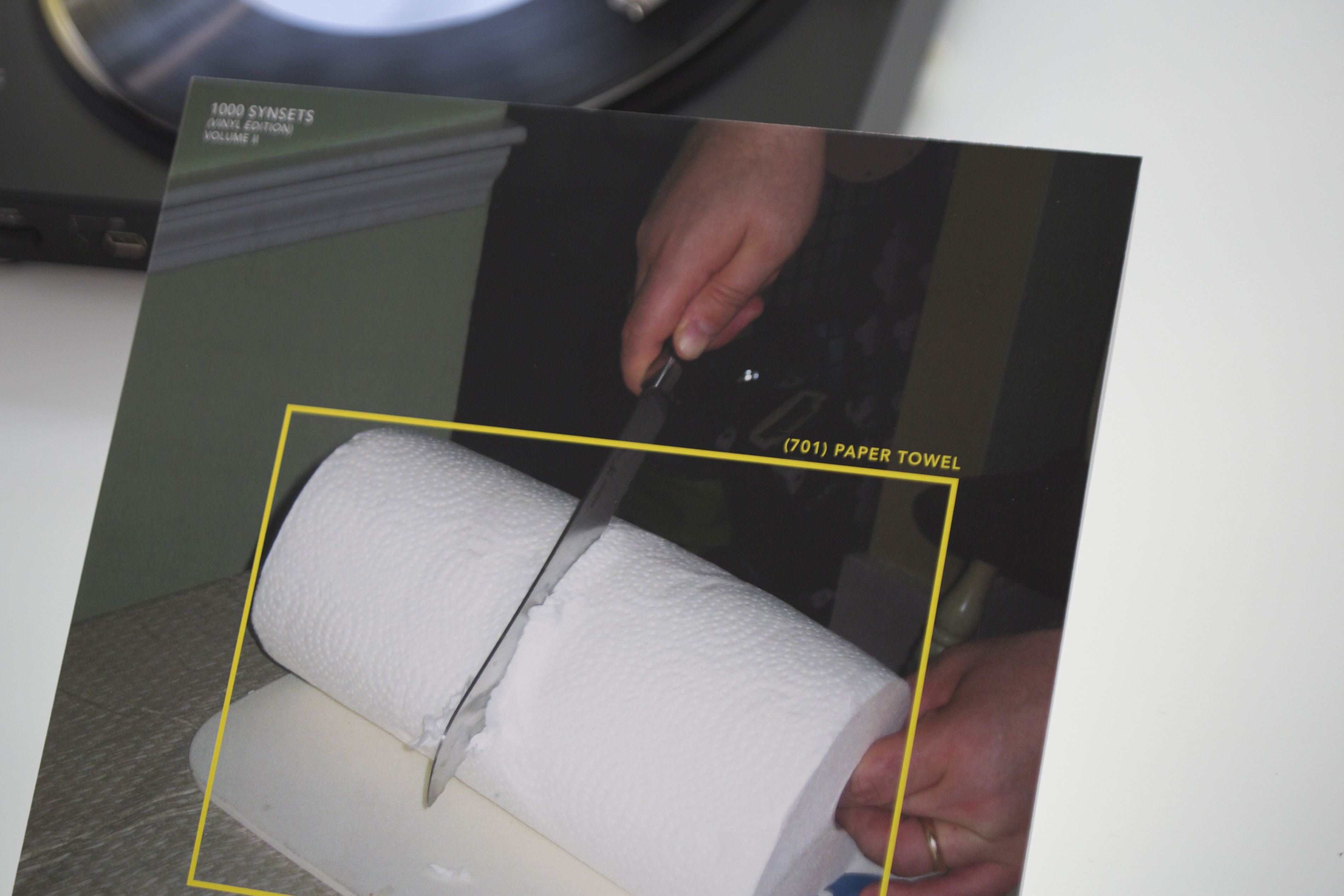

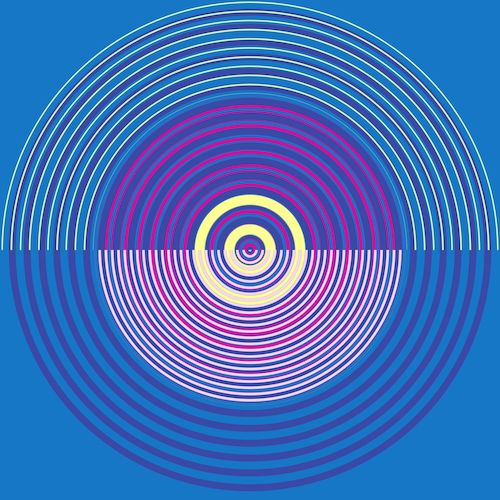

1000 Synsets (Vinyl Edition)

Javier Lloret Pardo

2019

Initiated in 1985, WordNet is a hierarchical taxonomy that describes the world. It was inspired by theories of human semantic memory developed in the late 1960s. Nouns, verbs, adjectives and adverbs are grouped into synonym sets, also known as “synsets.” Each expresses a different concept. ImageNet is an image dataset based on the WordNet 3.0 noun hierarchy. Each synset was populated with thousands of images.

From 2010 until 2017, the ImageNet Large Scale Visual Recognition Challenge, or ILSVRC, was a key benchmark in object category classification and localization for images, having a major impact on software for photography, image searches and image recognition. In the first year of the ILSVRC Object Localization Challenge, the 1000 synsets were selected randomly from the ones used by ImageNet. In the following years there were some changes and manual filtering applied, but since 2012 the selection of synsets has remained the same.

1000 Synsets (Vinyl Edition) contains these 1000 object categories recorded at the high sound quality that this analog format, prized by audiophiles and collectors, allows. This work highlights the impact of the datasets used to train artificial intelligence models that run on the systems and devices that we use on a daily basis. It invites us to reflect on them, and listen to them carefully. Each copy of the vinyl record displays a different synset and picture used by researchers participating in the ILSVRC to train their models.

Artist Statement

In my artistic practice I explore transitions between visibility and invisibility across mediums.

In recent years, my interest in transitions of visibility led me to conduct research on a series of related topics that became strongly connected with my work. These include out-of-frame video narratives, digital steganography, tactics of military deception, and lately, Deep Learning systems and training datasets.

Biography

Javier Lloret is a Spanish/French artist and researcher based in Amsterdam. He holds degrees in Fine Arts (Gerrit Rietveld Academy, Amsterdam), Lens-Based Media (Piet Zwart Institute, Rotterdam), Interface Cultures (University of Art and Design of Linz, Austria) and Engineering (Pompeu Fabra University, Barcelona).

His work has been exhibited in Ars Electronica Festival (Austria), TENT (Rotterdam), ART Lima, Enter 5 Biennale (Czech Republic), Madatac (Spain), Santralistambul (Turkey), and in the AI Art Gallery from the NeurIPS Workshop on Machine Learning for Creativity and Design 2020, among others.

He has been a core tutor at Willem de Kooning Academy (Rotterdam) and at Piet Zwart Institute (Rotterdam), and guest lecturer at the Guangzhou Academy of Fine Arts (Guangzhou) and Ontario College of Art and Design (Toronto).

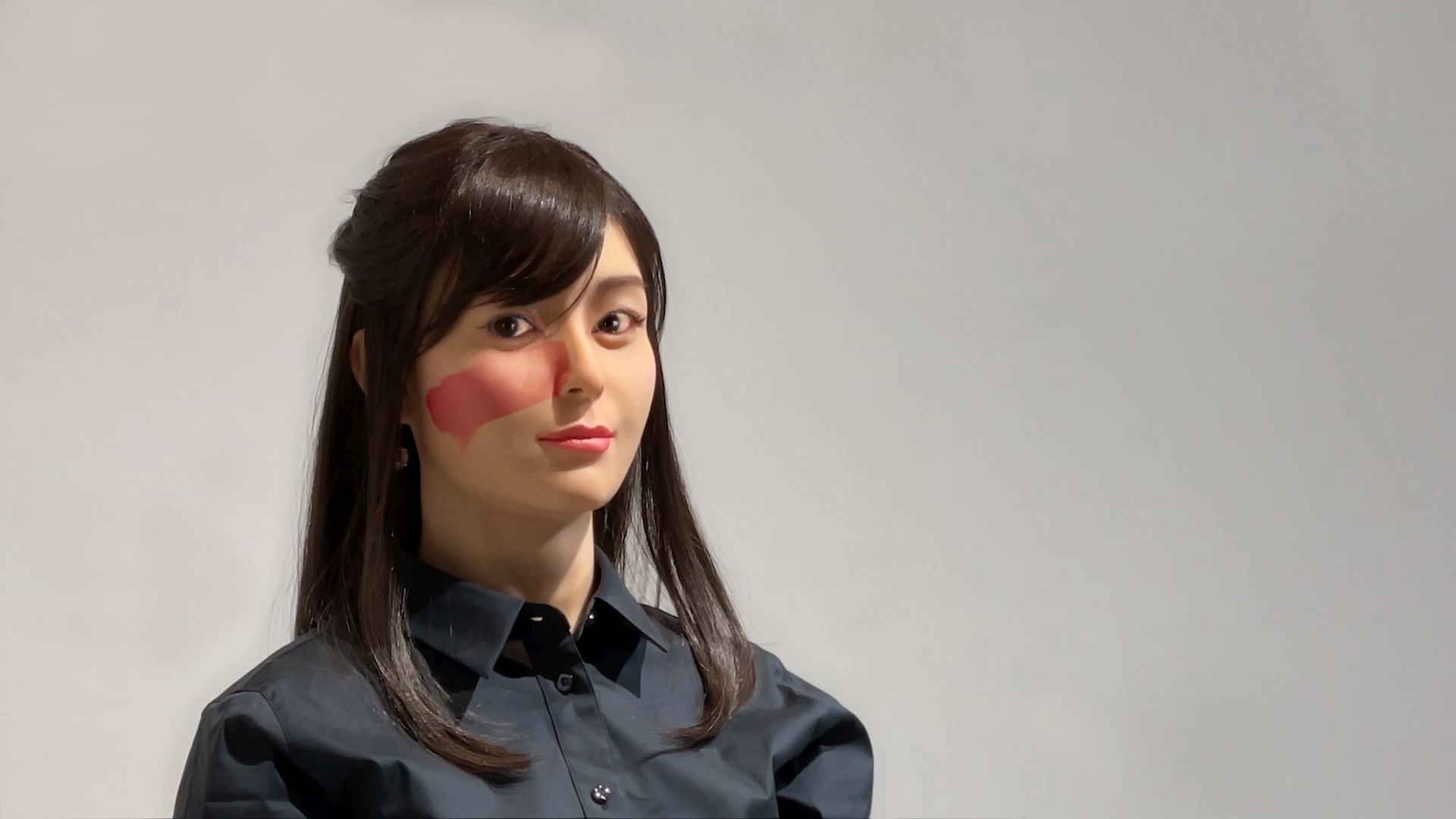

Actroid Series II

Elena Knox

2020-21

Actroid Series II is a group of stills, composite photographs, video portraits and interactions that foreground the potential uses of humanoid robots for AI monitoring and surveillance.

Focusing primarily on the Japan-designed Otonaroid, a model of female-appearing android meant for reception and simple conversation purposes, the works concern how we render restrictions more comfortable by giving social systems a human face.

In making many of these works, Knox invited acquaintances in Japan to sit as “eye models.” Most often, the face ascribed to newly available technologies is a young, pleasant, female face. So, Knox has given the robots “the male gaze”: her eye models are male. Male eyes look out from the artificial female face, in images of patriarchal surveillance.

The series continues Knox’s pioneering embodied critique of the aesthetic evolution of the service gynoid (e.g. Actroid Series I). It focuses on the social future of fembots, drilling down into disquietude about companionship and loneliness (The Masters), surveillance (The Host, ご協力お願いします; Your Cooperation is Appreciated; The Monitor), exploitation (Existence Precedes Essence), domestic familiarity (Unproblematic Situations in Daily Communication), and emotional manipulation (Mirror Stage, Figure Study).

This series is a collaboration with artist Lindsay Webb. The featured robots are created by Ishiguro Lab, Osaka, Japan.

Artist Statement

My work stages presence, persona, gender and spirit (存在感・心・性・建前) in techno-science and communications media. I amplify human impulses to totemism, idolatry, and fetishism, by which we attempt to commune with parahuman phenomena, and to push back against our ultimate loneliness in the galaxy.

Biography

Elena Knox is a media/performance artist and scholar. Her artworks center on enactments of gender, presence and persona in techno-science and communications media, and her writing appears in literary and academic journals. Knox attained her PhD at UNSW Australia in Art and Design with research on gynoid robots. She has been a researcher in Japan since 2016 at Waseda University’s Department of Intermedia Art and Science, Faculty of Fundamental Science and Engineering, Tokyo.

In 2019, Knox participated in the exhibitions “Future and the Arts” at Mori Art Museum, Tokyo, “Post Life,” China tour, at the Beijing Media Art Biennale, and “Lux Aeterna,” at the Asia Culture Center, Gwangju, Korea, amongst others. In 2020 she presented at Yokohama Triennale and Bangkok Art Biennale. In 2021 she will show new work in the Echigo-Tsumari Art Triennial, Japan.

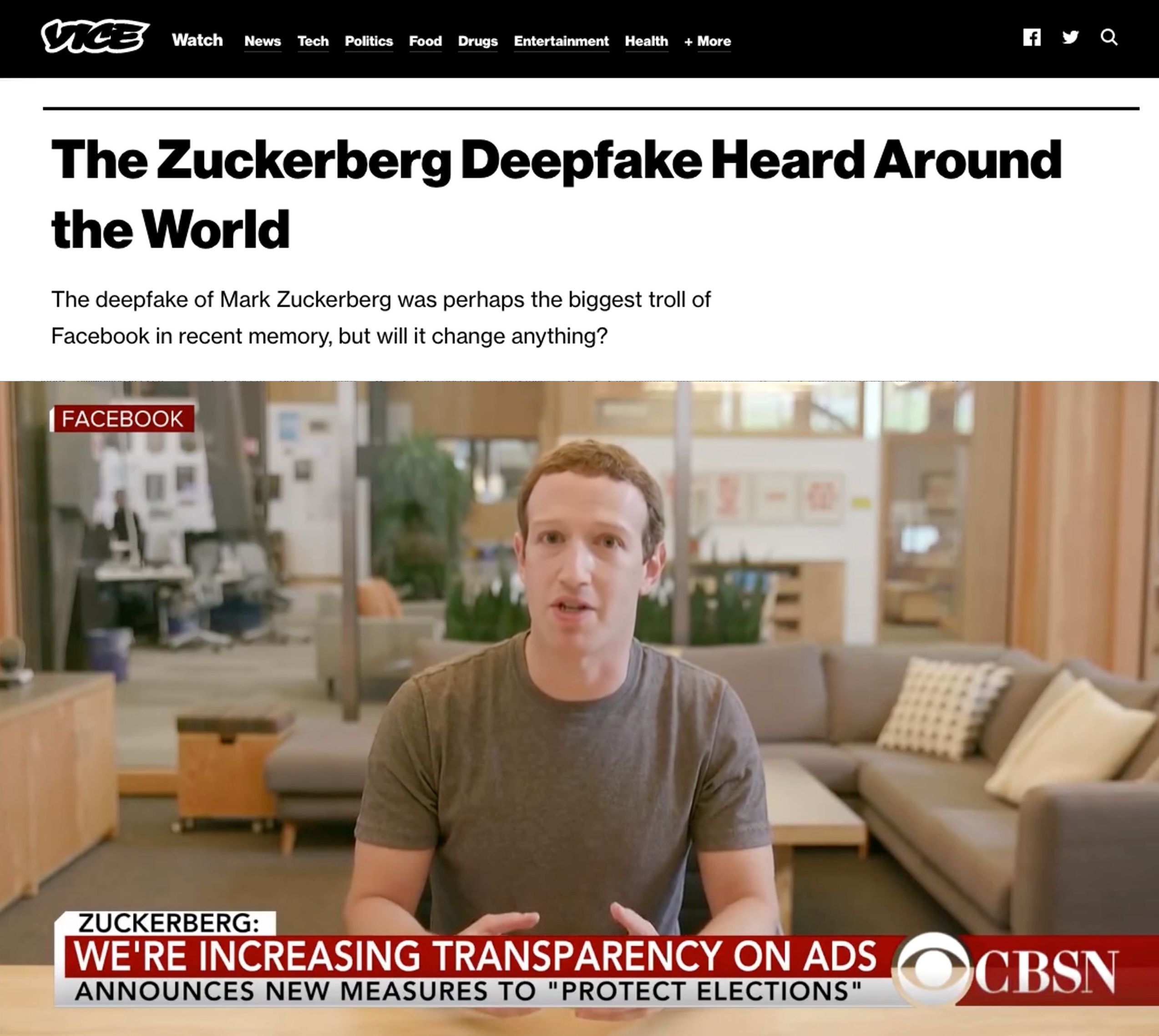

Big Dada: Public Faces

Daniel Howe & Bill Posters

2020

The Big Dada series was first released in conjunction with the Spectre installation in June 2019, and included Mark Zuckerberg, Kim Kardashian, Morgan Freeman and Donald Trump. It quickly went viral and led to global press coverage and confused responses from Facebook and Instagram regarding their policies on synthetic media and computational propaganda. The Public Faces iteration was created in 2020 and added new character studies including Marcel Duchamp, Marina Abramović and Freddie Mercury.

Artist Statement

A visual narrative comprised of ten AI-synthesised deep fake monologues featuring celebrity influencers from the past and present.

Biography

Daniel Howe (https://rednoise.org/daniel) is an American artist and educator living in Hong Kong. His practice focuses on the writing of computer algorithms as a means to examine contemporary life. Exploring issues such as privacy, surveillance, disinformation and representation, his work spans a range of media, including multimedia installations, artist books, sound recordings and software interventions.

Bill Posters (http://billposters.ch/) is an artist-researcher, author and activist interested in art as research and critical practice. His work interrogates persuasion architectures and power relations in public space and online. He works collaboratively across the arts and sciences on conceptual, synthetic, net art and installation-based projects.

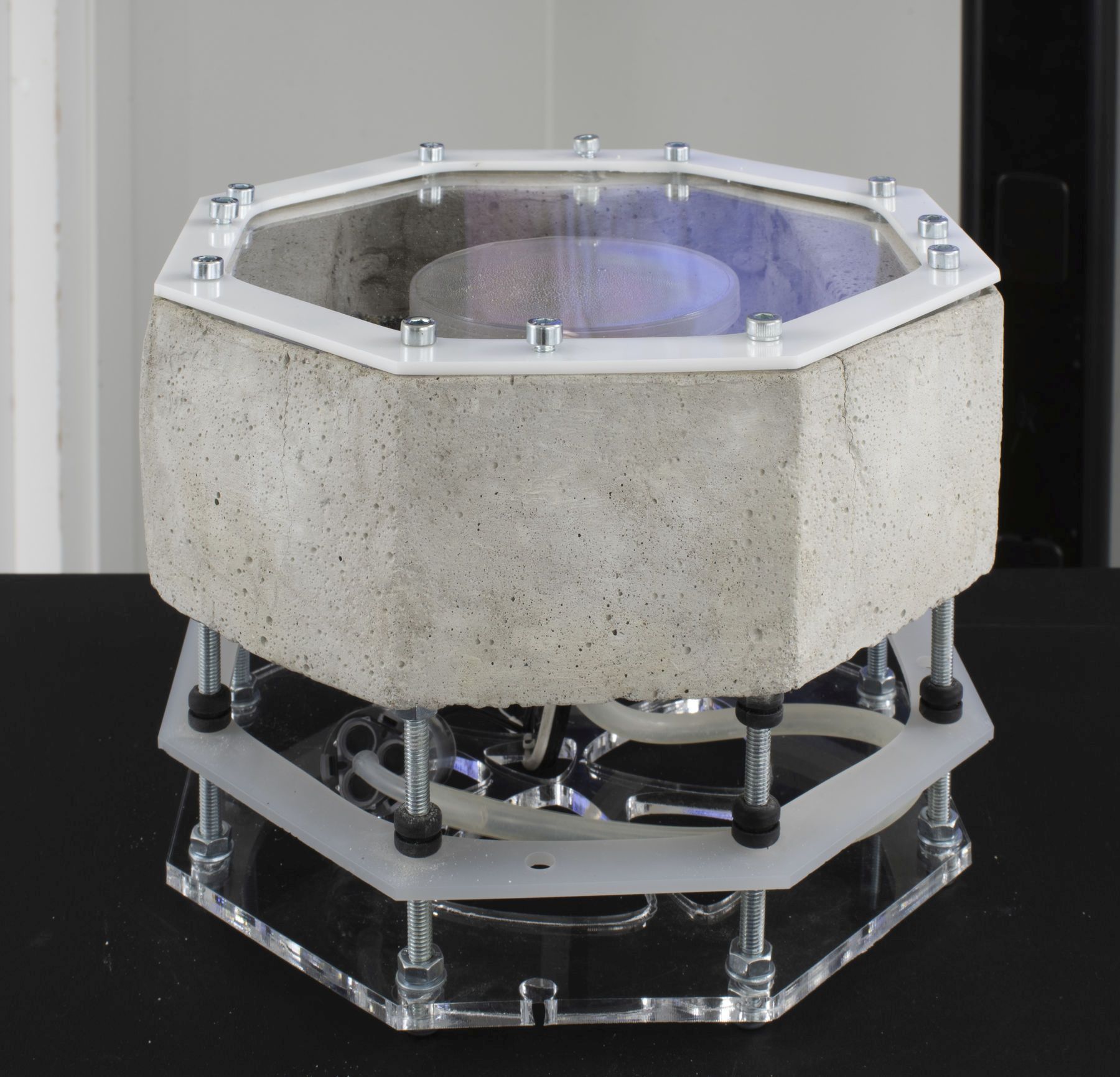

bug

Ryo Ikeshiro

2021

bug is a new sound installation which demonstrates the potential of audio technologies in locating insect-like noises and navigating through spatial audio field recordings using sound event recognition (SER) and directional audio. The work serves as a commentary on the increasing development of related audio technologies with uses in entertainment and advertising, as well as in surveillance, law enforcement and the military.

Spatial audio is the sonic equivalent of 360-video. Usually employing the Ambisonics format, it is capable of capturing audio from all directions. In bug, Ambisonics field recordings from Hong Kong are scanned by a machine learning audio recognition algorithm, and the location in the 3D auditory scene with audio characteristics which are most similar to insect sounds is identified. It is then made audible by parametric speakers emitting modulated ultrasound with a laser pointing in the same direction. The emitted ultrasound is highly directional, creating a “beam” of inaudible sound waves more similar to light than conventional audio. As the waves collide with the interior of the installation space and are demodulated, audible sound emanates from the point on the wall, ceiling or floor at which they are reflected.
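The scanning step described above can be illustrated with a minimal sketch. It assumes a first-order Ambisonics scene (channels W, X, Y, Z without extra scaling on W), steers a virtual cardioid microphone over a coarse grid of directions, and keeps the direction with the highest score. The function names are hypothetical, and signal energy is only a stand-in for the score: the installation uses a machine learning sound event recognizer, which is not reproduced here.

```python
import math
from typing import Callable, List, Tuple

BFormat = Tuple[List[float], List[float], List[float], List[float]]


def unit_vector(az_deg: float, el_deg: float) -> Tuple[float, float, float]:
    """Cartesian unit vector for an azimuth/elevation pair given in degrees."""
    az, el = math.radians(az_deg), math.radians(el_deg)
    return (math.cos(az) * math.cos(el), math.sin(az) * math.cos(el), math.sin(el))


def encode_first_order(signal: List[float], az_deg: float, el_deg: float) -> BFormat:
    """Place a mono signal in a first-order scene (assumed convention: W = s)."""
    ux, uy, uz = unit_vector(az_deg, el_deg)
    return (list(signal),
            [s * ux for s in signal],
            [s * uy for s in signal],
            [s * uz for s in signal])


def steer_cardioid(scene: BFormat, az_deg: float, el_deg: float) -> List[float]:
    """Virtual cardioid microphone pointed at the given direction."""
    w, x, y, z = scene
    ux, uy, uz = unit_vector(az_deg, el_deg)
    return [0.5 * (wi + xi * ux + yi * uy + zi * uz)
            for wi, xi, yi, zi in zip(w, x, y, z)]


def energy(signal: List[float]) -> float:
    """Stand-in score; a real system would return an insect-likeness score."""
    return sum(s * s for s in signal)


def scan(scene: BFormat,
         score: Callable[[List[float]], float] = energy,
         step_deg: int = 30) -> Tuple[int, int]:
    """Return the (azimuth, elevation) on the grid with the highest score."""
    best_score, best_dir = -math.inf, (0, 0)
    for el in range(-60, 61, step_deg):
        for az in range(0, 360, step_deg):
            value = score(steer_cardioid(scene, az, el))
            if value > best_score:
                best_score, best_dir = value, (az, el)
    return best_dir


# A test tone placed at azimuth 90 degrees, elevation 30 degrees.
tone = [math.sin(2 * math.pi * k / 16) for k in range(64)]
scene = encode_first_order(tone, 90, 30)
direction = scan(scene)
```

In an installation like the one described, the direction returned by such a scan would presumably drive both the laser pointer and the parametric speaker.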

The work demonstrates an original method for reproducing 3D spatial audio through an alternative application of directional audio technology along with SER. In combination with the use of parametric speakers emitting modulated ultrasound in a process reminiscent of echolocation used in sonar, SER is used in determining the segment of audio to be projected. Thus, the gaze (and ear) of the viewer, which determine the audio and video displayed in standard VR and spatial audio, are replaced by an algorithm.

It also proposes a novel approach to automated crowd surveillance using machine learning and SER, through bugging and metaphorically locating cockroaches and socially undesirable elements.

Artist Statement

Ryo Ikeshiro works with audio and time-based media to explore possibilities of sound. Techniques of sonification—the communication of information and data in non-speech audio—are harnessed in an artistic context, with algorithms and processes presented as sound to investigate computational creativity and the relationship between the audio and the visual. Comparable processes to sonification are also used, such as ideophones in East Asian languages—words which evoke silent phenomena through sound. In addition, the manifestation through sound and technology of issues of identity and Otherness is explored. His output includes installations and live performances in a variety of formats including immersive environments using multi-channel projections and audio, 360-video and Ambisonics, field recordings, interactive works and generative works.

Biography

Ryo Ikeshiro is an artist, musician and researcher interested in the artistic potential of computation and code as well as their cultural and political dimension. He was part of the Asia Culture Center’s inaugural exhibition in Gwangju, South Korea, and his TeleText art pages have been broadcast on German, Austrian and Swiss national TV. He is a contributor to Sound Art: Sound as a medium of art, a ZKM Karlsruhe/MIT publication, and his articles have been published in the journal Organised Sound.

Chikyuchi

Vincent Ruijters and RAY LC

2021

Chikyuchi, from the Japanese for Earth, are digital pets for the state of our environment. The health of the Chikyuchi is in sync with the current state of environmental decline. Chikyuchi also talks about itself by using machine learning on Twitter text to mimic the way humans talk about the environment online.

The two Chikyuchi species are Amazonchi (based on the Amazon rainforest) and Hyouzanchi (based on the Arctic icebergs). One species will be exhibited in Tokyo whilst the other is to be simultaneously exhibited in Hong Kong. What both places share are densely urbanized areas far away from the regions that the Chikyuchis represent.

Visitors at both locations can see each other through a live feed projected next to the Chikyuchis, thereby enabling them to see each other's reactions and interact with each other.

Chikyuchi has both a critical and a speculative layer. The work criticizes the way we have been brought up in an environment that promotes building affective relationships with inanimate consumer products like Tamagotchi, whilst failing to promote empathic bonds with nature.

This has left us with a strong affective relationship with consumerism and pop culture and an apathetic relation to nature. In the midst of the Climate Crisis, we confront, too little too late, a constant stream of fruitless disaster and panic news. This leads to an apathy of action to adequately handle the Climate Crisis. In our case, it is not anthropomorphism embedded in religion, but embedded in a language that our generation grew up with and understands: consumer and pop culture. Thus, consumer and pop culture can become a gameful vehicle to reconnect people with nature instead of disconnecting them from it. In this project we will use play, namely mimesis, as a means for audiences to make that care-taking connection.

Artist Statement

Climate change and deforestation are changing our planet, and our methods for dealing with them rely on facts and explicit persuasion that do nothing to influence this generation. Chikyuchi attempts to bridge this gap using an artistic intervention. It uses digital technology of the past reimagined for the purpose of caring for our planet. Taking the metaphor of the Tamagotchi device, Chikyuchi combines the physicality of wood with machine-learning GPT-2-based text generation that reflects the implicit effects of behavioral nudging and intrinsic motivation. The cuteness and interactivity of the device, with Twitter-based text added, produce implicit methods to affect human actions.

Biography

Vincent Ruijters, PhD. Born in 1988, the Netherlands, and based in Tokyo, Ruijters is an artist concerned with emotion and human relations in the context of contemporaneity. Ruijters contemplates the relation of the contemporary human towards nature. Ruijters obtained a PhD in Intermedia Art at Tokyo University of the Arts. Selected solo exhibitions: “Breathing IN/EX-terior” (Komagome SOKO gallery, Tokyo); Selected group exhibitions: “Radical Observers” (Akibatamabi gallery, 3331 Arts Chiyoda, Tokyo). Curation: “To defeat the purpose: guerilla tactics in Latin American art” (Aoyama Meguro Gallery, Tokyo). Awards: Japanese Government Scholarship (MEXT).

RAY LC, in his practice, internalizes knowledge of neuroscience research for building bonds between human communities and between humans and machines. Residencies: BankArt, 1_Wall_Tokyo, LMCC, NYSCI, Saari, Kyoto DesignLab, Elektron Tallinn. Exhibitions: Kiyoshi Saito, Macy Gallery, Java Studios, Elektra Montreal, ArtLab Lahore, Ars Electronica, NeON, New Museum, CICA Museum, NYC Short Documentary Film Festival, Burning Man. Awards: Japan JSPS, National Science Foundation, NIH.

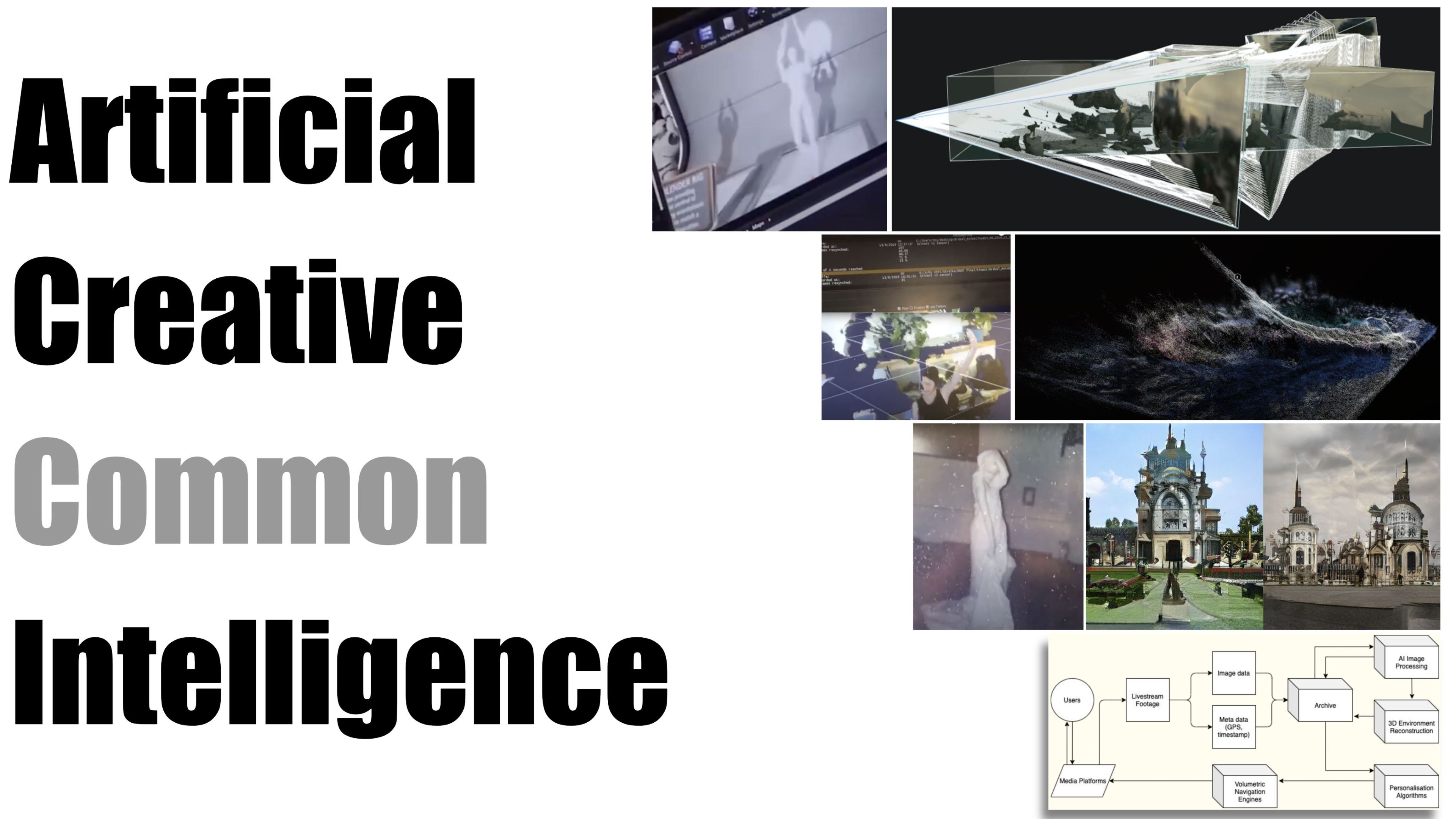

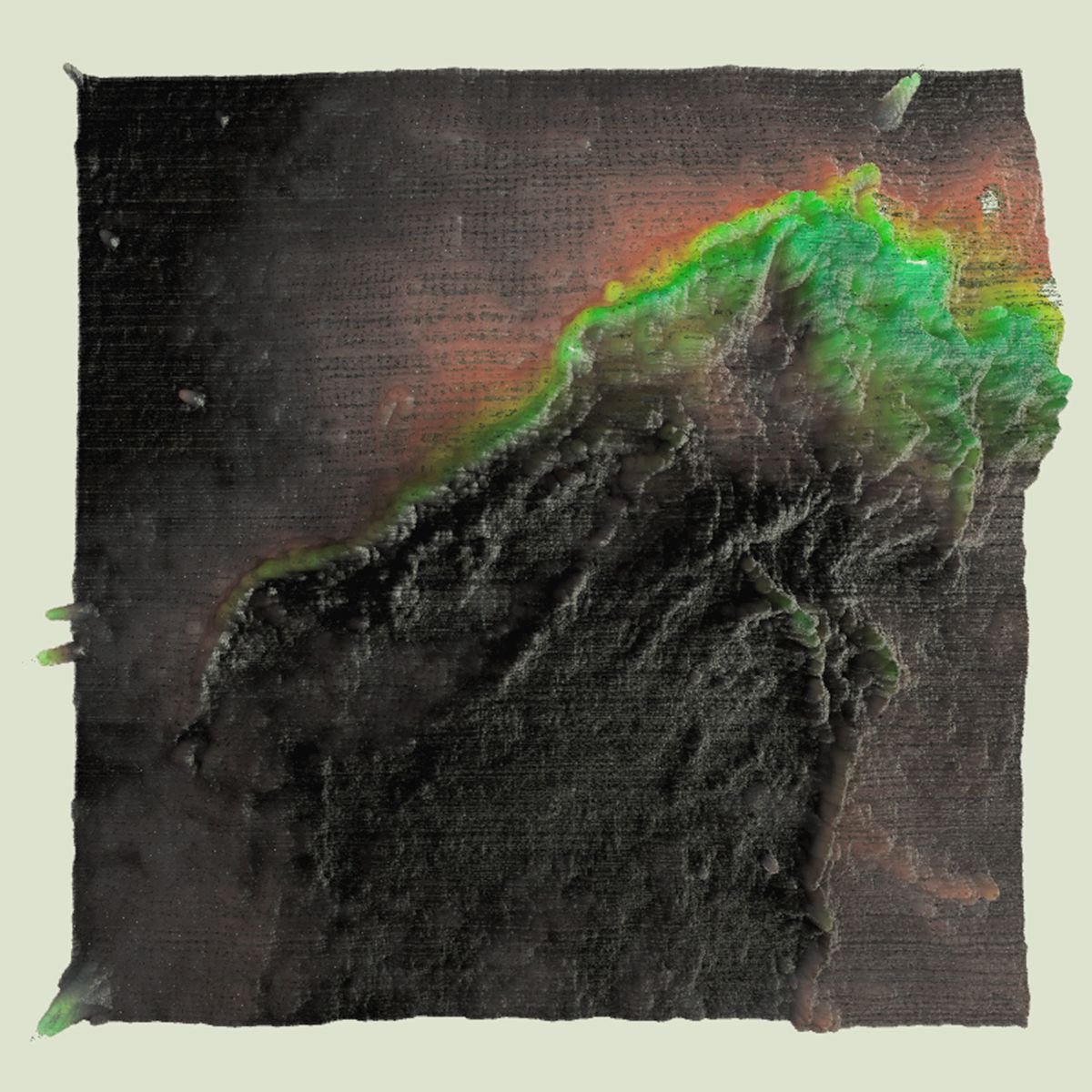

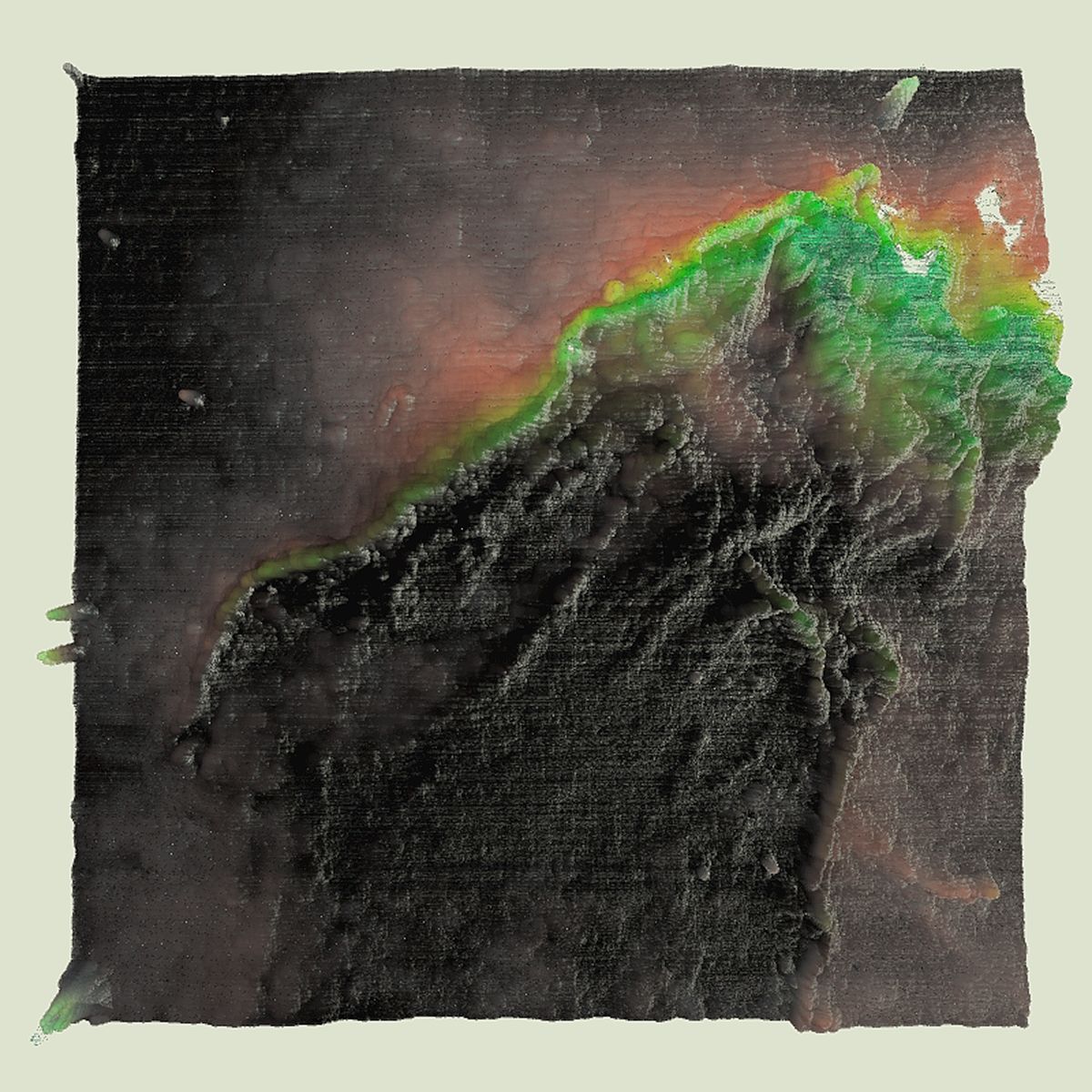

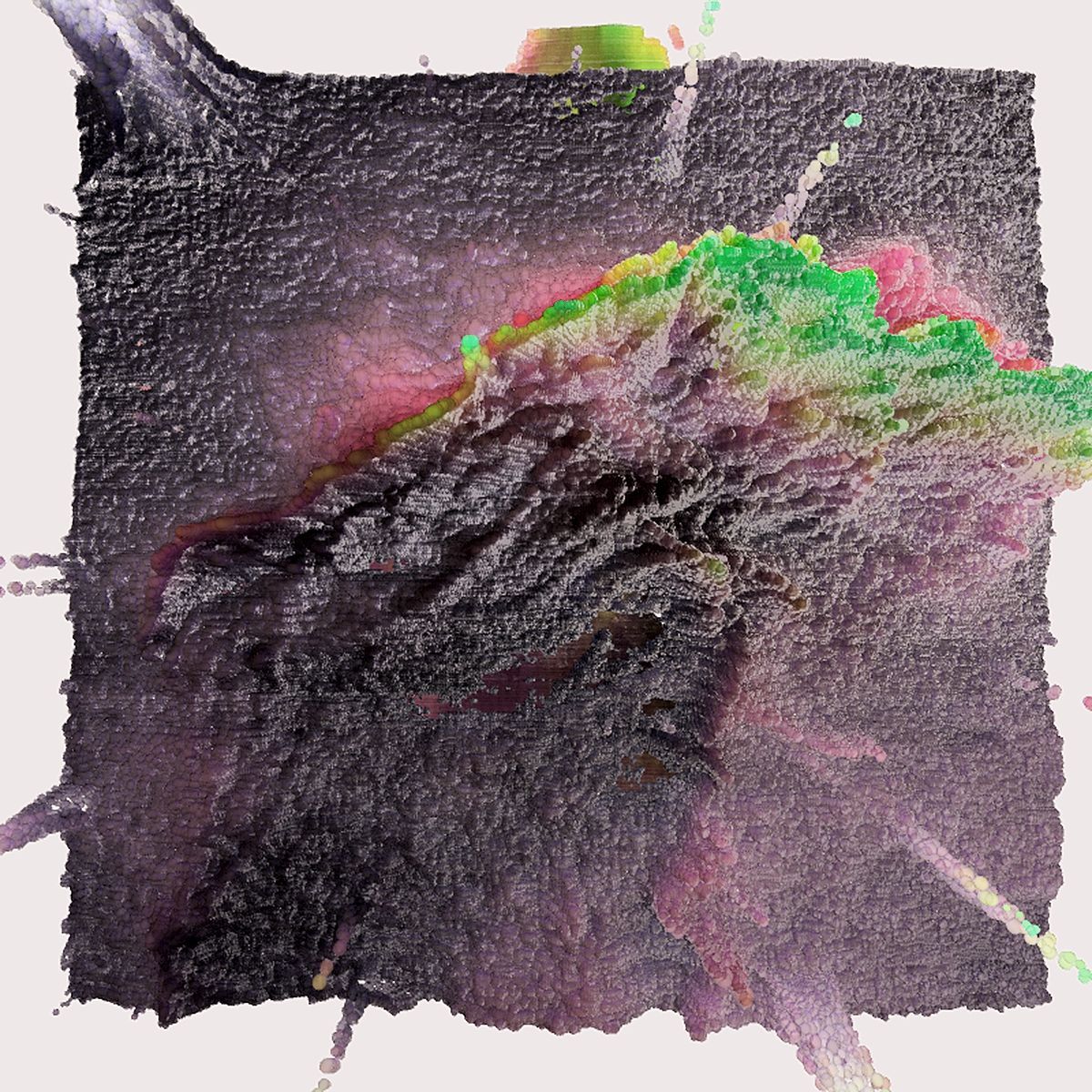

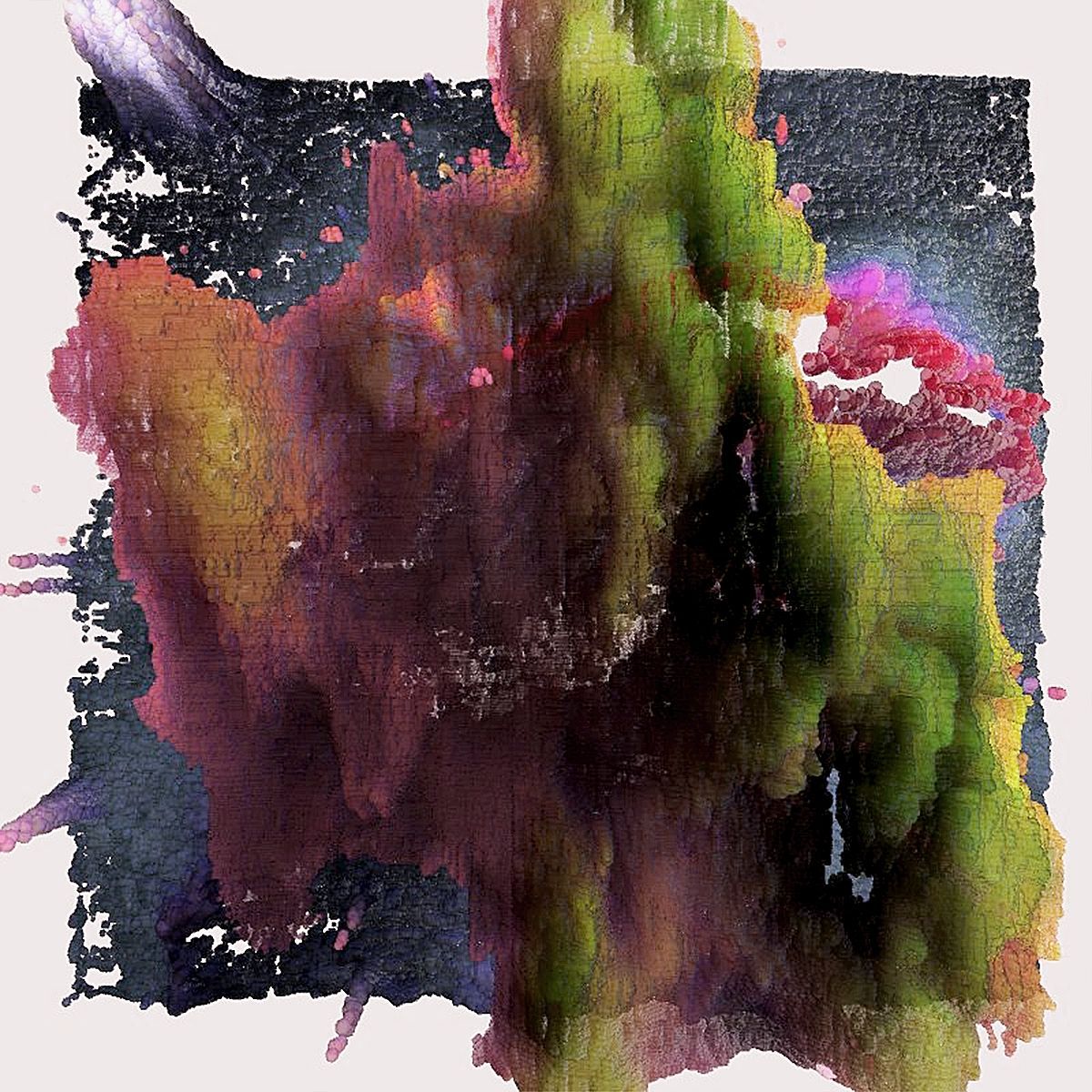

Current

Provides Ng, Eli Joteva, Ya Nzi and Artem Konevskikh

2019-20

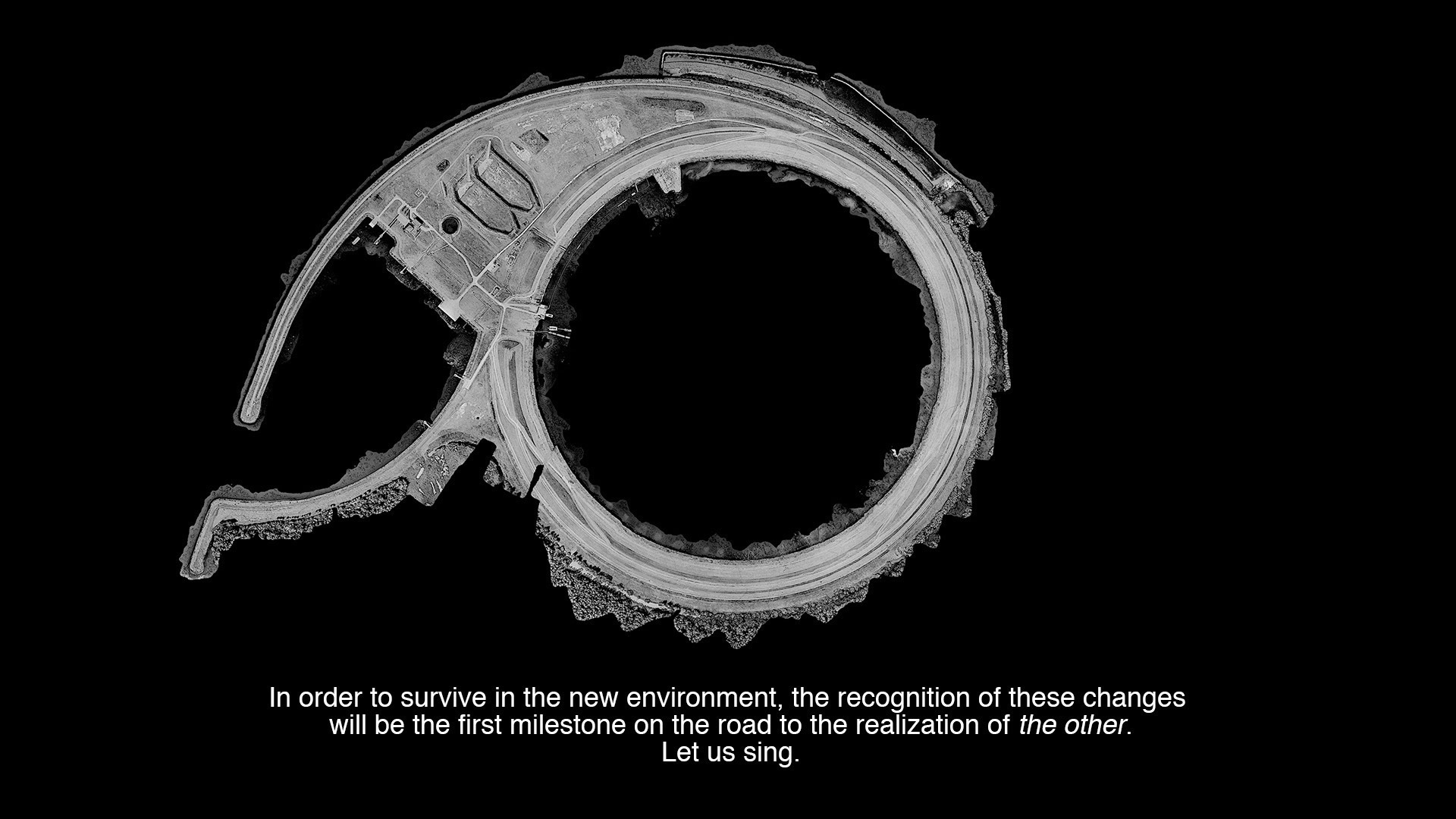

Current is a speculation on the future of broadcast cinema. It emerges from the intersection of contemporary trends in live streaming culture, volumetric cinema, AI deep fakes and personalized narratives. The film, Current, is an experiential example of what this cinema might look and feel like within a few years based on the convergence of these trends. AI increasingly molds the clay of the cinematic image, optimizing its vocabulary to project information in a more dynamic space, embedding data in visuals, and directing a new way of seeing: from planar to global, flat to volumetric, personal to planetary.

In the contemporary contestations of algorithmically recommended content, the screen time of scrolling between livestreams has become a form of new cinema. Current experiments with various AI image processing technologies and volumetric environment reconstruction techniques to depict a future where every past account has been archived into an endless stream. History, from the Latin “historia,” means the art of narrating past accounts as stories. What will be the future of our urban environment if every single event is archived in real time to such accuracy that there is no room for his-story? This implies an economy of values, which has potential in multiple streams beyond social media, as the content deep learns from itself.

Along these lines, Current seeks to configure a new aesthetic vocabulary of cinematology, expanding the spectrum of aesthetic semblance and intelligence, questioning truth and identity in contemporary urban phenomena. Current experimented with a range of digital technologies that are readily available to any individual (e.g. livestream data, machine learning, 3D environment reconstruction, ubiquitous computing, pointclouds and lidar scanning). Alongside the volumetric film, it developed a production pipeline using distributed technologies, which provide a means for individuals to reconstruct, navigate, and understand event landscapes that are often hidden from us, such as violence in protests, changes in Nordic animal behaviors, and the handling of trash.

Artist Statement

With the emergence of planetary computation, data analytics, and algorithmic governance, the Current Team seeks to bridge communication between digital technology, its folk ontology, and their impacts on urbanism. The team employs visual-driven analytics to investigate contemporaneity and speculate on our future. They consider visual aesthetics not as supplementary to happenings, but possessing intrinsic cultural values that are uncertainly measurable with existing institutional metrics.

The Current Team works with creatives worldwide. They seek understanding across cultural and disciplinary landscapes to knit a global network of connections. The team feels the urgency to amalgamate distributed social and technological capacities into an operable soft infrastructure that complements our physical architectures. Along these lines, the team serves to realize designs that may help to aggregate the efforts of the many, and delineate how this might transform our proximate futures.

Biography

Provides Ng, Eli Joteva, Ya Nzi, and Artem Konevskikh form the Current Team, an interdisciplinary and intercultural collective, encompassing architects, researchers, artists, CG engineers, data analysts, and AI programmers from China, UK, Bulgaria, USA, and Russia. Apart from its four core members, the Current Team is also constituted from its broader network of creative commons and collaborators from all around the world (https://www.current.cam/evolutions), where virtual space is what has enabled their cross-territorial collaborations and constantly reminds them of the importance of treasuring the affective values which arise from physical interactions. Thus, the team focuses on the “work” as much as the “working”: the processes by which they can create, design, produce, research, and learn together across disciplinary and cultural boundaries. The Current Team and its works are not set apart from the rest of the world, but rather are embedded within it, setting a virtual mediated ground that enables such aggregation of efforts.

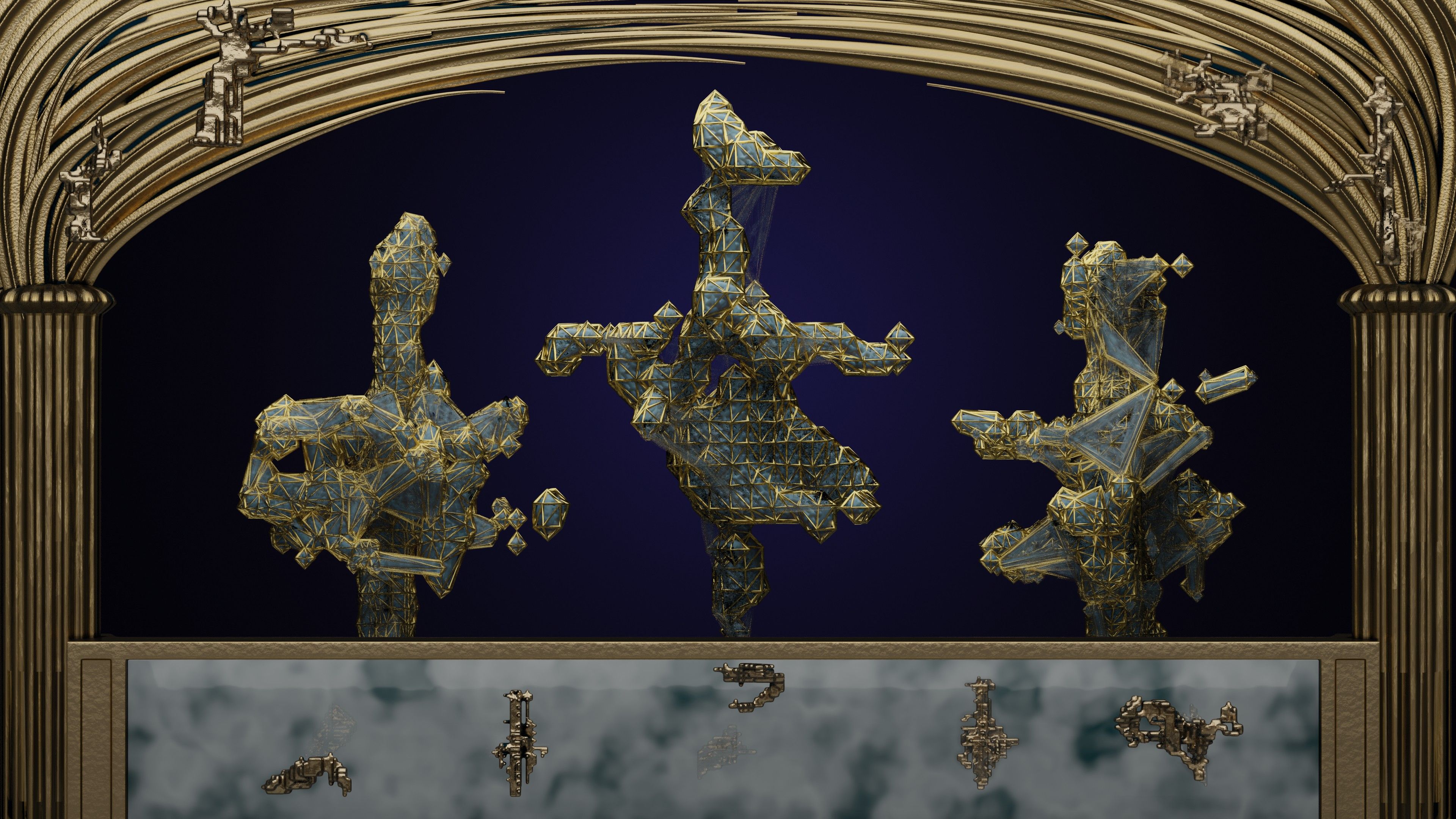

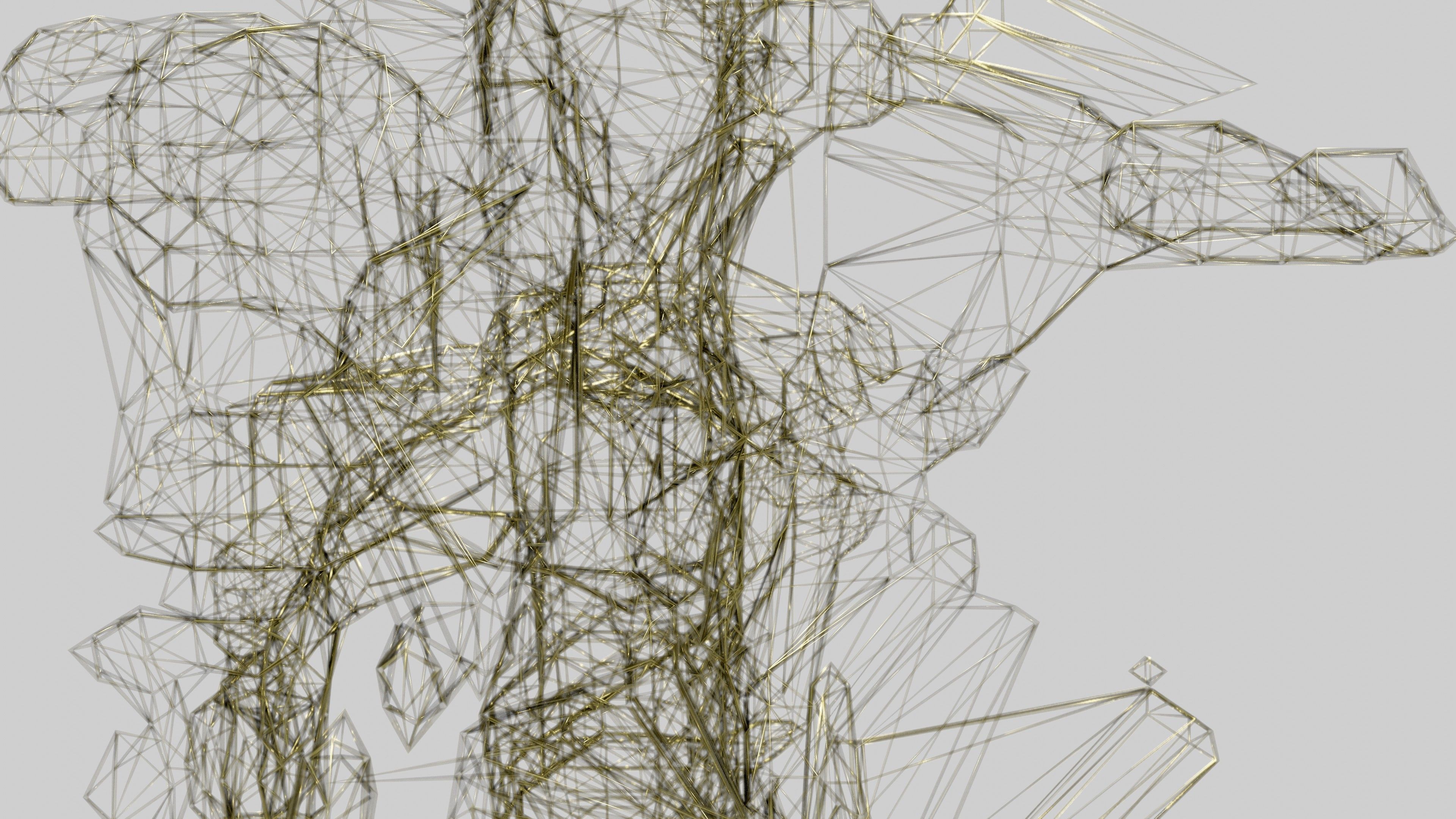

DïaloG

Maurice Benayoun & Refik Anadol

2021

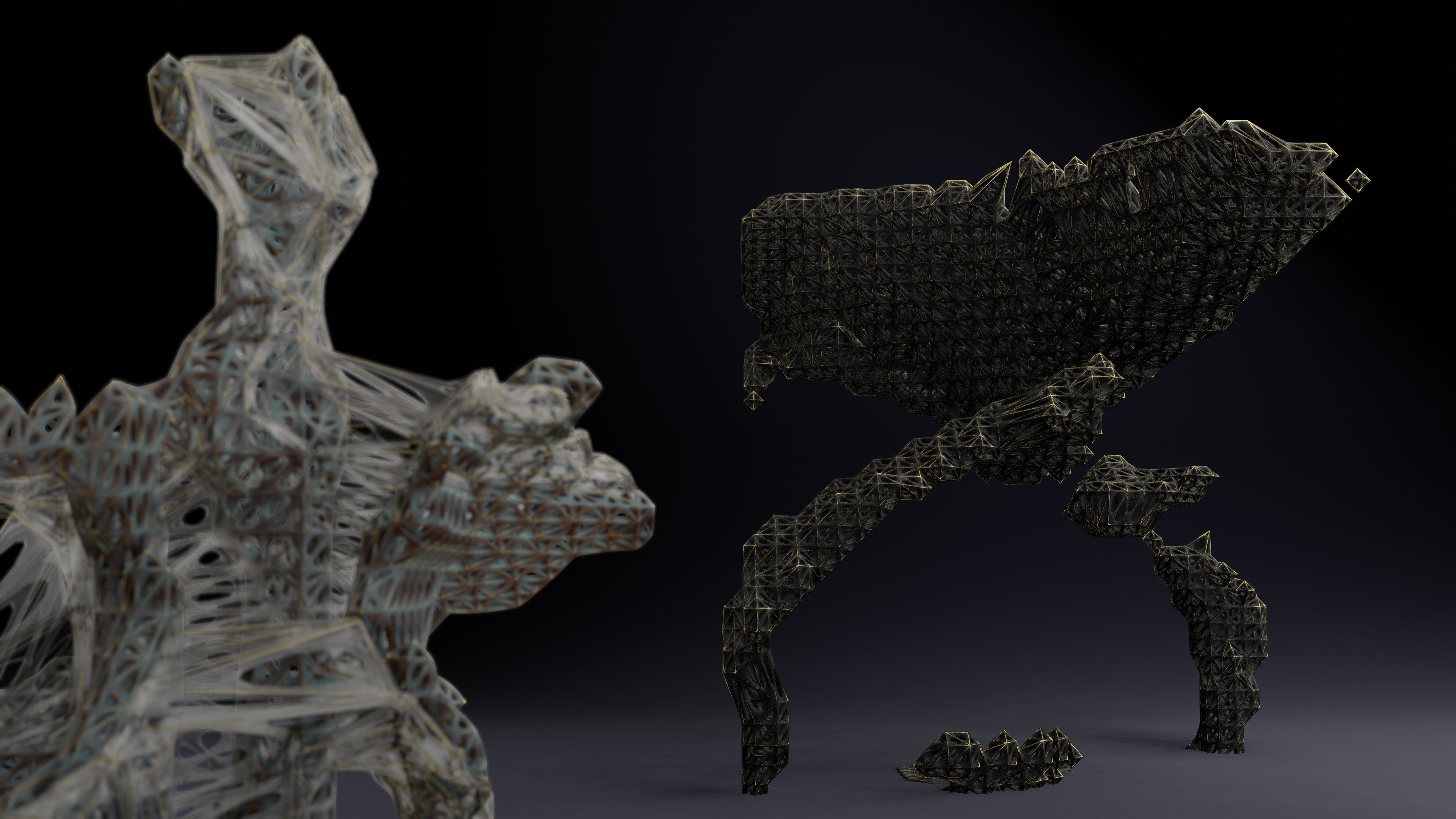

DïaloG is made of two “living” visual dynamic entities facing each other in the public space. They don’t look like the living beings we know. They don’t speak any language we know. They are aliens, strangers, immigrants. They are clearly, and desperately, trying to understand each other, to understand their new environment and their strange public. DïaloG reflects on the difficulty of building a mutual understanding beyond social and cultural differences. All that makes living beings what they are (their morphology, their behavior, their ethology, and, beyond language, their cognitive functions) makes the laborious process of learning from strangeness and alterity a reality. Endowed with ever-growing perceptive and cognitive capacities, aware of its environment, the artwork is now able to adapt itself, to evolve and to communicate as a more advanced living entity would. DïaloG tries to epitomize the emergence of the artwork as a subject, not only able to learn from its environment but also to dialogue with its public, and even, perhaps a bigger challenge, to try to understand other artworks.

Contributions and History of the Project

The DïaloG project stems from the respective practices of its authors, Refik Anadol and Maurice Benayoun, in the field of generative art, which converge on this endeavor in the context of the MindSpaces collaborative research project, “Art Driven Adaptive Outdoor and Indoor Design,” together with 12 different international partners, supported by the EU Horizon2020, S+T+Arts Lighthouse program. These partners are:

Center for Research and Technology (CERTH, project leader)

Aristotle University of Thessaloniki

Universiteit Maastricht (Netherlands)

Universidad Pompeu Fabra (Barcelona)

McNeel Europe (Barcelona)

UP2Metric (Barcelona)

Nurogame (Koln)

Zaha Hadid Architects (London)

MoBen (Maurice Benayoun, Tobias Klein, Paris/Hong Kong)

Analog Native (Refik Anadol, Germany/California)

L’Hospitalet de Llobregat (City, Spain)

City University of Hong Kong, School of Creative Media (Hong Kong SAR)

The project is also supported by HK RGC/EC joint program, through MindSpaces HK: “Collective neuro-design applied to art, architecture and indoor design.” School of Creative Media, City University of Hong Kong.

DïaloG is also supported by the School of Creative Media and ACIM fellowship through the Neuro-Design Lab.

Most of the partners contribute to different aspects of the research, such as sensing technology and motion capture, architecture, neuroscience, data analysis and language treatment, space representation and design, and public behavior analysis.

The original project is to be presented on the specific site of Tecla Sala, under the umbrella of our institutional partner L’Hospitalet de Llobregat, Spain (Marta Borregero), in collaboration with Espronceda (Alejandro Martin), McNeel Europe (Luis Fragada), Pompeu Fabra (Leo Wanner, Simon Mille, Alexander Shvets), Maastricht University (Beatrice de Gelder, Alexia Briassouli), Zaha Hadid (Tyson Hosmer), CERTH-ITI (Nefeli Geor, Evangelos Stathopoulos…), and Neuro-Design Lab SCM/CityU Hong Kong (Tobias Klein, Charlie Yip, Ann Mak, Sam Chan, Tim Leung, Tony Zhang). Many others contribute actively to the project and the Art Machine 2 conference will be the first opportunity to present, validate and test this collaboration in a concrete physical situation.

A real-time version of both artworks, at an early stage of development for the DïaloG project, was presented at Ars Electronica 2020 during the opening discussion by Refik Anadol and Maurice Benayoun, A Dialog about DïaloG, within the framework of the Ars Electronica Hong Kong Garden. The prospective generative work, Alien Life in the Telescope, was also presented in real time.

Artist Statement

DïaloG is an interactive urban media art installation exploring the themes of alterity, strangeness and immigration, in a performative way. The work presents two pieces that are at the same time artworks and aliens. They are confronted with a new environment where they don’t belong… yet. They will have to adapt their language, to build a common knowledge, integrating all new artifacts and natural phenomena that now constitute their environment. This includes the other living beings moving around them, and, eventually, they will have to understand each other. While we use the concept of language very broadly to include speech, performance, gesture, utterance, and even data, we focus on strangeness from an ontological perspective, trying to mark a terrain of possibilities for the intersection of interpersonal and digital experiences in the urban sphere. MoBen and Refik Anadol take the notion of “dia-logos” (through-word, through speech) embedded in the etymological roots of the word “dialogue,” understood more as an informational thread processed through an iterative feedback loop between perception and expression, and push it to a level of transactional complexity by activating the potential difference between virtuality and visuality. In this way, we aim to create a unique, site-specific language between each of the two works we are presenting as living entities and their unknown public, and also between our works, which are initially alien to each other: a language of unexpected and indefinable machine expressions that adapt themselves to the constant flow of data representing real-time environmental, societal, and human actions.

Biography

Maurice Benayoun. Artist, theorist and curator, Paris-Hong Kong, Prof. Maurice Benayoun (MoBen, 莫奔) is a pioneering figure of New Media Art. MoBen’s work freely explores media boundaries, from virtual reality to large-scale public art installations, from a socio-political and philosophical perspective. MoBen is the recipient of the Golden Nica and more than 25 international awards, and has exhibited in major international Museums of Contemporary Art, biennials and festivals in 26 different countries. He has also given over 400 lectures and keynotes around the world. With the Brain Factory and Value of Values: Transactional Art on the Blockchain, MoBen is now focusing on the “morphogenesis of thought,” between neuro-design and crypto currencies. Maurice Benayoun is professor at the School of Creative Media, CityU Hong Kong, and co-founder of the Neuro-Design Lab.

Refik Anadol (b. 1985, Istanbul, Turkey) is a media artist, director, and pioneer in the aesthetics of machine intelligence. He currently lives and works in Los Angeles, California and is also a lecturer and visiting researcher in UCLA’s Department of Design Media Arts. Anadol’s body of work locates creativity at the intersection of humans and machines. In taking the data that flows around us as his primary material and the neural network of a computerized mind as his collaborator, Anadol paints with a thinking brush, offering us radical visualizations of our digitized memories and expanding the possibilities of architecture, narrative, and the body in motion. He holds an MFA degree from UCLA in Media Arts, and an MFA degree from Istanbul Bilgi University in Visual Communication Design as well as a BA, summa cum laude, in Photography and Video.

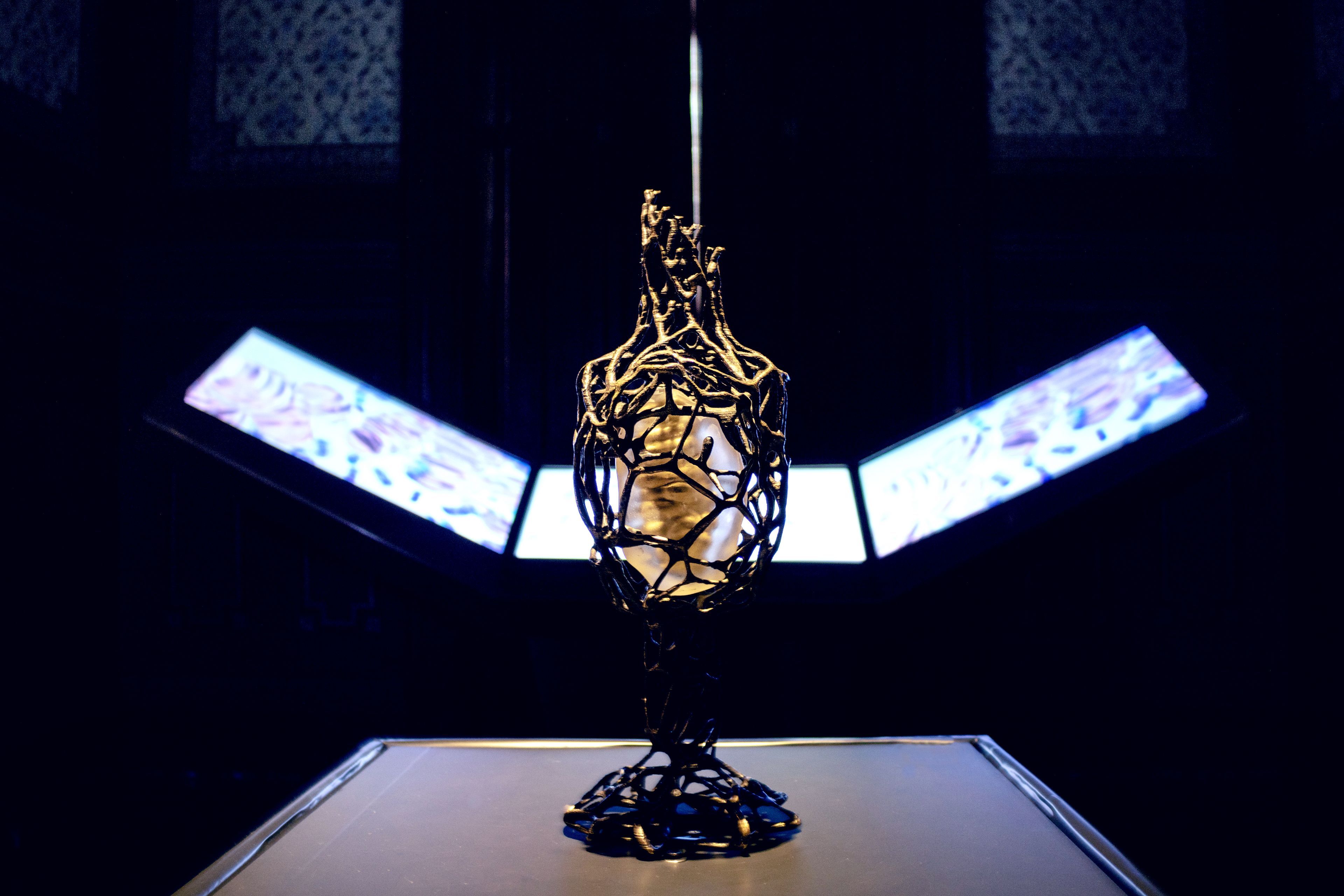

Ghost in the Cell—Synthetic Heartbeats

Georg Tremmel, BCL

2021

The project Ghost in the Cell—Synthetic Heartbeats is a development, extension and re-imagining of the work Ghost in the Cell, where we collaboratively created synthetic DNA for the virtual idol Hatsune Miku and introduced this digital, synthetic DNA into iPS-cell-derived, living cardiomyocytes, thereby giving the virtual, digital idol an actual, living and beating heart. Synthetic Heartbeats combines videos and images of the beating heart cells with the digital synthetic DNA to create fully synthetic, ongoing, observable heartbeats by using Deep Learning and Generative Adversarial Networks (GANs).

This project builds upon the universality of DNA as an information carrier and questions the differences and similarities between silicon- and carbon-based lifeforms. Synthetic Media, or the possible creation of life-like images, video and sound data, challenges society by creating doubt and suspicion not only of truthfulness, but also of the provenance of images and media. This work, Synthetic Heartbeats, does not aim to create “fake” heartbeats, but to synthesize heartbeats whose creation was informed not only by visual data, but also by the digital DNA data, which is also present in biological images of the heart cells.

Artist Statement

The virtual idol Hatsune Miku started life as a Vocaloid software voice, developed by the company Crypton Future Media in 2007, amongst a range of other software voices. However, the manga-style cover illustration of the software package captured the imagination of the Japanese public, and, coupled with the decision by Crypton Future Media to encourage the production of derivative graphics, animations and videos by the general public, the software transformed itself into a virtual pop idol, producing records and staging live shows. While the voice was given a collective image, we decided to give her a body and a heart. We asked Hatsune Miku fans to create digital synthetic DNA that could contain not only biological data, but also encrypted messages, images and music. This digital DNA was synthesized into actual DNA and inserted into iPS cells, which were then differentiated into cardiomyocytes (heart cells), which started beating spontaneously. The work was shown in the 21st Century Museum in Kanazawa, Japan, and the audience could see the living, beating heart of Hatsune Miku during the exhibition.

Biography

BCL is an artistic research framework, founded by Georg Tremmel and Shiho Fukuhara in 2007 with the goal of exploring the artistic possibilities of the nano-bio-info-cogno convergence. Other works by BCL include Common Flowers / Flower Commons, where GMO Blue Carnations are cultured, open-sourced and released, White Out, one of the first bio-art works that use CRISPR for artistic research, and Biopresence, which proposed, speculated and realized the encoding of human DNA within the DNA of a tree for hybrid afterlife.

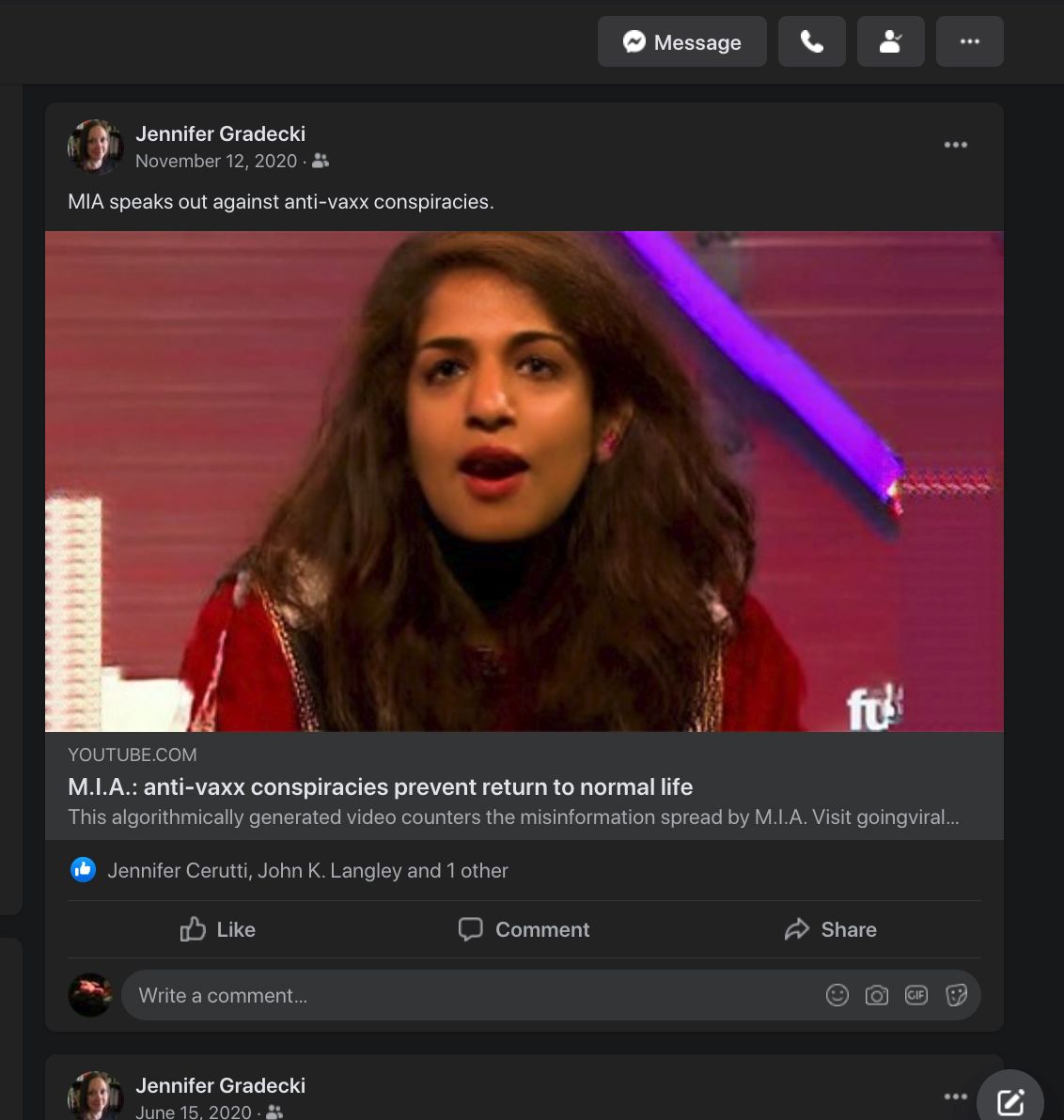

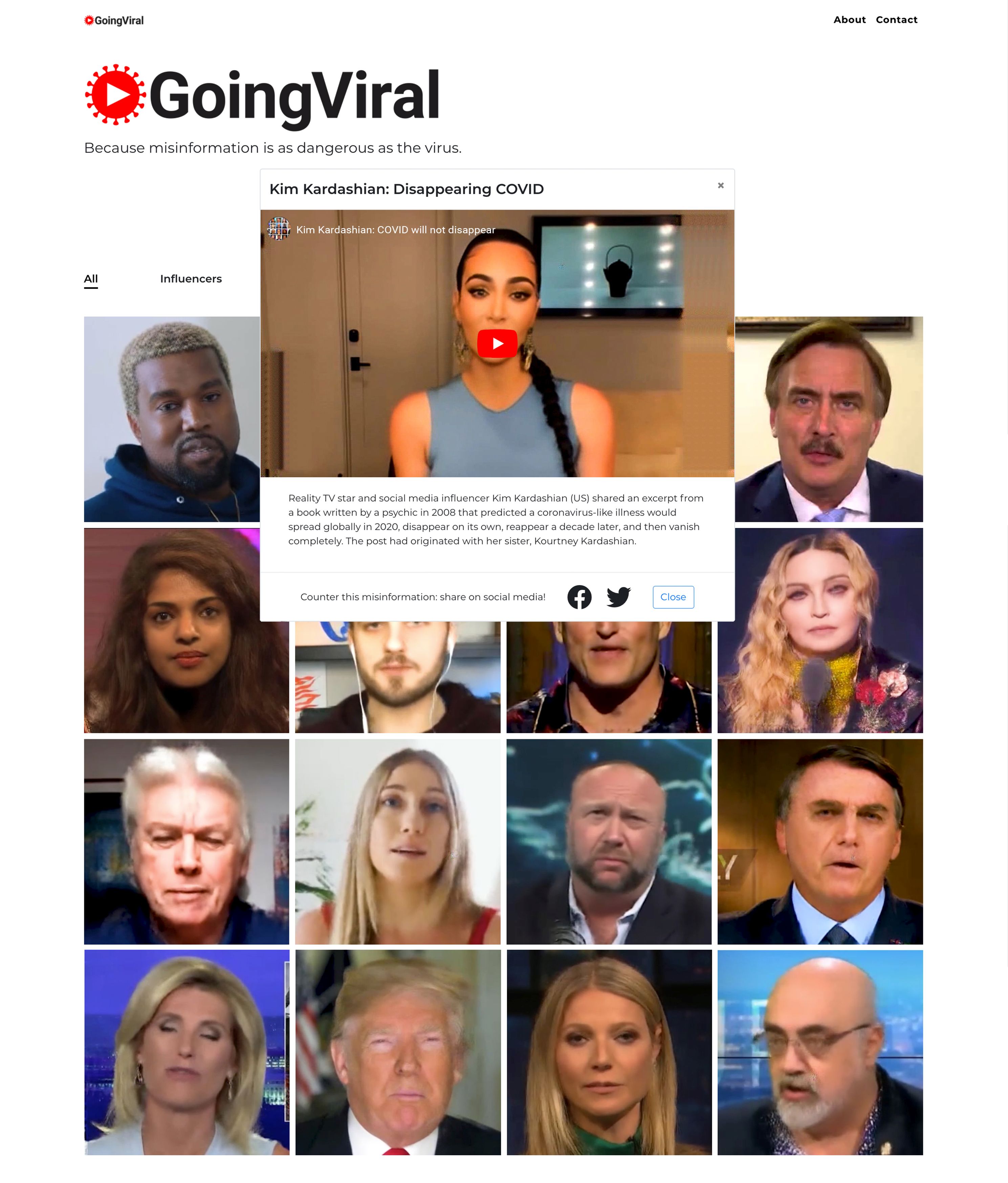

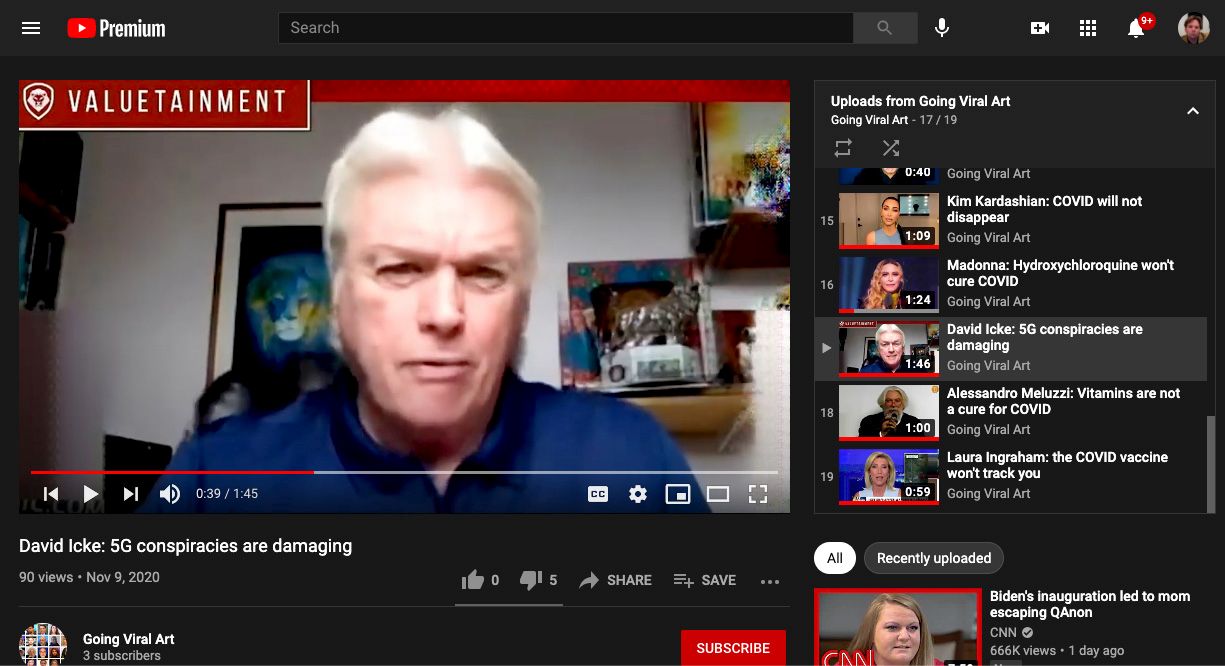

Going Viral

Derek Curry & Jennifer Gradecki

2020-21

Going Viral is an interactive artwork that invites people to intervene in the spreading of misinformation by sharing informational videos about COVID-19 that feature algorithmically generated celebrities, social media influencers, and politicians that have previously shared misinformation about coronavirus. In the videos, the influencers deliver public service announcements or present news stories that counter the misinformation they have promoted on social media. The videos are made using a conditional generative adversarial network (cGAN) that is trained on sets of two images where one image becomes a map to produce a second image.

Celebrities and social media influencers are now entangled in the discourse on public health, and are sometimes given more authority than scientists or public health officials. Like the rumors they spread, social media influencers and the online popularity of celebrities are constructed through the neural network-based content recommendation algorithms used by online platforms. The shareable YouTube videos present a recognizable, but glitchy, reconstruction of the celebrities. The glitchy, digitally produced aesthetic of the videos stops them from being classified as “deepfakes” and removed by online platforms, and helps viewers reflect on the constructed nature of celebrity and question the authority of celebrities on issues of public health and the validity of information shared on social media.

Going Viral was commissioned by the NEoN Digital Arts Festival.

Artist Statement

Our collaborative artistic research combines the production of artworks with research techniques from the humanities, science and technology studies, and computer science. We use a practice-based method where research into a topic or a technology is used to generate an artwork. This often requires building or reverse-engineering specialized techniques and technologies. The built artifact, including the interactions it fosters, becomes a basis for theorization and critical reflection. Our practice is informed by institutional critique and tactical media and is often intended as an intervention into a social, political, or technological situation. Our projects take a critical approach to the tools and methods we use, such as social media platforms, machine learning techniques, or dataveillance technologies.

Biography

Jennifer Gradecki is a US-based artist-theorist who investigates secretive and specialized socio-technical systems. Her artistic research has focused on social science techniques, financial instruments, dataveillance technologies, intelligence analysis, artificial intelligence, and social media misinformation. www.jennifergradecki.com

Derek Curry is a US-based artist-researcher whose work critiques and addresses spaces for intervention in automated decision-making systems. His work has addressed automated stock trading systems, Open Source Intelligence gathering (OSINT), and algorithmic classification systems. His artworks have replicated aspects of social media surveillance systems and communicated with algorithmic trading bots. https://derekcurry.com/

Curry and Gradecki have presented and exhibited at venues including Ars Electronica (Linz), New Media Gallery (Zadar), NeMe (Cyprus), Media Art History (Krems), ADAF (Athens), and the Centro Cultural de España (México). Their research has been published in Big Data & Society, Visual Resources, and Leuven University Press. Their artwork has been funded by Science Gallery Dublin and the NEoN Digital Arts Festival.

Gradation Descent

Nirav Beni

2020

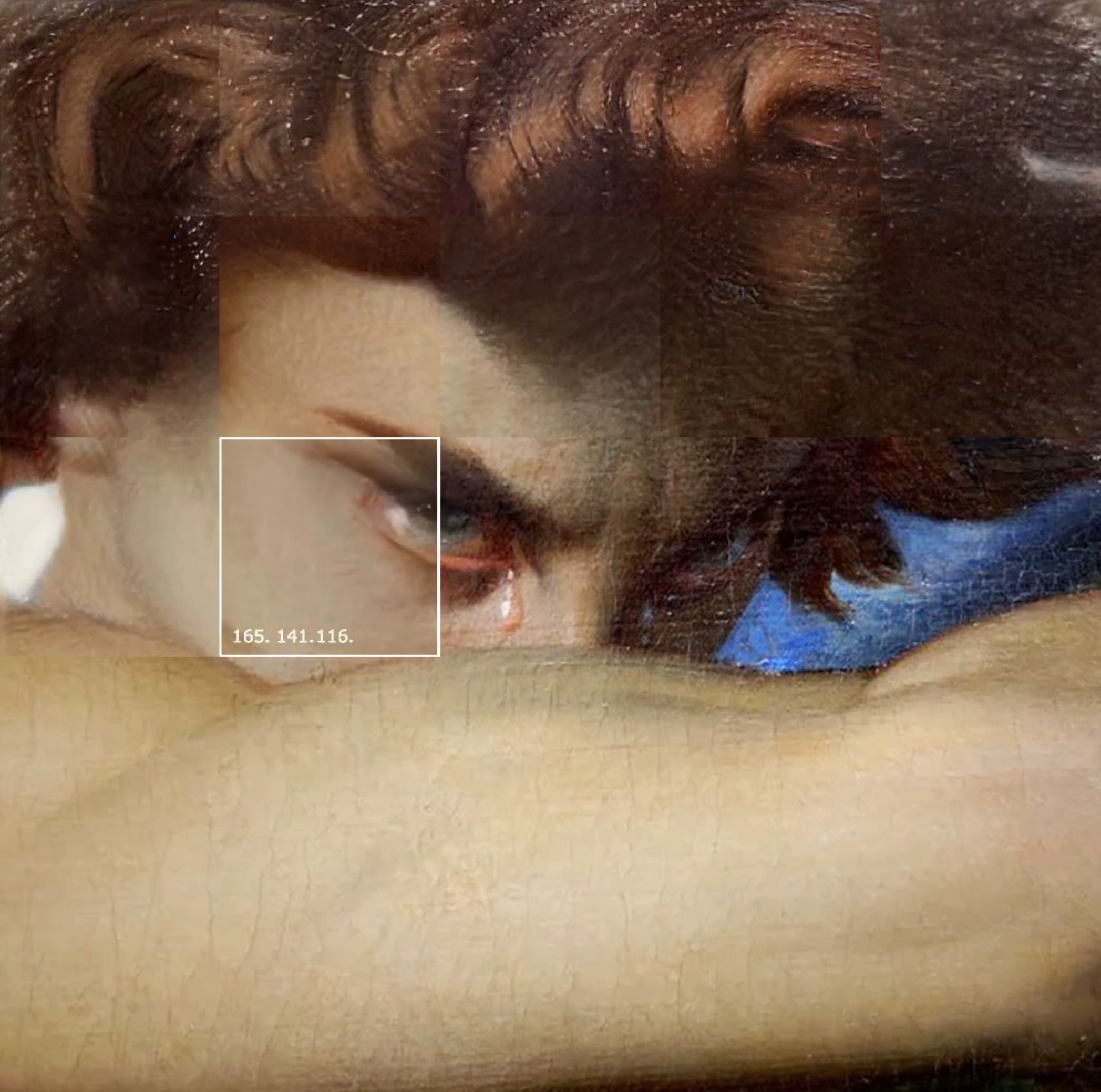

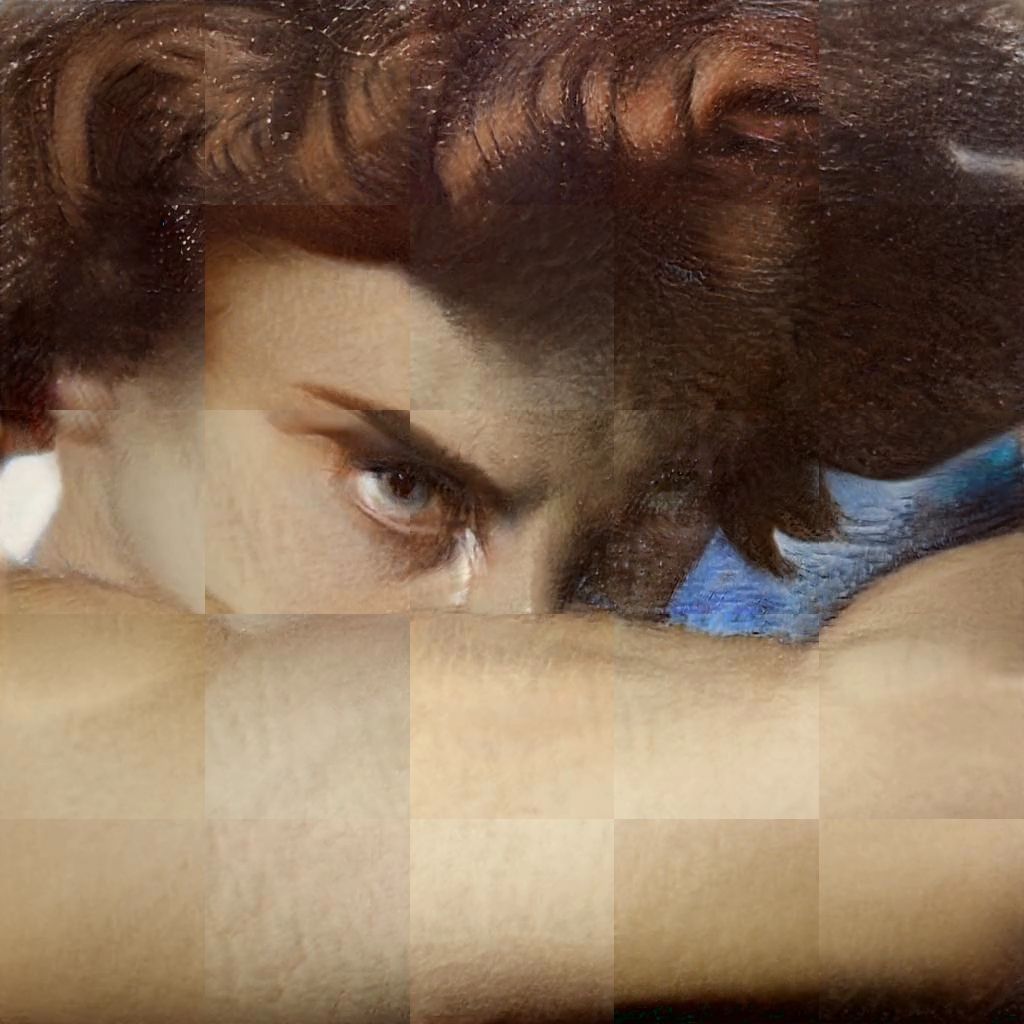

This work is a short audiovisual piece that presents a conceptual imitation, or visual reinterpretation, of machine learning image processing algorithms acting on Alexandre Cabanel’s painting Fallen Angel.

Convolutional Neural Network algorithms (CNNs) comprise subdivided analyses of pixels, iterating and processing over multi-layered clustering of smaller blocks as they run. Pooling functions, convolutional image processing and feature reductions are common methods applied by these algorithms.
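As a rough illustration of the two operations named above, the following sketch applies a small convolution and a max-pooling step to a tiny grayscale patch. It is not the code used in the artwork; the 5x5 patch, the edge kernel, and the function names are assumptions chosen for the example.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a block are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Hypothetical 5x5 patch: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical kernel that responds to a dark-to-bright vertical edge.
edge_kernel = [[-1, 1], [-1, 1]]

features = convolve2d(image, edge_kernel)  # 4x4 feature map
pooled = max_pool(features)                # 2x2 reduced map
```

The convolution responds only where the kernel straddles the edge, and pooling keeps the strongest response in each 2x2 block, which is the kind of block-wise feature reduction the text refers to.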

The moving images that make up the video are generated by a Generative Adversarial Network (GAN), which is trained on “real” images to produce its own “fake,” almost identical, but slightly inaccurate versions. After the algorithm slides across the original image and each block of the original image is processed, an AI generated frame is left in its place, creating this “fake” collage as the output image, emphasizing both similarities and discrepancies. The movement of the sliding motion is triggered by audio signals from a melancholic piano piece that was produced to accompany the teary visuals of the fallen angel.
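The block-by-block replacement described above can be sketched as follows. This is only a schematic reading of the process: `fake_generator` is a hypothetical stand-in for the trained GAN, the image is a small grid of numbers, and the audio trigger is left out (each yielded frame could be advanced by an audio event instead).

```python
def slide_and_replace(image, block, generate):
    """Visit each block-by-block tile left to right, top to bottom, replace it
    with generate(tile), and yield a copy of the whole canvas after each step."""
    canvas = [row[:] for row in image]
    for top in range(0, len(image), block):
        for left in range(0, len(image[0]), block):
            tile = [row[left:left + block] for row in image[top:top + block]]
            new_tile = generate(tile)
            for i, new_row in enumerate(new_tile):
                canvas[top + i][left:left + len(new_row)] = new_row
            yield [row[:] for row in canvas]


def fake_generator(tile):
    """Hypothetical stand-in for a GAN: returns a near copy with every value nudged by 1."""
    return [[min(255, v + 1) for v in row] for row in tile]


# Hypothetical 4x4 "painting" processed in 2x2 blocks.
original = [[10 * r + c for c in range(4)] for r in range(4)]
frames = list(slide_and_replace(original, 2, fake_generator))
```

Each frame is a collage of already replaced and still original tiles, so playing the frames in order reproduces the sliding motion across the picture.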

The idea of the unseen, of algorithms being opaque and black boxed, inspired the idea of trying to unbox and interpret the operations of these AI machines in a way that is most familiar to us—through the human sense of sight. The human gaze is often crucial in our perception, and the Fallen Angel painting truly encapsulates this aesthetic.

Over time, as these algorithms become more ubiquitous, they become harder to decipher, either through the unpredictability of their operations, their general complexity, or maybe just through intellectual property and proprietary safeguarding. AI has the potential to expand into the realms of machine consciousness, agency and superintelligence. And as a potential technological singularity draws closer, our understanding of, or even control over, these systems will weaken.

Artist Statement

I position myself at the intersection of art and technology, where I can incorporate AI, machine learning and other new media into my practice. I aim to create audio-visual, immersive and interactive installations that create shared and evocative experiences that touch on themes such as the human-machine dynamic.

Biography

Nirav Beni is a South African-born engineer and new media artist. He has a BSc degree in Mechatronics Engineering from the University of Cape Town, and is now studying for an MA in Information Experience Design at the Royal College of Art in London.

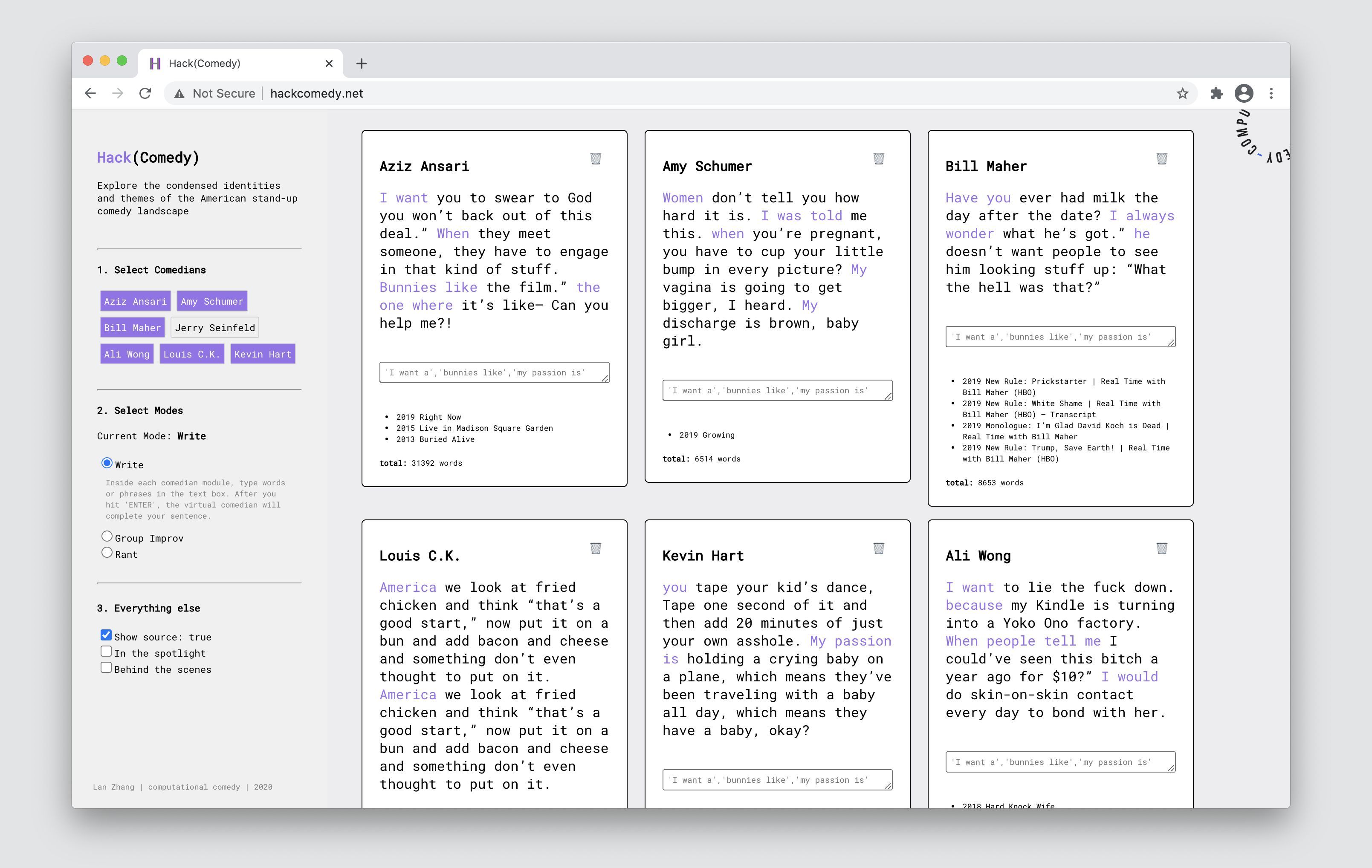

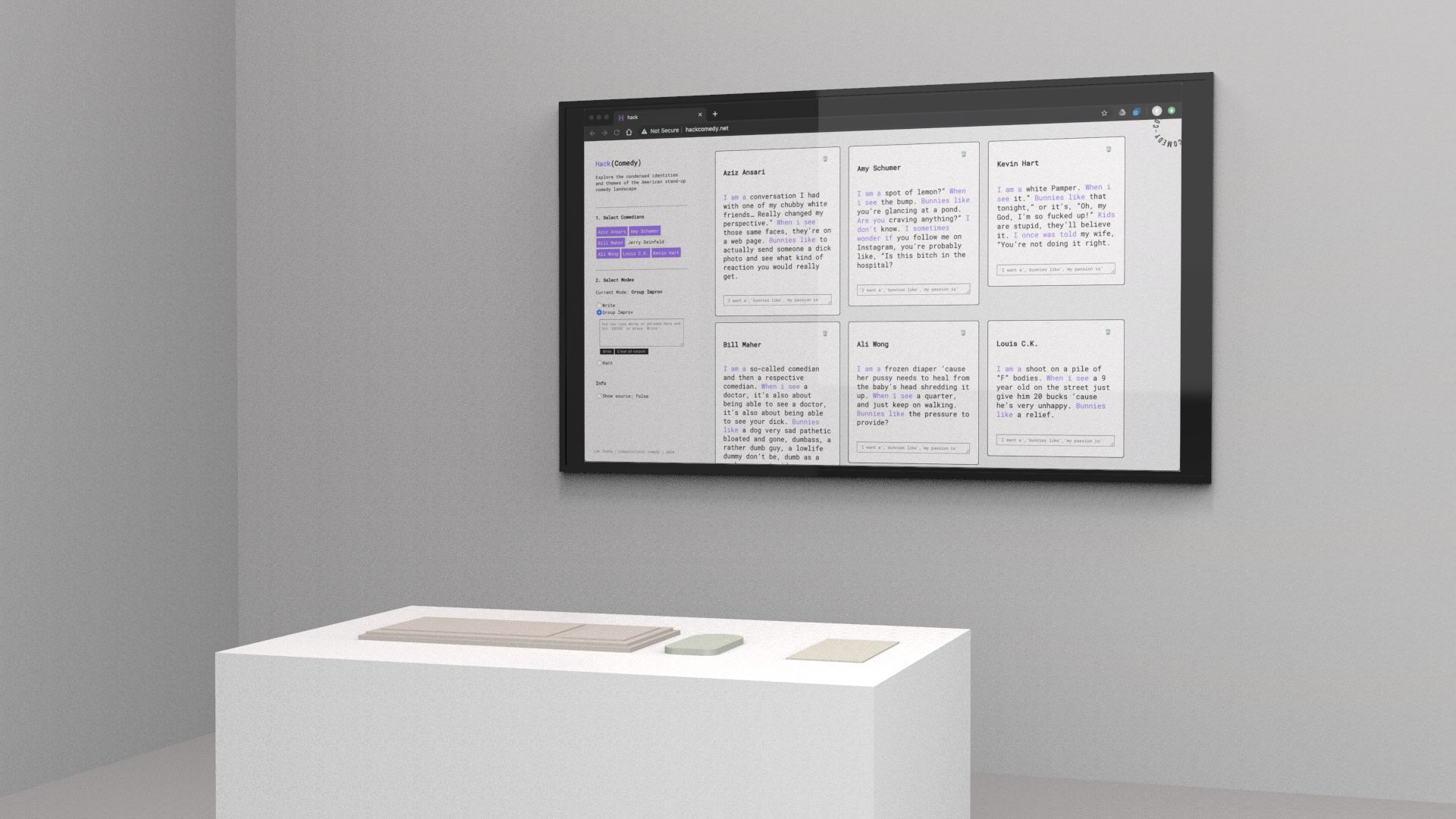

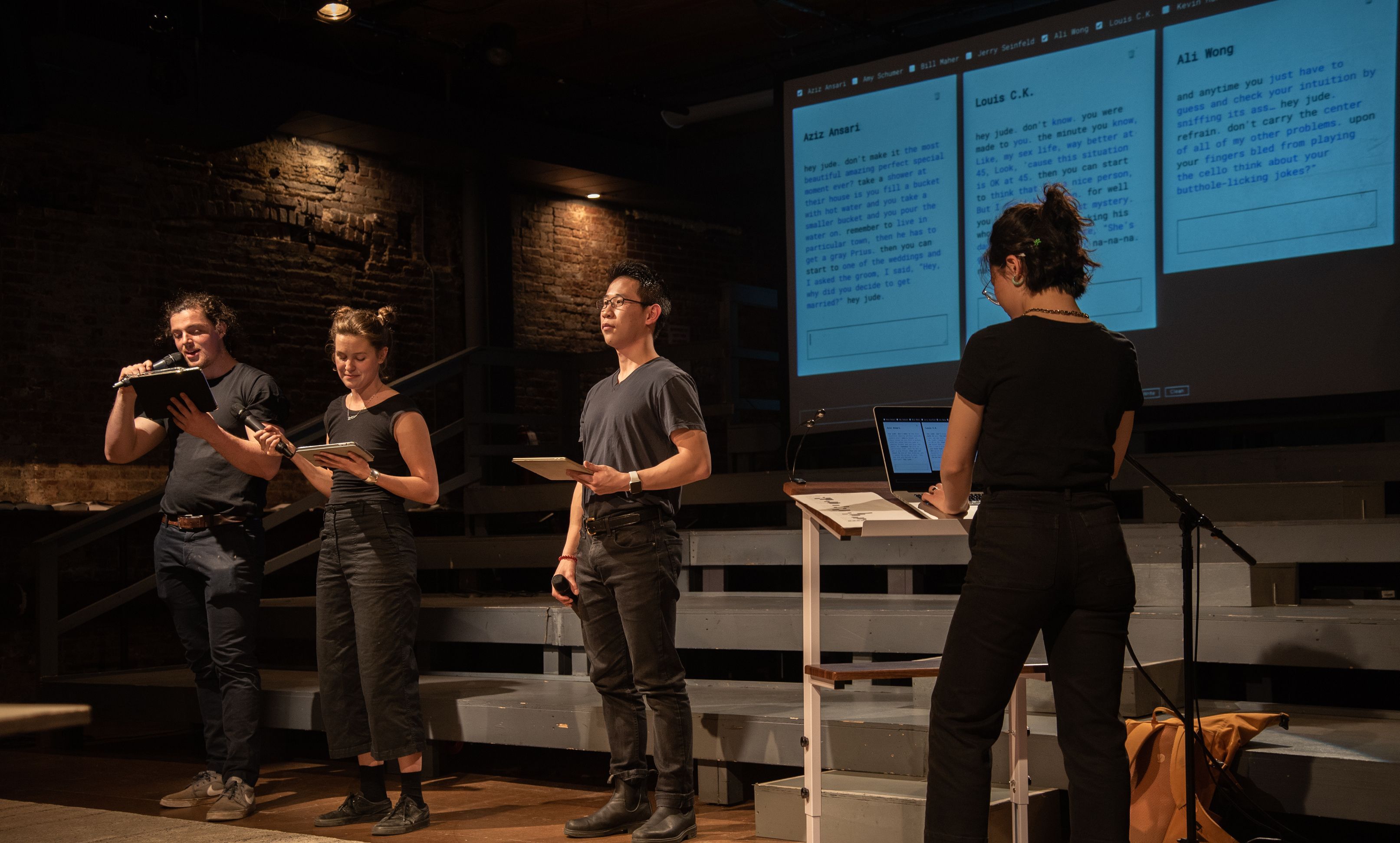

Hack (Comedy)

Lan Zhang

2020

Hack (Comedy) is a computational comedy net art interface as well as a performance. Hack (Comedy) aims to interrogate our perception of contextual humor through live procedural generations that reflect the condensed themes and identities in the American comedy landscape. With text input such as words and phrases, virtual comedian modules will complete writing sentences using word references from the transcript compilations scraped from the internet. In the performance, the generated outcomes will be delivered by human actors.

Computational comedy stands for producing comic text using procedural methods. “Hack” is a double entendre here: 1. Gaining unauthorized access to a network or a computer. 2. Copying joke bits from the original comedians.

Artist Statement

Lan Zhang, the artist, whose native language isn’t English and who has struggled to become culturally competent, wants to use this unexpected way of programming to reach the American humour pedestal. The process, however, reinforces the failure of the artist’s ideal pursuit of cultural competency and the comic absurdity of the pursuit itself.

Biography

Lan Zhang (she/her) is a Chinese creative developer and computational artist currently based in NYC in the United States. She enjoys creating tools on the web and experimenting with natural language processing, play experiences, and networked performances. Lan received her MFA in Design and Technology from Parsons School of Design. She has performed or shown her work at La Mama Experimental Theater NYC Culture Hub ReFest in NYC, Babycastles in NYC, Shanghai Power Station of Art, PRINT Magazine, Graphis New Talent, and Parsons 2020 Hindsight Festival.

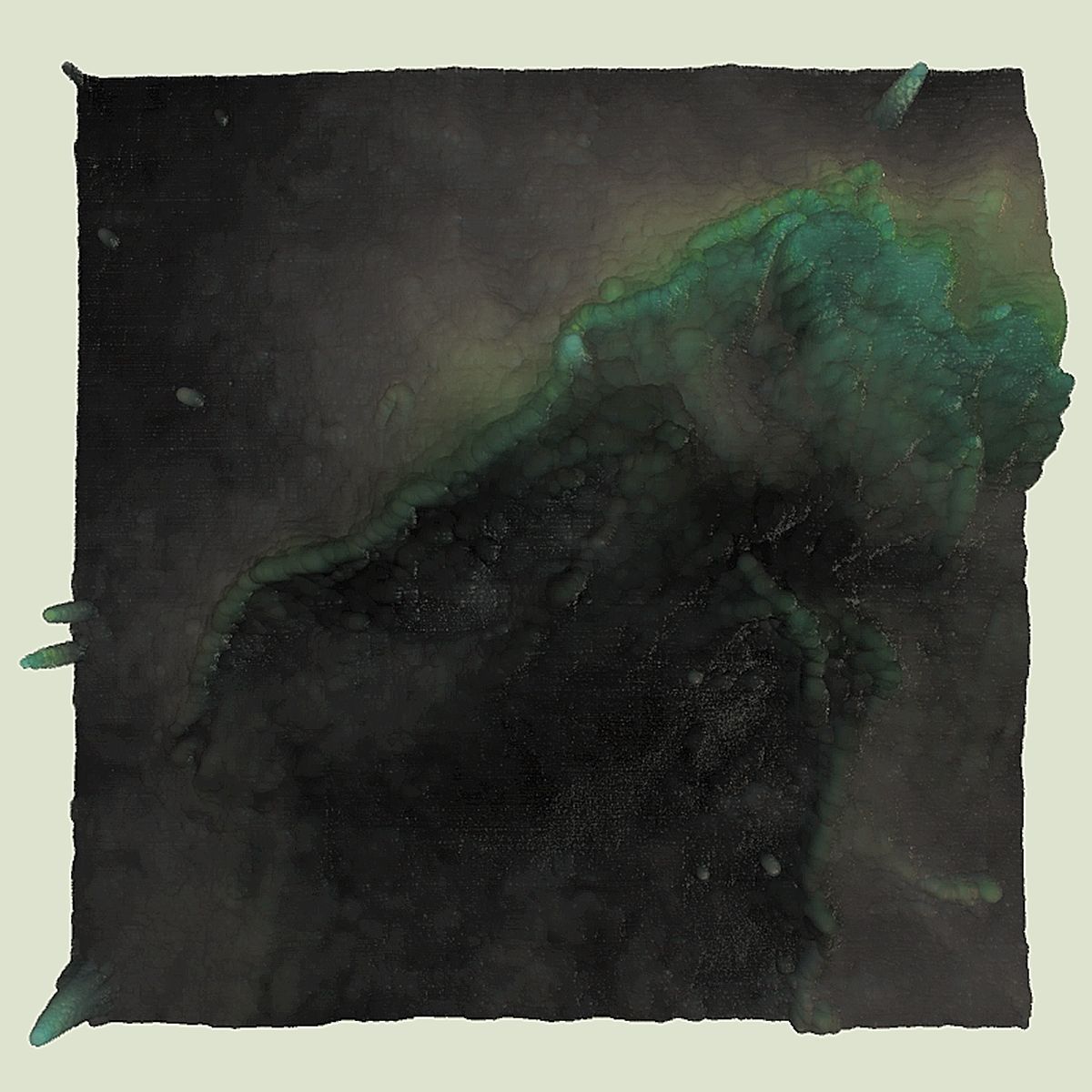

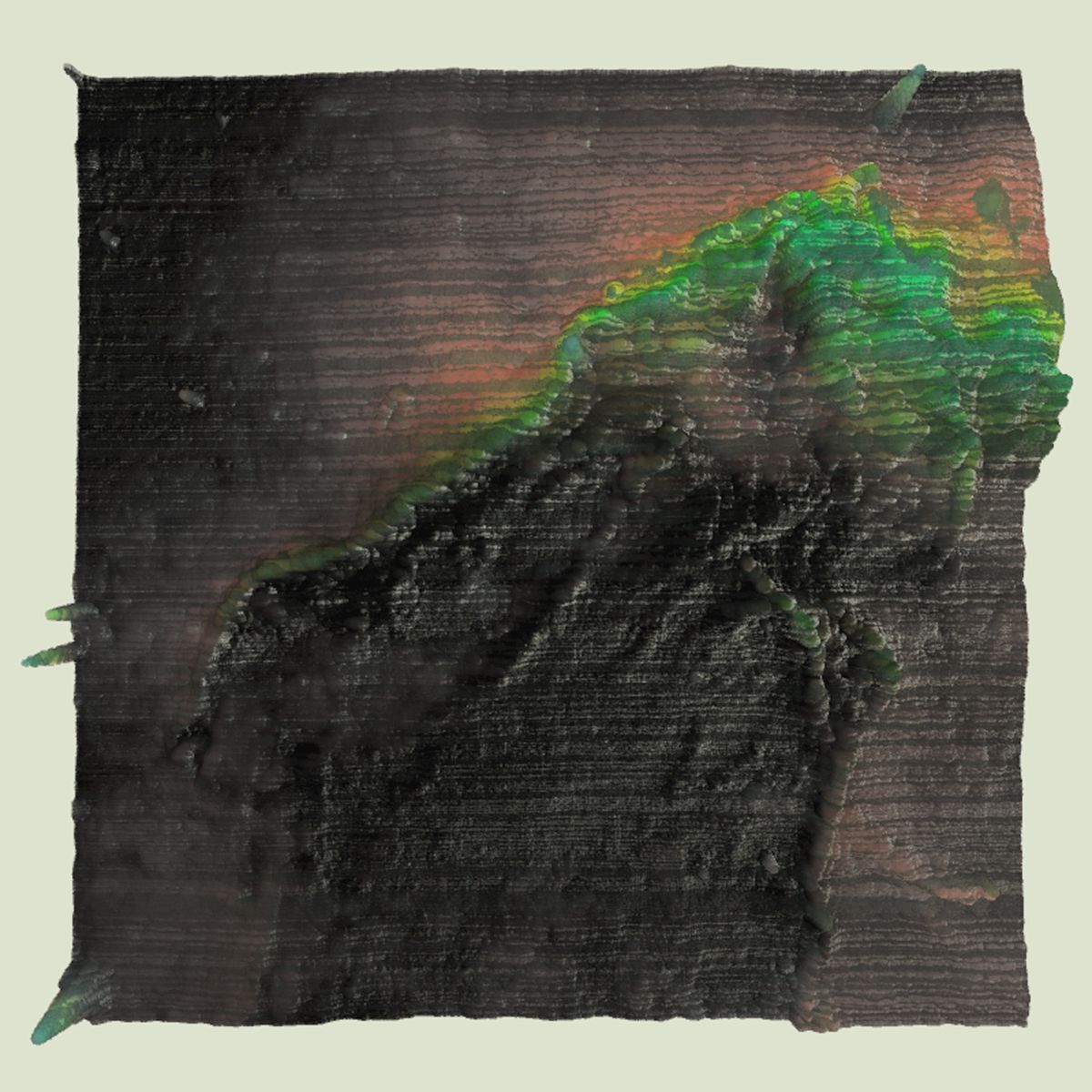

Heat, Grids Nos. 4 & 5

Angela Ferraiolo

2020

Heat is an example of an adaptive system. The artwork is made up of a collection of small computation units called “agents.” Agents compute their own states (color, size, location) based on inputs from their environment and on their relationship to other agents in their neighborhood. This means that an agent's state changes over time depending on its surroundings. The grids in these systems are made up of two layers of agents. Each layer is organized on its own network of links. Agents receive system input at regular time steps. As the “heat” entering a system increases, that energy is “absorbed” by agents and reflected as a change in color, size, or x, y, or z location. As a feature of each agent, certain properties can be more or less resistant to heat or, following the project’s design metaphor, more or less at risk for environmental stress.
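A minimal sketch of this kind of agent update is given below. It is not the artist's implementation: the `resistance` scale, the `coupling` factor, the neighbor-averaging rule, and the blue-to-red color mapping are all assumptions made for illustration, and only a single property (color) responds to heat.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    resistance: float  # hypothetical scale: 0.0 absorbs all heat, 1.0 absorbs none
    heat: float = 0.0
    neighbors: list = field(default_factory=list, repr=False, compare=False)

    def absorbed(self, env_heat):
        """Share of the incoming heat that this agent takes up."""
        return env_heat * (1.0 - self.resistance)


def step(agents, env_heat, coupling=0.5):
    """One synchronous time step: every agent absorbs heat from the environment
    and drifts toward the mean heat of its neighbors."""
    new_heats = []
    for a in agents:
        own = a.heat + a.absorbed(env_heat)
        if a.neighbors:
            mean = sum(n.heat for n in a.neighbors) / len(a.neighbors)
            own += coupling * (mean - a.heat)
        new_heats.append(own)
    for a, h in zip(agents, new_heats):
        a.heat = h


def color(agent, max_heat=10.0):
    """Map heat to an RGB tuple running from blue (cool) to red (hot)."""
    t = max(0.0, min(1.0, agent.heat / max_heat))
    return (round(255 * t), 0, round(255 * (1 - t)))


# Three hypothetical agents linked in a line, from least to most heat resistant.
a, b, c = Agent(0.0), Agent(0.5), Agent(1.0)
a.neighbors, b.neighbors, c.neighbors = [b], [a, c], [b]
agents = [a, b, c]
step(agents, env_heat=2.0)
heats = [agent.heat for agent in agents]
```

Under these assumptions the least resistant agent heats up fastest and shifts color first, while its links to neighbors spread the disturbance through the grid over later steps.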

Artist Statement

Heat continues my use of adaptive systems to explore economic, social, and political concerns. Two ideas came together to form the basis of the project: first, an imagination of the Anthropocene as an existential threat, an accumulating force so fundamentally damaging it moves towards the mythological and visual abstraction; second, the knowledge that global warming is an environmental risk that kills selectively. Instances of heat damage are local and can feel random or idiosyncratic even as they pose a threat to the whole. One way to visualize this phenomenon might be to build a disruption in a formal pattern at a specific location. Since any break in the formal pattern of a grid is easily legible, and because the form of the grid has particular resonances with the histories and economic strategies of capitalist and post-capitalist societies, the grid structure was chosen as a good candidate for algorithmic disruption.

Biography

Angela Ferraiolo is a visual artist working with adaptive systems, noise, randomness, and generative processes. Her work has been screened internationally including at the Nabi Art Center (Seoul), SIGGRAPH (Los Angeles), ISEA (Vancouver, Hong Kong), EVA (London), the New York Film Festival (New York), Courtisane Film Festival (Ghent), the Australian Experimental Film Festival (Melbourne), and the International Conference of Generative Art (Rome, Venice). Professionally she has worked for RKO Studios, H20, Westwood Productions, and Electronic Arts. She teaches at Sarah Lawrence College where she is the founder of the new genres program in visual arts.

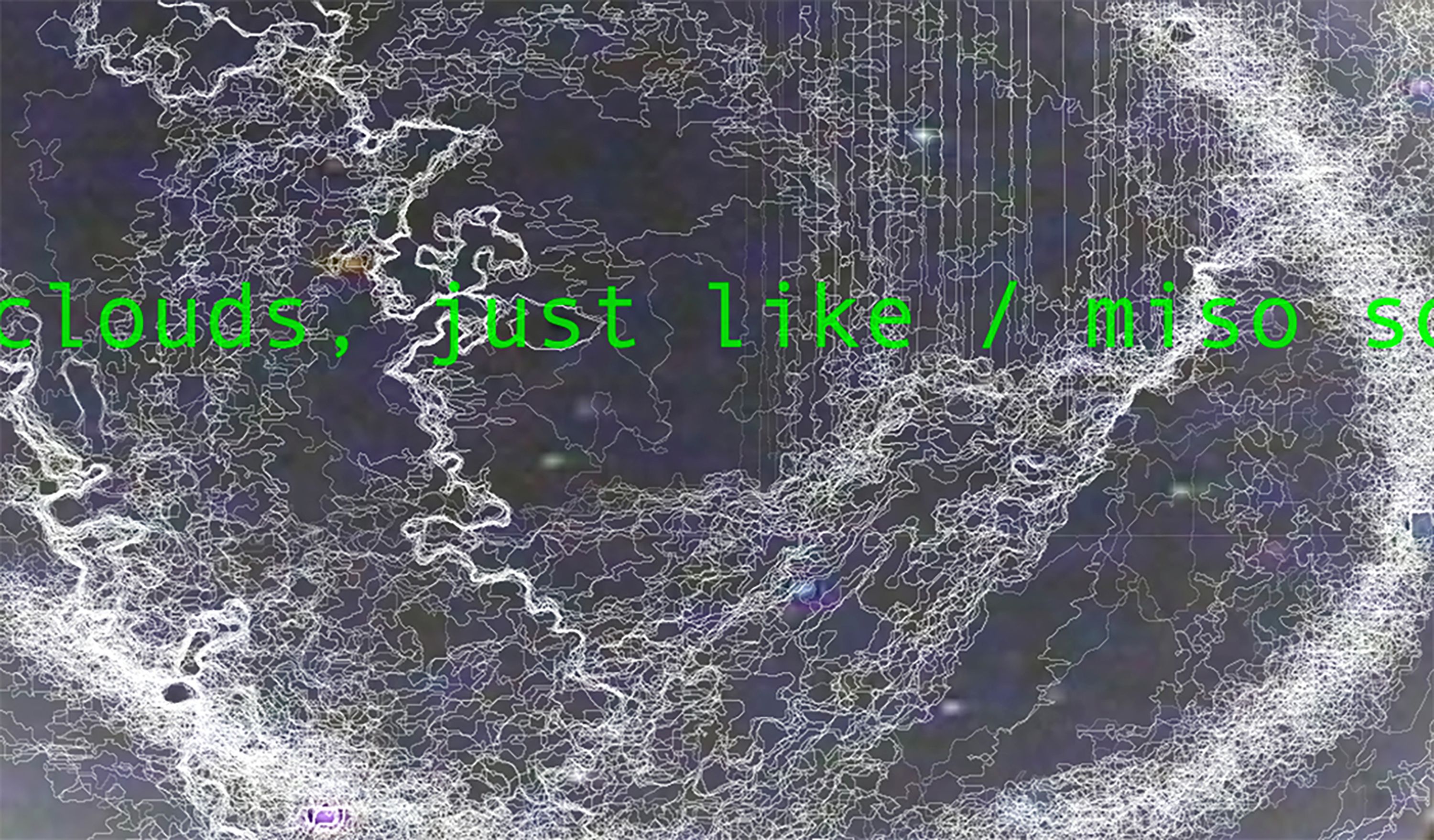

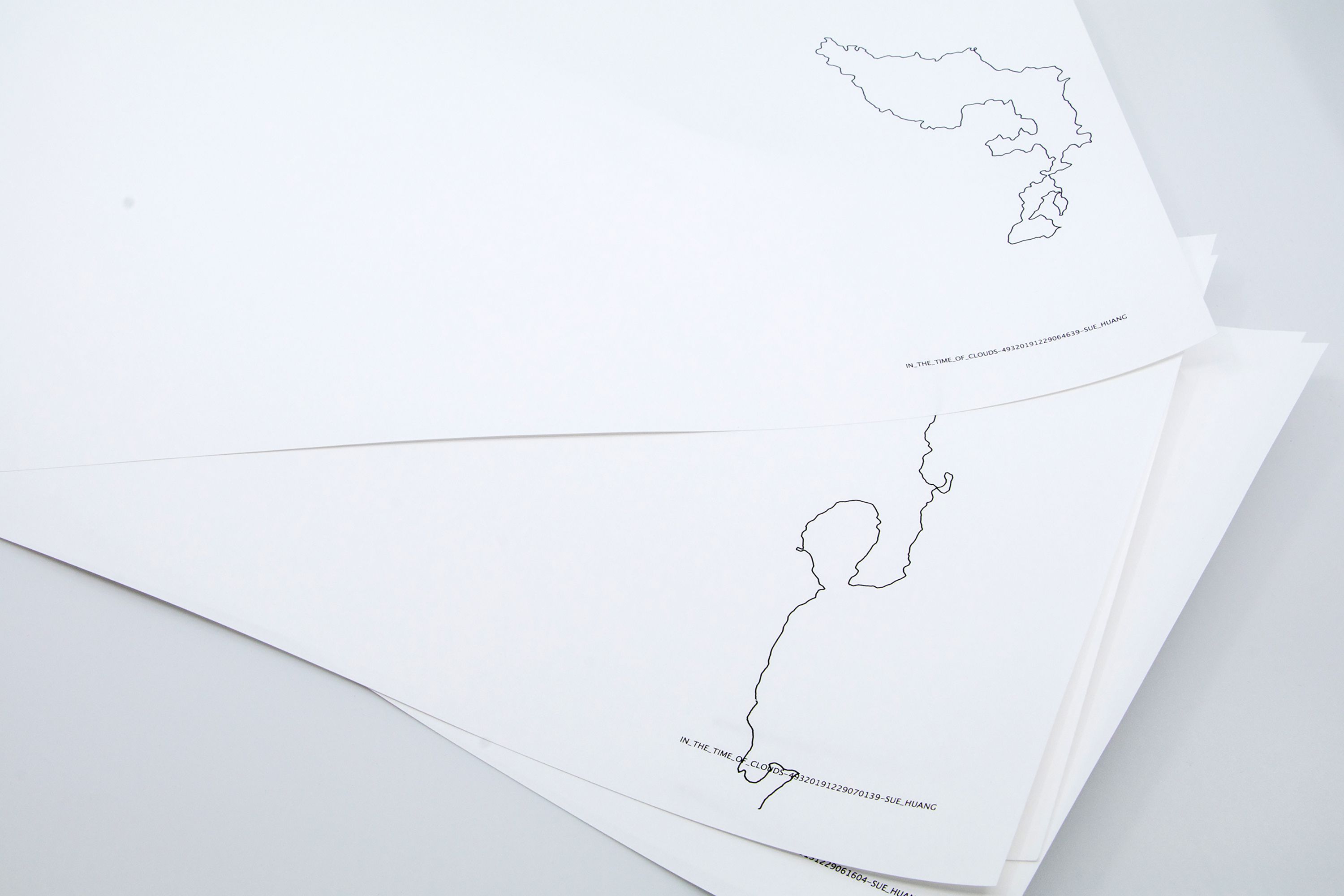

In the Time of Clouds

Sue Huang

2019

In the Time of Clouds is a mixed-media installation that utilizes the networked “cloud” to explore our collective sensory relationship to the sky. Responding to a February 2019 Nature Geoscience article that speculates about a possible future without clouds, the project attempts to archive cloud forms and document their influence on our collective imagination before they disappear from our atmosphere due to rising carbon dioxide concentrations. Utilizing both social media discourse about clouds and live video streams from public observatory cameras, the project amalgamates linguistic and visual data, mining this data to create an atmospheric triptych of poetry, ice cream, and ceramics.

The installation is composed of three intertwined parts: Part I (The Observatories), a series of videos that combine algorithmically generated poems and live streams from networked observatory webcams; Part II (Terracotta Clouds), a collection of hand-built dessert wares based on unique cloud forms culled from the videos; and Part III (Cloud Ice Cream) (documented in this exhibition), an ice cream whose flavor profile is derived from social media discourse speculating about the taste of clouds, a “cloud ice cream.”

*This exhibition includes image documentation of the cloud ice cream.

Artist Statement

My new media and installation-based art practice addresses collective experience and engages with interwoven digital and analog processes. My current projects explore topics of ecological intimacy and speculative futures. Much of my work utilizes digital material from online public spaces including found social media text, found image and video media, and digitized archives. This work is processed through different computational/algorithmic techniques, most recently including artificial intelligence, natural language processing, and camera vision. I am interested in the social and cultural implications of utilizing new media technologies and programming to access and process collective materials and how the usage of these materials can open up new modes of conversation about our collective experiences as captured in online public spaces. These projects are created using a variety of media and formats depending on the concept, and I often draw upon site-specific and public engagement methodologies in the presentation of the work.

Biography

Sue Huang is a new media and installation artist whose work addresses collective experience. Her current projects explore ecological intimacies, human/nonhuman relations, and speculative futures. Huang has exhibited nationally and internationally, including at the Museum of Contemporary Art (MOCA), Los Angeles; the Contemporary Arts Center (CAC) in Cincinnati; ISEA in Montreal; Ars Electronica in Linz; the Beall Center for Art + Technology in Irvine; and Kulturhuset in Stockholm, among others. She received her MFA in Media Arts at the University of California, Los Angeles (UCLA) and her BS in Science, Technology, and International Affairs from the Walsh School of Foreign Service at Georgetown University. Huang is currently a member of the Creative Science track at NEW INC, supported by Science Sandbox, Simons Foundation, and is an assistant professor of Digital Media & Design at the University of Connecticut.

Microbial Emancipation

Maro Pebo, Malitzin Cortes & Yun W. Lam

2020

A reliquary holds mitochondria from the artist’s blood, organelles that were once free-living bacteria. Looking at the history of our cells, we can find traces of an ancient bacterium in our mitochondria. Mitochondria were at some point independent bacteria, and are now organelles that, among other fundamental tasks, give us the energy to live.

Microbial Emancipation is the violent extraction of the mitochondria out of an animal cell to exist as an independent entity. A forcing out as an act of unwanted liberation, unrequested emancipation. A literal undoing of the unlikely yet fundamental collaboration that allowed for most known forms of life. It undoes in order to make it visible.

Artist Statement

Establishing an unlikely collaboration, the transdisciplinary team comprising an architect, a biochemist, and an artist worked together to materialize the sacrifice of the blood cells to carry out a mitochondrial extraction, a process that underscores symbiogenesis and therefore contributes to a post-anthropocentric turn.

Biography

Maro Pebo. Weaving collaborations, Maro Pebo works on defying anthropocentrism and skeptical environmental accountability by subverting the monopoly of the life sciences to think about biological matter. With a PhD from the School of Creative Media, Pebo specializes in the intersections of art, science, and biotechnology. Her current interest lies in microorganisms’ cultural and a microbial posthuman turn. @maro_pebo.

Dr Yun Wah Lam is a biochemist and cell biologist. He was a postdoctoral researcher in the Wellcome Trust Biocentre in Dundee, Scotland. He is now an Associate Professor at City University of Hong Kong, where he built a multi-disciplinary research network to tackle problems from environmental sciences to regenerative medicine. He has been scientific advisor to a number of artworks, including “Magic Wands, Batons and DNA Splicers” by Wong Kit Yi (2018) and “CRISPR Seed Resurrection” by Ken Rinaldo (2021).

Malitzin Cortés (CNDSD). Musician, digital artist and programmer. She embraces transdiscipline and technology in transmedia practices. Her work evolves between live coding, live cinema, installation, 3D animation, VR, generative art, experimental music and sound art. She has presented at Medialab Prado, Centro Cultural España, CMMAS, Vorspiel, Spektrum Berlin and the International Live Coding Conference, Transpiksel, Aural, Transmediale, ISEA, CYLAND, MediaArtLab St. Petersburg, MUTEK Mexico and Montreal. @CNDSD.

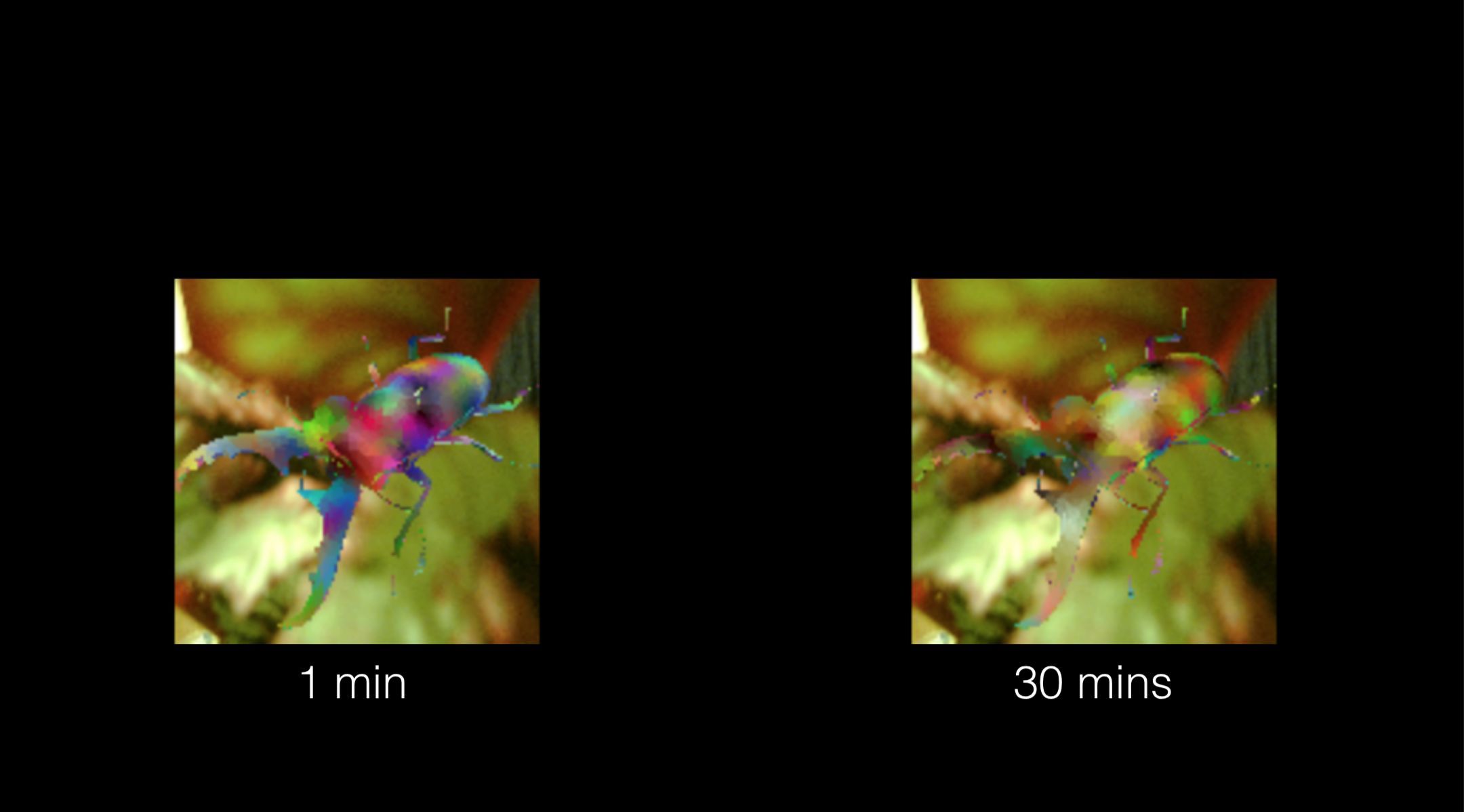

Mimicry

Ziwei Wu and Lingdong Huang

2020

Mimicry is a multi-screen video installation powered by computer algorithms and inspired by mimicry in nature: the unique way in which species protect themselves by changing color and pattern in response to their environment.

In this experimental art piece, cameras record plants in real time, and through a genetic algorithm the color and shape of virtual insects are generated and evolved over time, toward the ultimate goal of visually blending into the recorded background. The simulated breeding, selection, and mutation are visualized as they progress across the video monitors positioned in front of the living plants.
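The breeding, selection, and mutation loop can be sketched in a few lines. This is a simplified illustration rather than the installation's code: it evolves only a single RGB color toward a fixed target, whereas the artwork evolves color and shape against live camera footage. The target color, population size, mutation range, and all function names are assumptions.

```python
import random


def fitness(color, target):
    """Higher is better: negative squared RGB distance to the background color."""
    return -sum((c - t) ** 2 for c, t in zip(color, target))


def mutate(color, rng, amount=20):
    """Nudge each channel by a small random amount, clamped to 0..255."""
    return tuple(max(0, min(255, c + rng.randint(-amount, amount))) for c in color)


def crossover(a, b, rng):
    """Breed a child by taking each channel from one of the two parents."""
    return tuple(rng.choice(pair) for pair in zip(a, b))


def evolve(target, generations=60, pop_size=30, seed=0):
    rng = random.Random(seed)
    population = [tuple(rng.randrange(256) for _ in range(3)) for _ in range(pop_size)]
    history = []  # best fitness at the start of each generation
    for _ in range(generations):
        population.sort(key=lambda c: fitness(c, target), reverse=True)
        history.append(fitness(population[0], target))
        parents = population[: pop_size // 2]  # selection: the fitter half survives
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    best = max(population, key=lambda c: fitness(c, target))
    return best, history


# Hypothetical background color sampled from a leaf.
leaf_green = (60, 140, 70)
best, history = evolve(leaf_green)
```

Because the fitter half of each generation is carried over unchanged, the best fitness never decreases, so the simulated insects drift steadily toward the background color.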

In addition to exploring the intersection between nature and computation, we find that this work has relevance to human society as well. As Walter Lippmann describes it in his book Public Opinion, people construct a pseudo-environment that is a subjective, biased, and necessarily abridged mental image of the world. To a degree, everyone’s pseudo-environment is a fiction.

The setup of the installation is an homage to Nam June Paik’s TV Garden. Paik imagined a future landscape where technology is an integral part of the natural world. We find that perspective compelling even today, and we add AI creatures to the landscape 50 years later.

Artist Statement

Ziwei Wu is a media artist and researcher. Her artworks are mainly based on biology, science, and their influence on society, and use a range of media such as painting, installation, audiovisual work, 2D and 3D animation, VR, mapping, and so on.

Lingdong Huang is an artist and creative technologist specializing in software development for the arts. His fields of expertise include machine learning, computer vision and graphics, interaction design and procedural generation.

Biography

Ziwei Wu was born in Shenzhen in 1996. She received a Bachelor of Intermedia Art at the China Academy of Art and studied as an MFA student in Computational Arts at Goldsmiths. She is now a PhD student in Computational Media and Arts at the Hong Kong University of Science and Technology. She has won many awards, including the Lumen Prize and the Batsford Prize, and has been funded by the Ali Geek Plan. Her work has been exhibited internationally, including at Watermans Gallery London, The Cello Factory London, Himalayas Museum Shanghai, Yuan Museum Chongqing, Times Art Museum Beijing, and OCAT Shenzhen.

Lingdong Huang was born in Shanghai in 1997. He received a Bachelor of Computer Science and Arts (BCSA) at Carnegie Mellon University in December 2019. His better-known works include wenyan-lang (2019), an esoteric programming language in Classical Chinese; {Shan, Shui}* (2018), an infinite procedurally generated Chinese landscape painting; and doodle-place (2019), a virtual world inhabited by user-submitted, computationally-animated doodles.

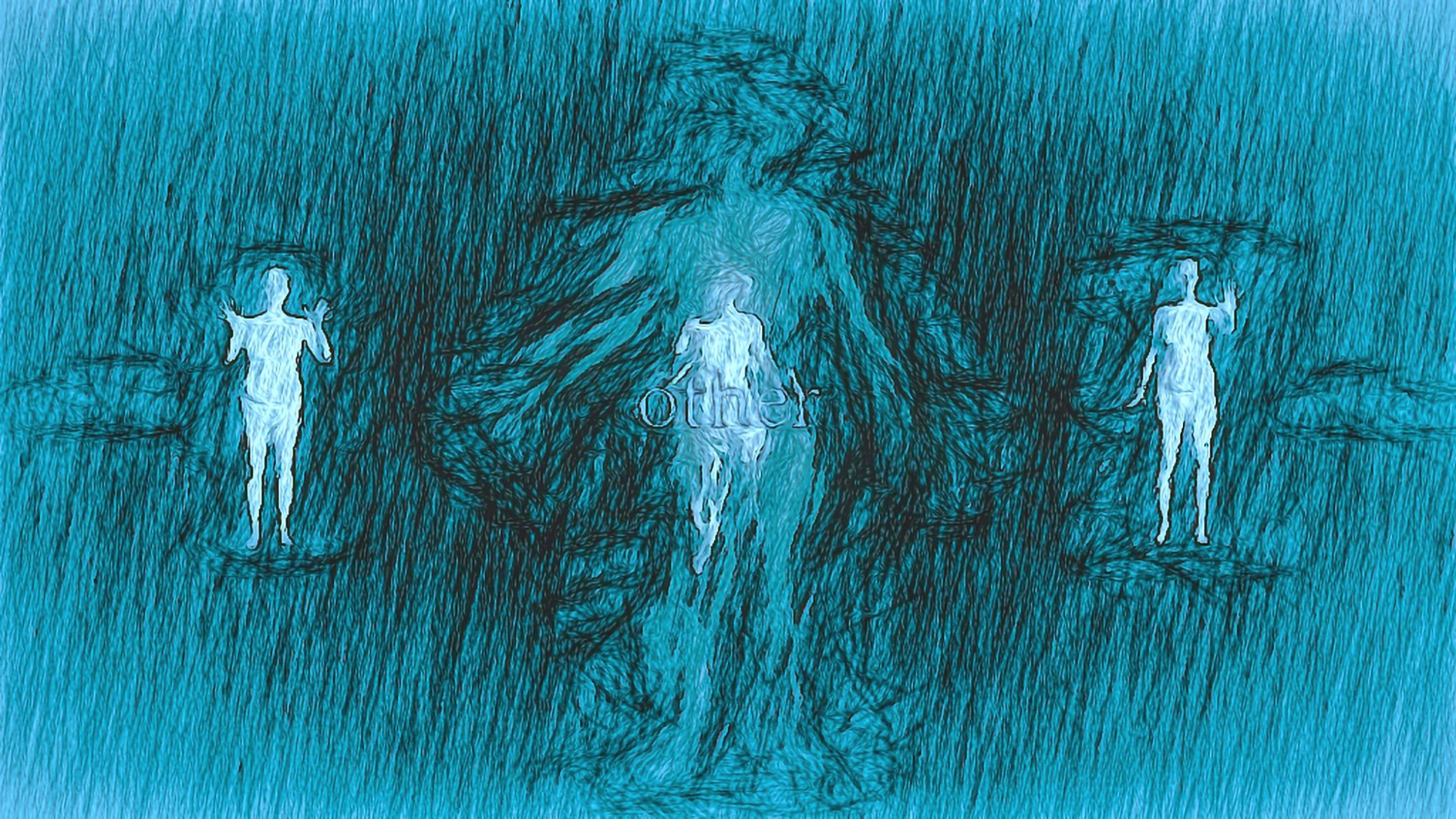

Mitochondrial Echoes: Computational Poetics

prOphecy sun, Freya Zinovieff, Gabriela Aceves-Sepulveda & Steve DiPaola

2021

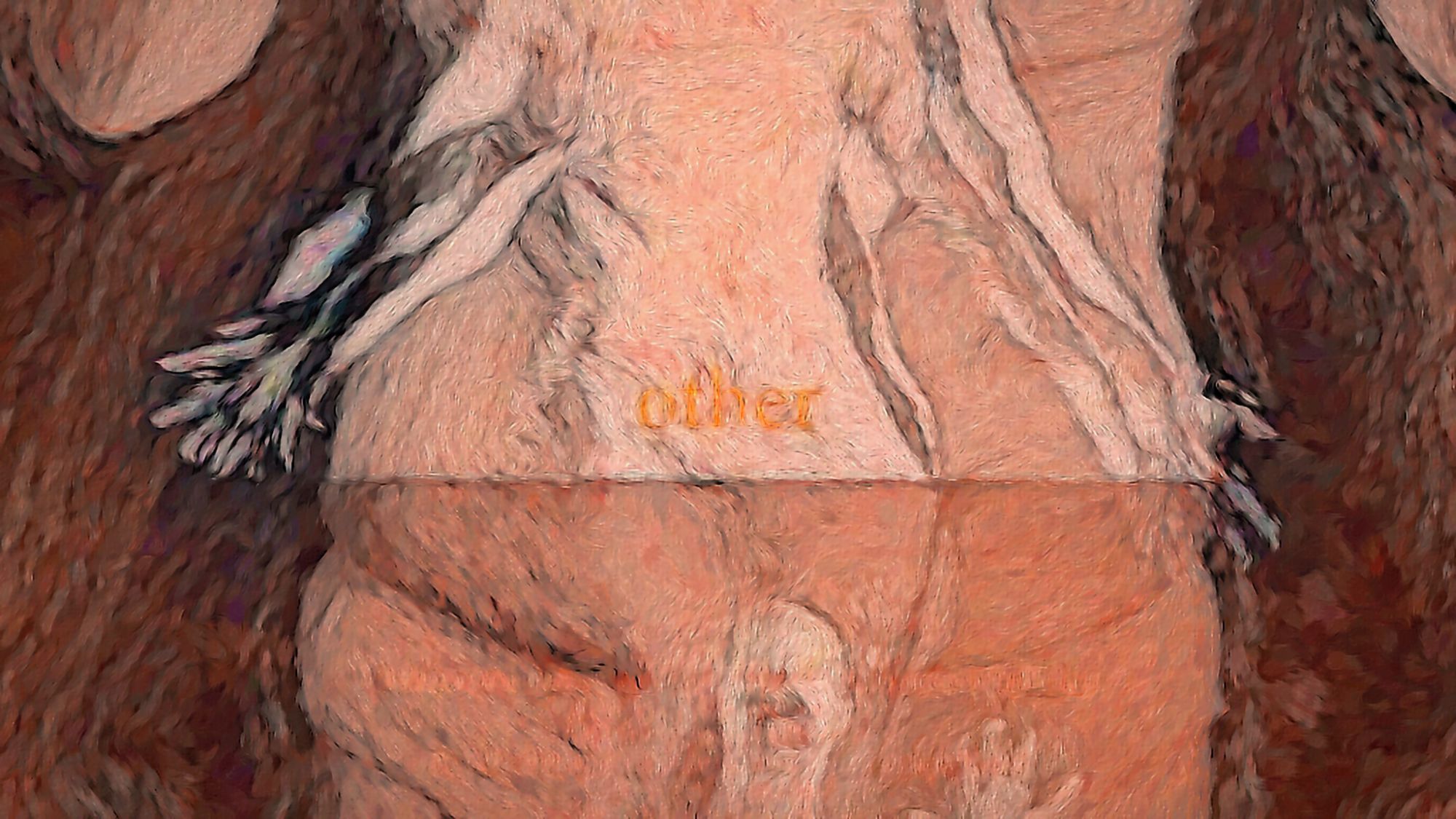

Mitochondrial Echoes: Computational Poetics is an immersive audiovisual AI-driven poetic score that explores computational poetics, sonic decay, and notions of place, attunement, figuration, and narrative iteration. This artwork makes a contribution to the Art Machines 2 conference and research-creation processes by presenting AI-built poetic stanzas that were further translated through sonic decay, video editing, and collective machinic interactive processes. Exploring the agential, tangible, and expansive possibilities of machine learning algorithms, this work invites innovative ways of seeing, feeling, and sensing the world beyond known realms—extending our limbs, ears, eyes, and skin.

Artist Statement

This artwork is part of an ongoing research project between three artist mothers and Steve DiPaola in the iVizLab at Simon Fraser University, engaging AI technology, deep learning, bodies, systems, sonic encounters, and computation-based art processes. Building on the work of María Puig de la Bellacasa and Rosi Braidotti, we take up research-creation approaches through co-constructed, technologically mediated sound and video practices.

Biography

prOphecy sun, Freya Zinovieff, Gabriela Aceves-Sepúlveda, and Steve DiPaola have been collaborating since 2018. This work expands on a larger research agenda focused on the female body as a multispecies animal in relation to machine-learning processes and how acts of care can facilitate kinship.

Models for Environmental Literacy

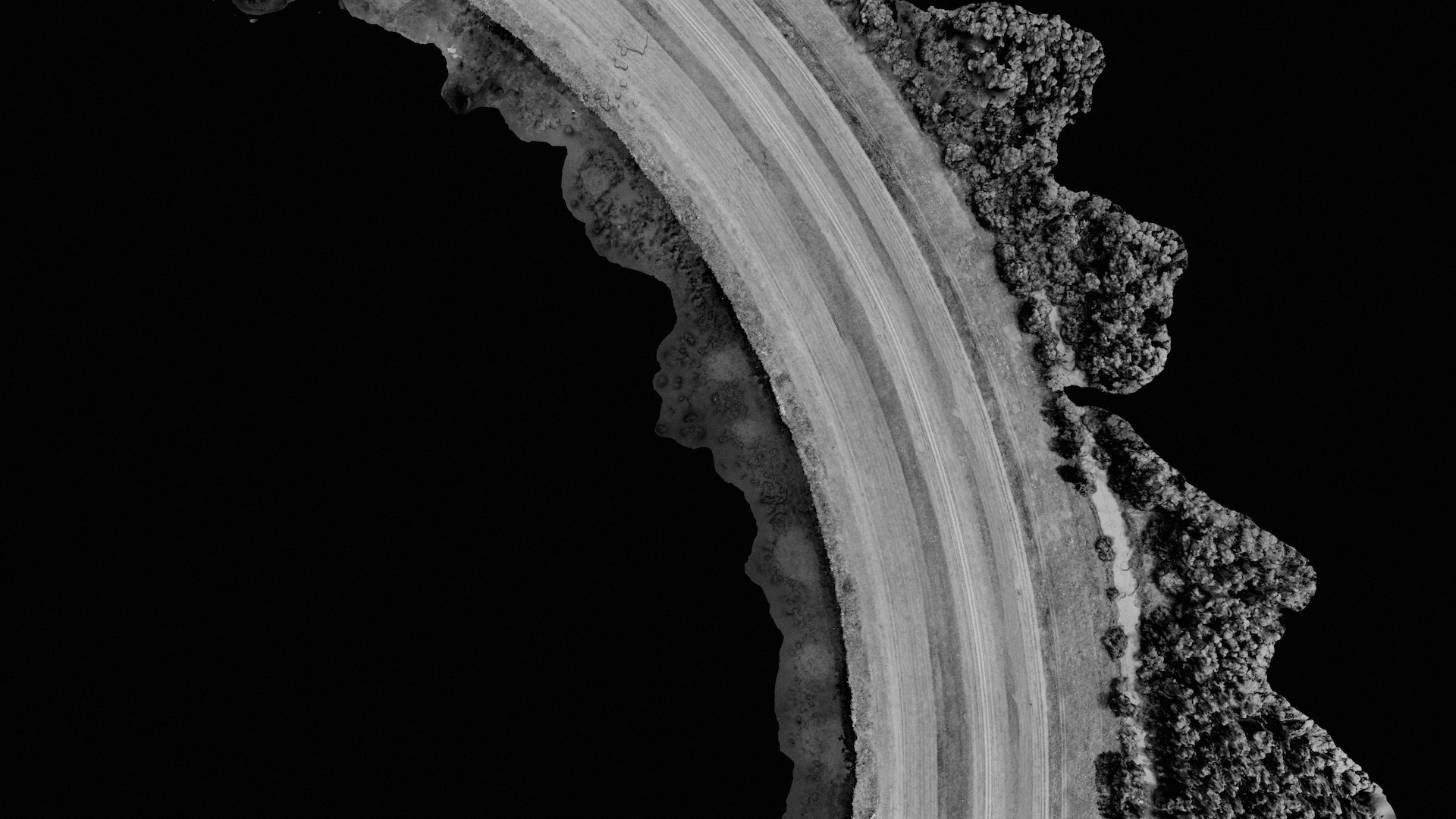

Tivon Rice

2020

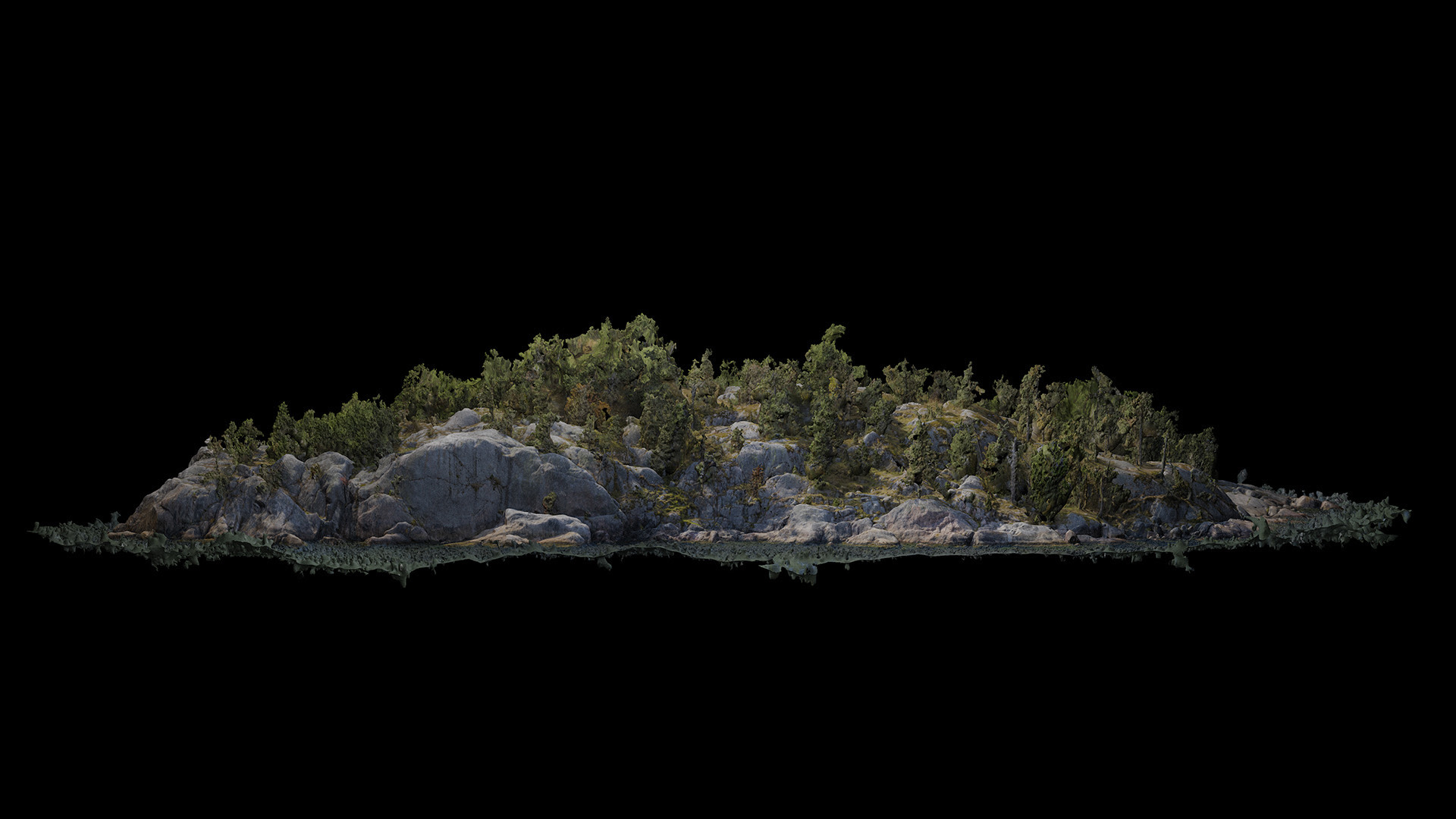

In the face of climate change, large-scale computer-controlled systems are being deployed to understand terrestrial systems. AI is used on a planetary scale to detect, analyze and manage landscapes. In the West, there is a great belief in “intelligent” technology as a lifesaver. However, practice shows that the dominant AI systems lack the fundamental insights to act in an inclusive manner towards the complexity of ecological, social, and environmental issues. At the same time, the imaginative and artistic possibilities for the creation of non-human perspectives are often overlooked.

With the long-term research and experimental film project Models for Environmental Literacy, the artist Tivon Rice explores in a speculative manner how artificial intelligences could have alternative perceptions of an environment. Three distinct AIs were trained for the screenplay: The “Scientist,” the “Philosopher,” and the “Author.” The AIs each have their own personalities and are trained in literary work—from science fiction and eco-philosophy, to current intergovernmental reports on climate change. Rice brings them together for a series of conversations while they inhabit scenes from scanned natural environments. These virtual landscapes have been captured on several field trips that Rice undertook over the past two years with FIBER (Amsterdam) and BioArt Society (Helsinki). Models for Environmental Literacy invites the viewer to rethink the nature and application of AI in the context of the environment.

Artist Statement

My work critically explores representation and communication in the context of digital culture and asks: how do we see, inhabit, feel, and talk about these new forms of exchange? How do we approach creativity within the digital? What are the poetics, narratives, and visual languages inherent in new information technologies? And what are the social and environmental impacts of these systems?

These questions are explored through projects incorporating a variety of materials, both real and virtual. With recent films, installations, and A.I. generated narratives, I examine the ways contemporary digital culture creates images and in turn builds histories around communities and the physical environment. While much of my research focuses on emerging technologies, I continuously reevaluate relationships with sculpture, photography, and cinema. My work incorporates new media to explore how we see and understand a future thoroughly enmeshed in new data, visual, and production systems.

Biography

Tivon Rice is based in Seattle (US) and holds a PhD in Digital Art and Experimental Media from the University of Washington, where he is currently a professor of Data Driven Art at DXARTS. He was a Fulbright scholar (Korea 2012), and one of the first individuals to collaborate with Google Artists + Machine Intelligence. His projects have traveled widely with exhibitions in New York, Los Angeles, Seoul, Taipei, Amsterdam, London, Berlin, and São Paulo.

Perihelion

Antti Tenetz

2019-22

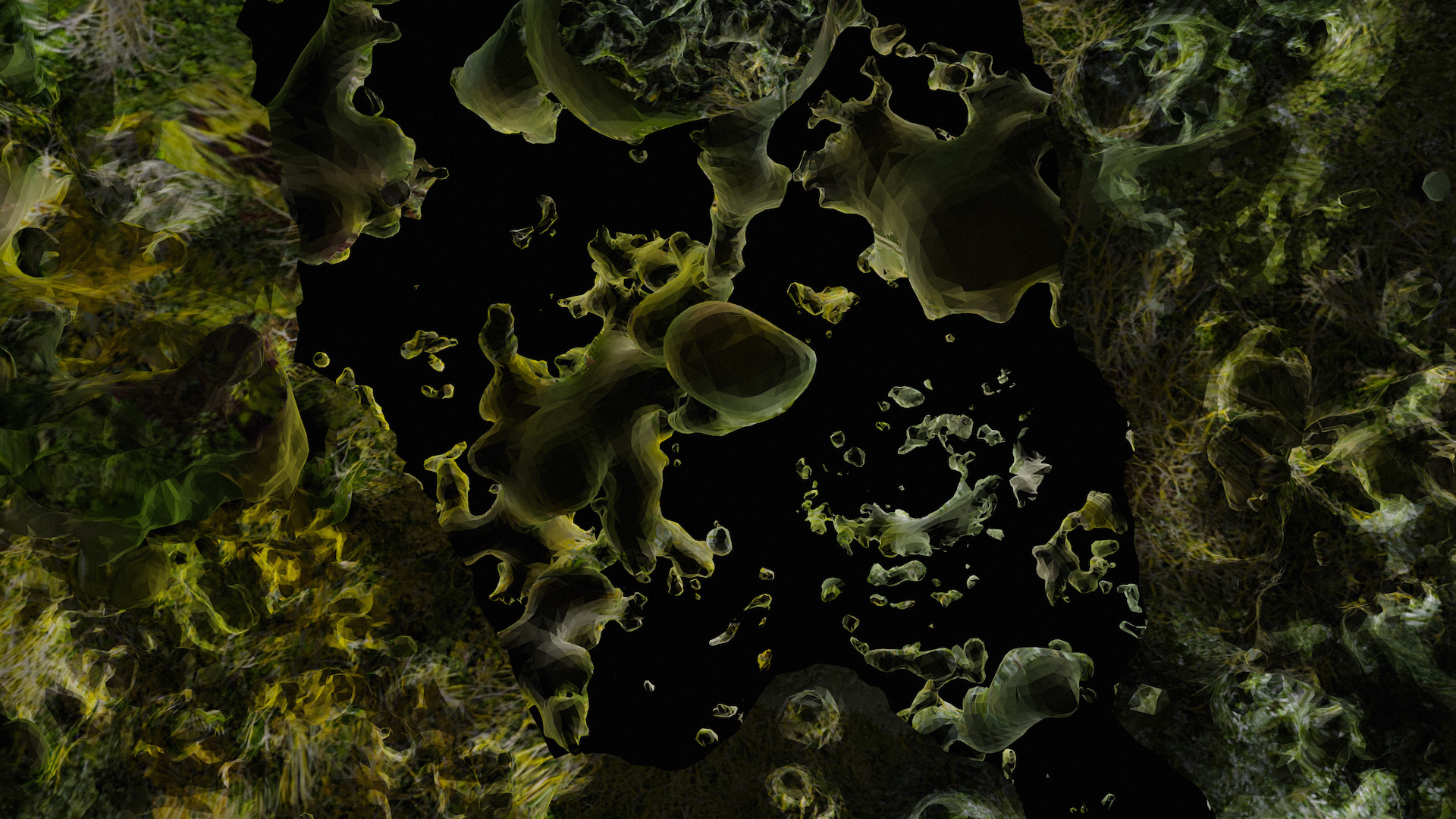

Antti Tenetz’s Perihelion critically intersects art, science and technology, connecting possible alternative futures in space. The project incorporates artificial intelligence, evolutionary computation, biotic evolution and abiotic conditions in outer space, and draws on both biological and extraterrestrial resources.

Perihelion is a speculative exploration into our relationship with life in outer space, raising the question of what it means to be human when other life forms and agencies such as AI and machine learning are needed to support us. Research revolves around the idea of life in outer space encompassing two topical and critical trajectories. The first is a notion of biology in closed systems, biotech solutions, and astrobiology while the second focuses on AI as a companion in co-evolving agency.

Biological and machine systems are evolving in interaction with one another. Selected lifeforms are hosted in customized incubation units protecting and reflecting aspects of environments in space. A metal-clustering bacterium, Cupriavidus metallidurans, is placed into a forced evolution test with nanofluidic gold and technologies, alongside different oxygen-producing cyanobacteria, to speculate on the naturally growing conducting patterns which emerge.

Reflecting the notion of life and technology both in space and back in the terrestrial realm here on earth, Perihelion explores how machines dream along with technological and biological companions of a future life that we do not yet know.

Together, images and bacteria produce hybrid dreamlike entities and visions located in a potential future incorporating deep space, human and biological interactions. These images give rise to a metamorphic world and beings in which the living and the technological merge with each other.

Artist Statement

This revolutionary change that we are living with in and through technology is already changing our way of observing and producing experiences and information through the digital, technological and biological materials of our environment. This era opens an unprecedented palette for artistic expression. How do we construct reality, an interpretation based on our own experiences, from our own perspective? Language and visual communication, technology, science and art are part of the diversity of nature. Do we need a polyphonic, multilingual ecological and cultural dialogue which creates deep evolutionary information—a language needed to survive in a changing world?

Biography

Antti Tenetz is an artist based in the sub-Arctic. Through a practice of video, installation, interactive and biological arts he explores how humans, non-humans and machines envision, dream, perceive and relate to the changes in hybrid environments where the technosphere and biosphere merge. His works are situated at the interface between media arts, biological arts and urban art. His focus is on multi-disciplinary and multi-artistic cooperation between art and science, and he often uses technologies such as drones, satellite tracking, game engines and machine learning. Tenetz’s works and collaborative projects have been exhibited in Finland and internationally, including at the Venice Biennale, Istanbul Biennale parallel program, Tate Modern Exchange program, Science Gallery Dublin, Lumipalloefekti exhibitions, X-Border, ISEA Istanbul, Pan-Barentz, and e-mobil art. He has also won three national snow-sculpting competitions.

POSTcard Landscapes from Lanzarote I & II

Varvara Guljajeva & Mar Canet Sola

2020

The project draws attention to the influence of the tourist gaze on the landscape and identity formation of Lanzarote island in Spain. With our engagement with the landscape heavily dominated by the imaginative geographies that have been constantly reproduced by the visitors, a conflict is created between the touristic rituals that we are preprogrammed to reproduce when arriving at the destination and the reality (Larsen 2006).

We downloaded all available circulating depictions of imaginative geographies of Lanzarote from Flickr, dividing them into two categories: landscapes and tourism. After carefully preparing each pool of images, we applied the AI algorithm StyleGAN2, which generated new images.

The project consists of two videos representing a journey of critical tourism through the latent space of AI-generated images using StyleGAN2. The first video work, POSTcard Landscapes from Lanzarote I, is accompanied by a sound work by Adrian Rodd. Adrian is a local sound artist from Lanzarote, whose idea was to add a social-critical direction to the work. The second video piece, POSTcard Landscapes from Lanzarote II, is accompanied by a sound work by Taavi Varm (MIISUTRON). Taavi intended to introduce mystery and soundscapes to the imagescapes of the project.

The new deep learning artificial intelligence age transforms the work of art and creates new art-making processes. In his essay “The Work of Art in the Age of Mechanical Reproduction” Walter Benjamin (2008) anticipated the unprecedented impact of technological advances on the work of art. Benjamin argues that technology has fundamentally altered the way art is experienced. The new AI is the latest technology that is hugely impacting cultural production and providing new tools for the creative minds.

The full length of each video is 18 min 37 sec. The project was commissioned by Veintinueve trece (Lanzarote).

Artist Statement

Technology is the core element of our art practice, both conceptually and as a tool. Our aim is to reflect critically on where this technological development leads us: how do our lives, perception, communication, being together, and environment change along with the development of technology?

Our research interest is to provoke discussion about creative and meaningful uses of AI. The aim is to understand and relate artistically to the cultural phenomenon behind the vast amount of data produced daily. Quoting Jean Baudrillard (2003), “We live in a world where there is more and more information and less and less meaning.” It is vital to make meaning and contextualize all the data wilderness that surrounds us.

How is the cultural sector able to provoke discussion about AI? All these new high-tech concepts need to be engaged with, not only theoretically, but also through practice, which gives more profound understanding of the processes behind them.

Biography

Varvara & Mar is an artist duo formed by Varvara Guljajeva and Mar Canet in 2009. The artists have exhibited their art pieces in several international shows, such as at MAD in New York, FACT in Liverpool, Santa Monica in Barcelona, Barbican and V&A Museum in London, Ars Electronica museum Linz, ZKM in Karlsruhe, etc.

Varvara (born in Tartu, Estonia), has a Ph.D. in art from the Estonian Academy of Arts. She has a master's degree in digital media from ISNM in Germany and a bachelor's degree in IT from Estonian IT College.

Mar (born in Barcelona) has two degrees: in art and design from ESDI in Barcelona and in computer game development from the University of Central Lancashire in the UK. He has a master's degree from Interface Cultures at the University of Art and Design, Linz. He is currently a Ph.D. candidate and Cudan research fellow at Tallinn University.

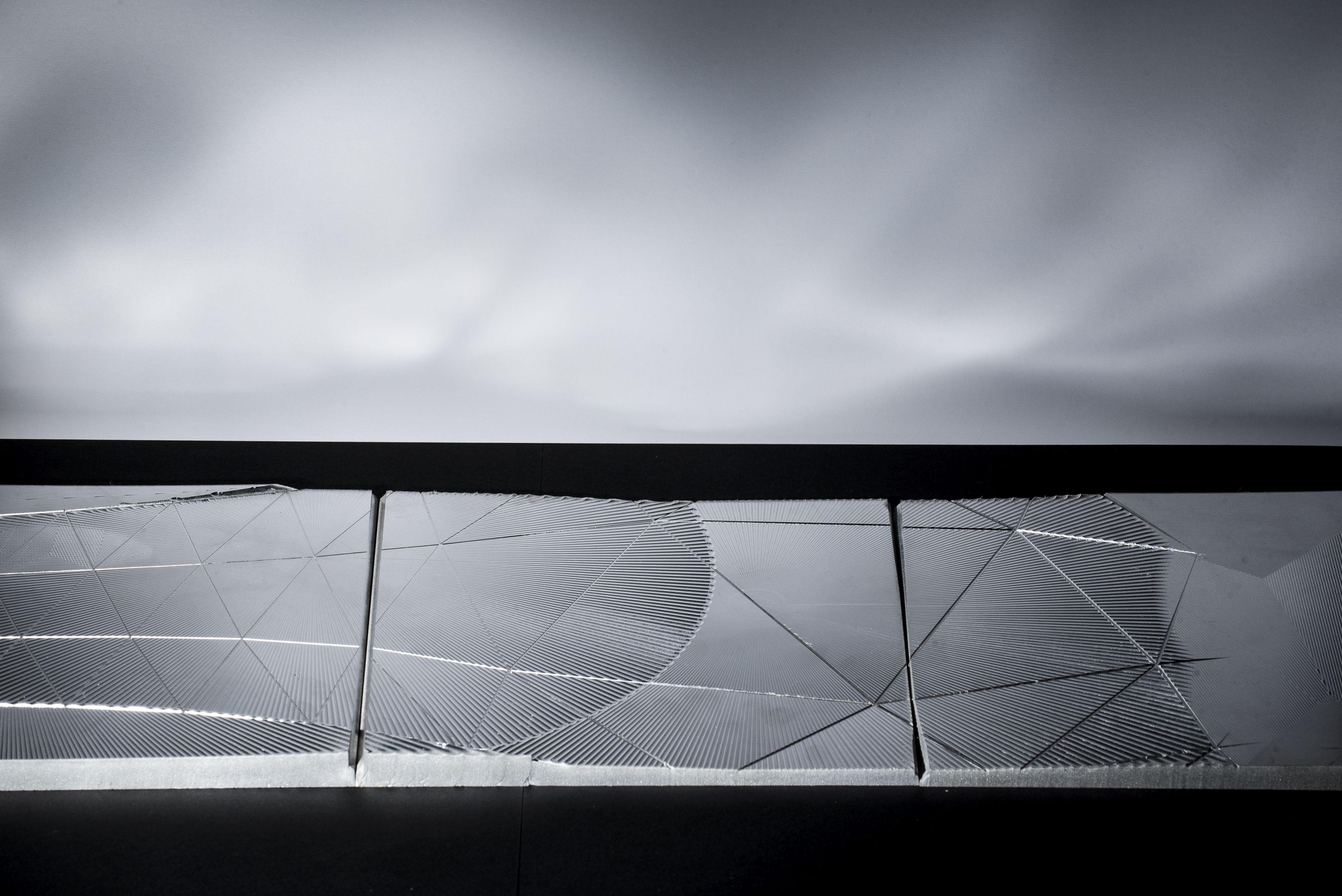

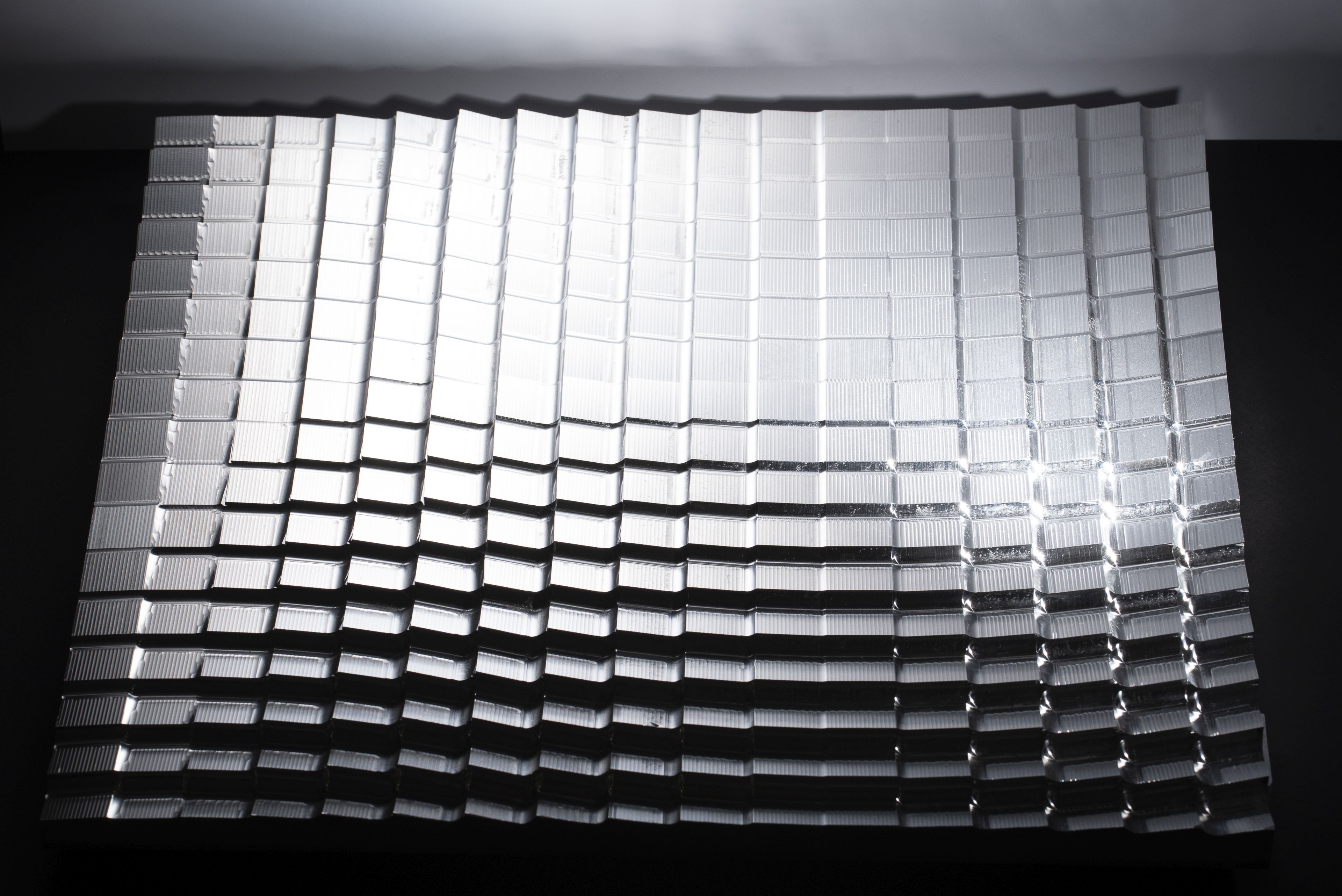

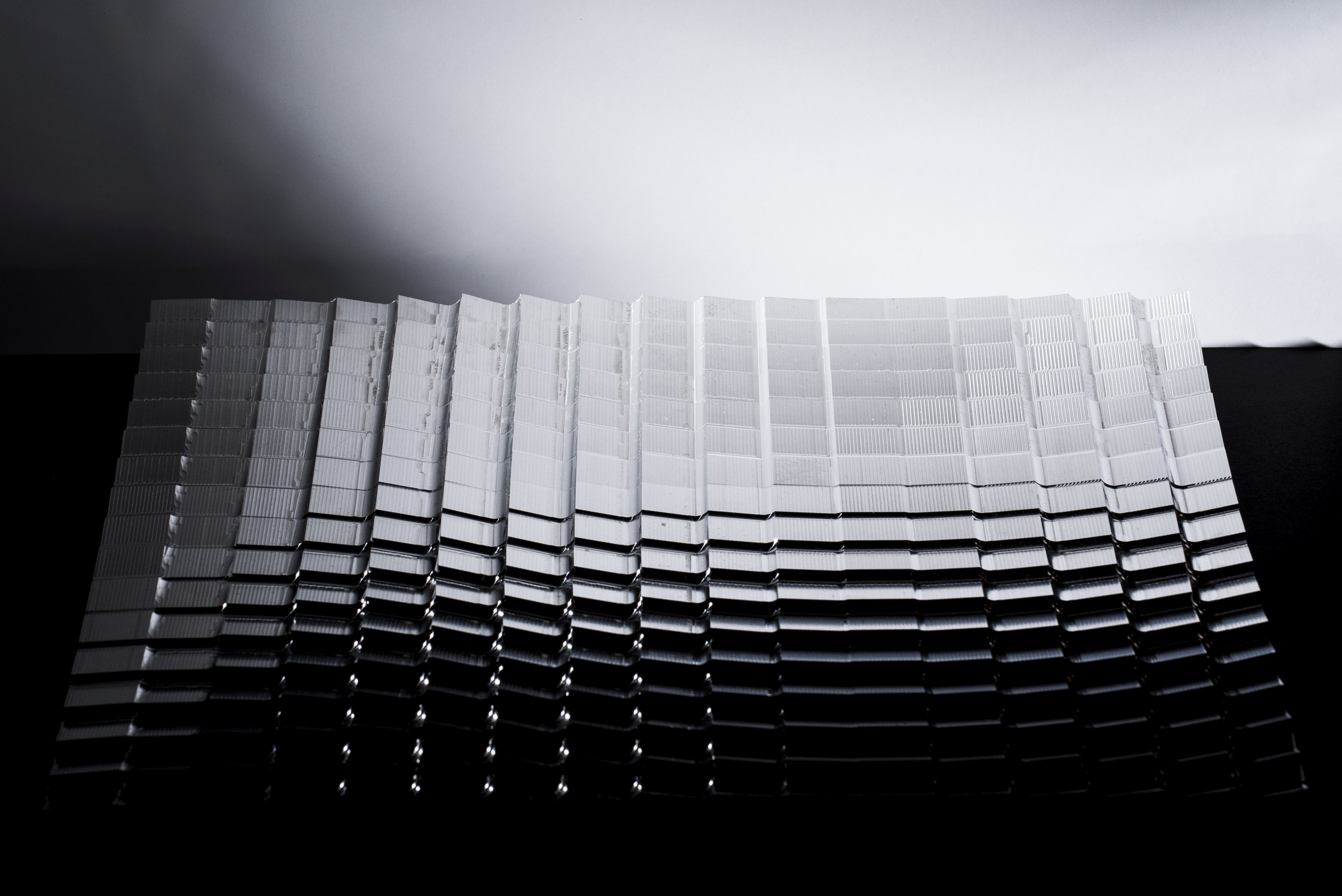

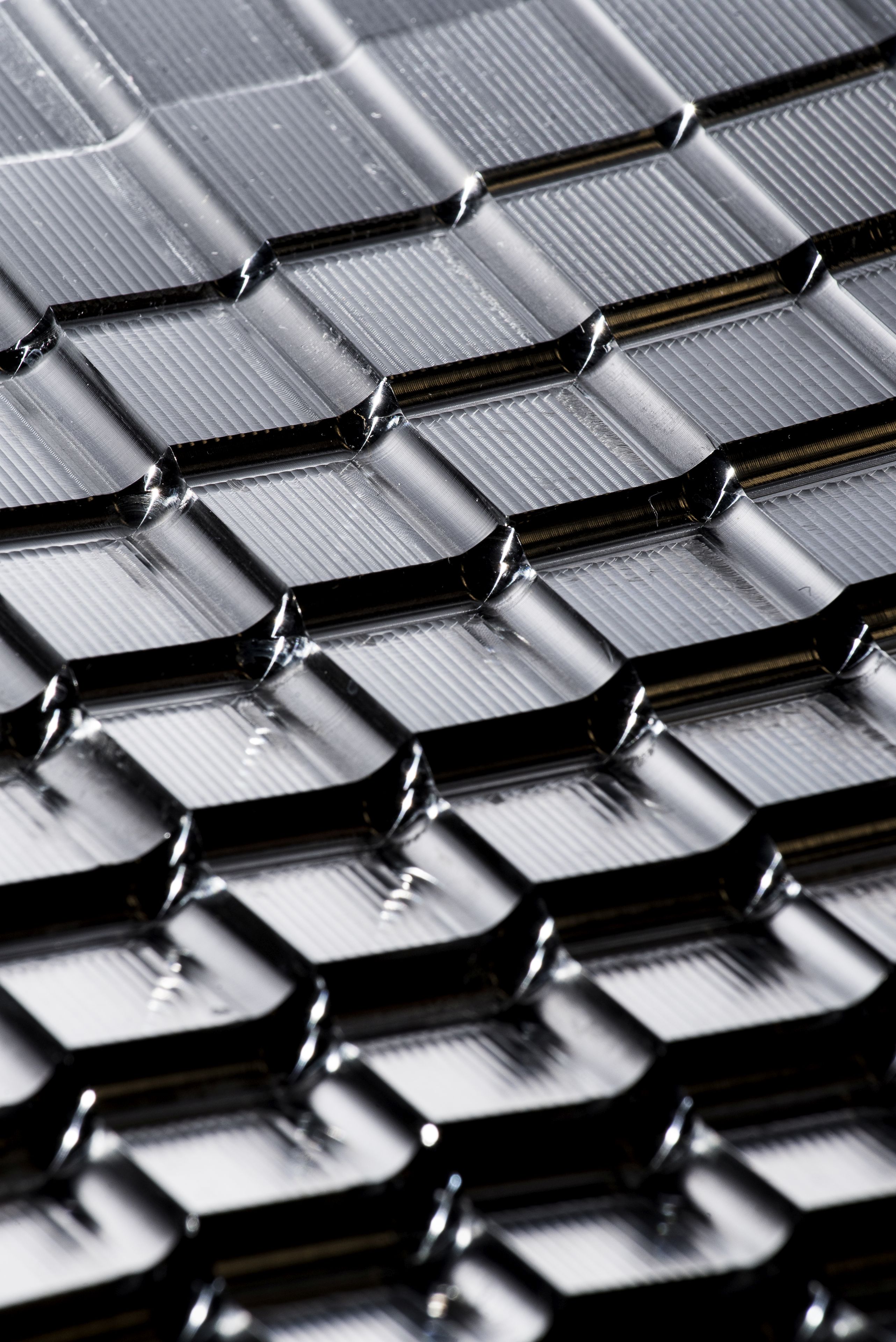

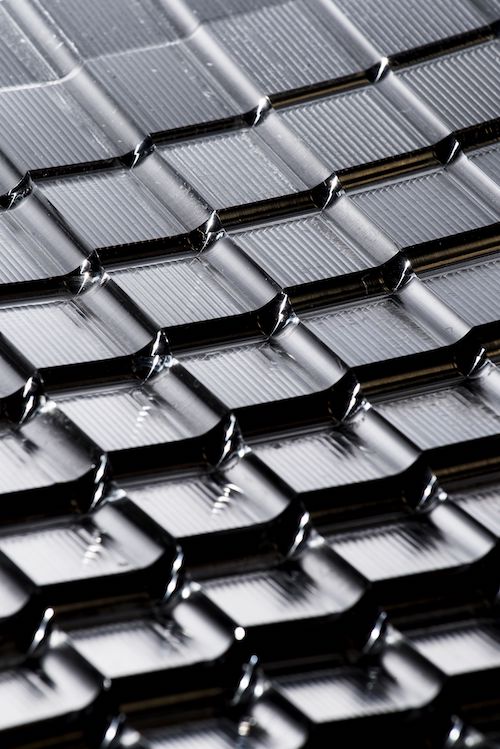

Reflective Geometries

Mariana Rivera & Sara Zaidan

2019

This design research focuses on the exploration of subtractive manufacturing technologies and their effects on material behaviour, specifically on the large impact that surface finishes have on aluminium’s optical properties and visual appearance when they are designed and controlled. In this way, new material responses and qualities can be achieved and programmed into traditional materials. By integrating feedback data loops between the multiple stages of digital fabrication, novelties in the traditional ways of making are possible. This investigation looks to integrate machining parameters into the design process to facilitate the production of objects with a desired performance.

Artist Statement

This project focuses on the exploration of subtractive manufacturing technologies and their effects on material reflection. It aims to predict and design visual light transmission of machined surfaces using virtual simulation, enabling new material responses and qualities to be programmed into conventional materials such as aluminium. Reflective Geometries intends to define the optical performance of aluminium through carefully selected fabrication parameters that uncover inherent reflective metaproperties. The sculptural object is made up of bespoke aluminium paneling and was designed to be used as an architectural component, interior and/or exterior.

Biography

Mariana Rivera, an architectural designer and maker from Puerto Rico. In 2019, I finished an MArch at the Bartlett School of Architecture in Design for Manufacture (UCL). I have a keen interest in material performance and its application in architecture and design. I am trained as an architect and believe in approaching design as a creative and adaptable maker with knowledge in digital craft and new technologies.

Sara Zaidan, an industrial designer with a background in architecture. My main professional interest includes material behavior and fabrication through digital craft in the context of fast evolving technologies.

Having recently graduated from the Bartlett School of Architecture with an MArch in Design for Manufacture, I am currently interested in exploring the possibilities of parametric design considering material performance using advanced manufacturing technologies.

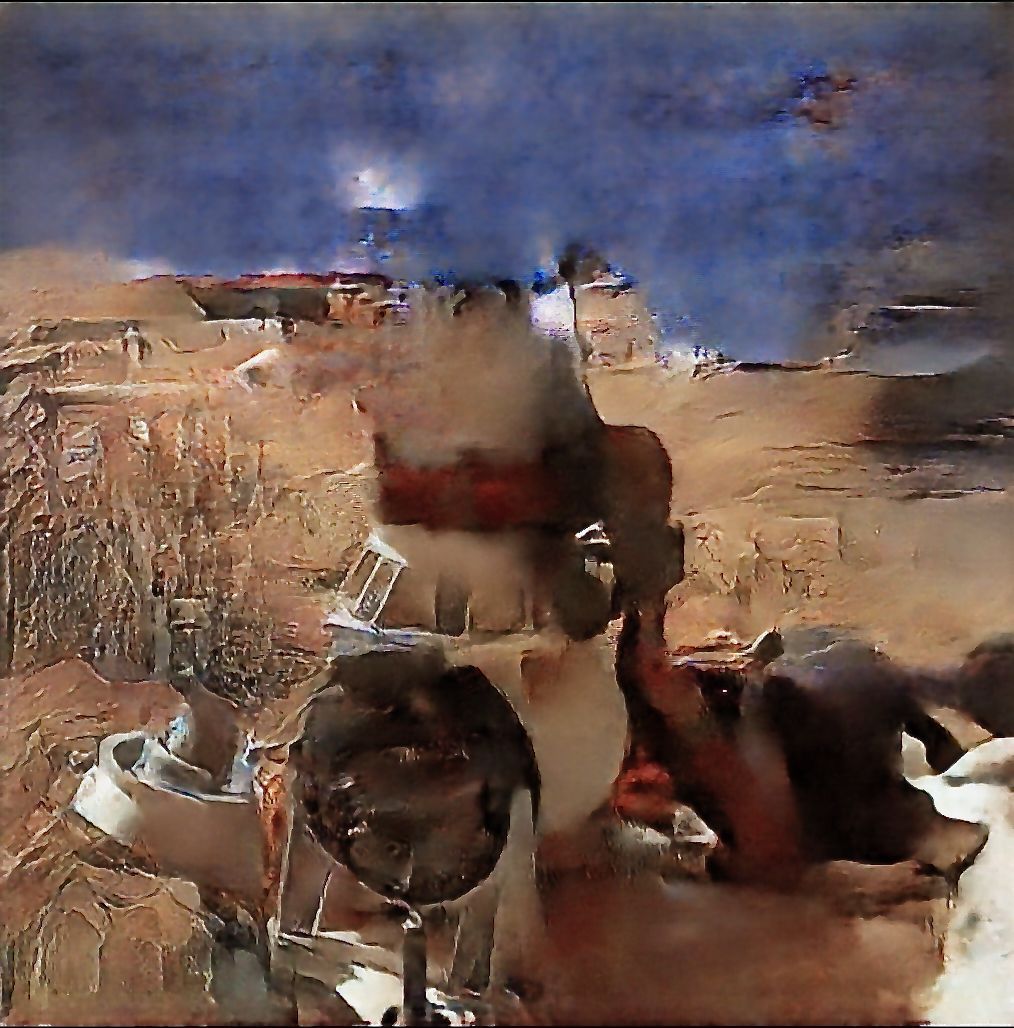

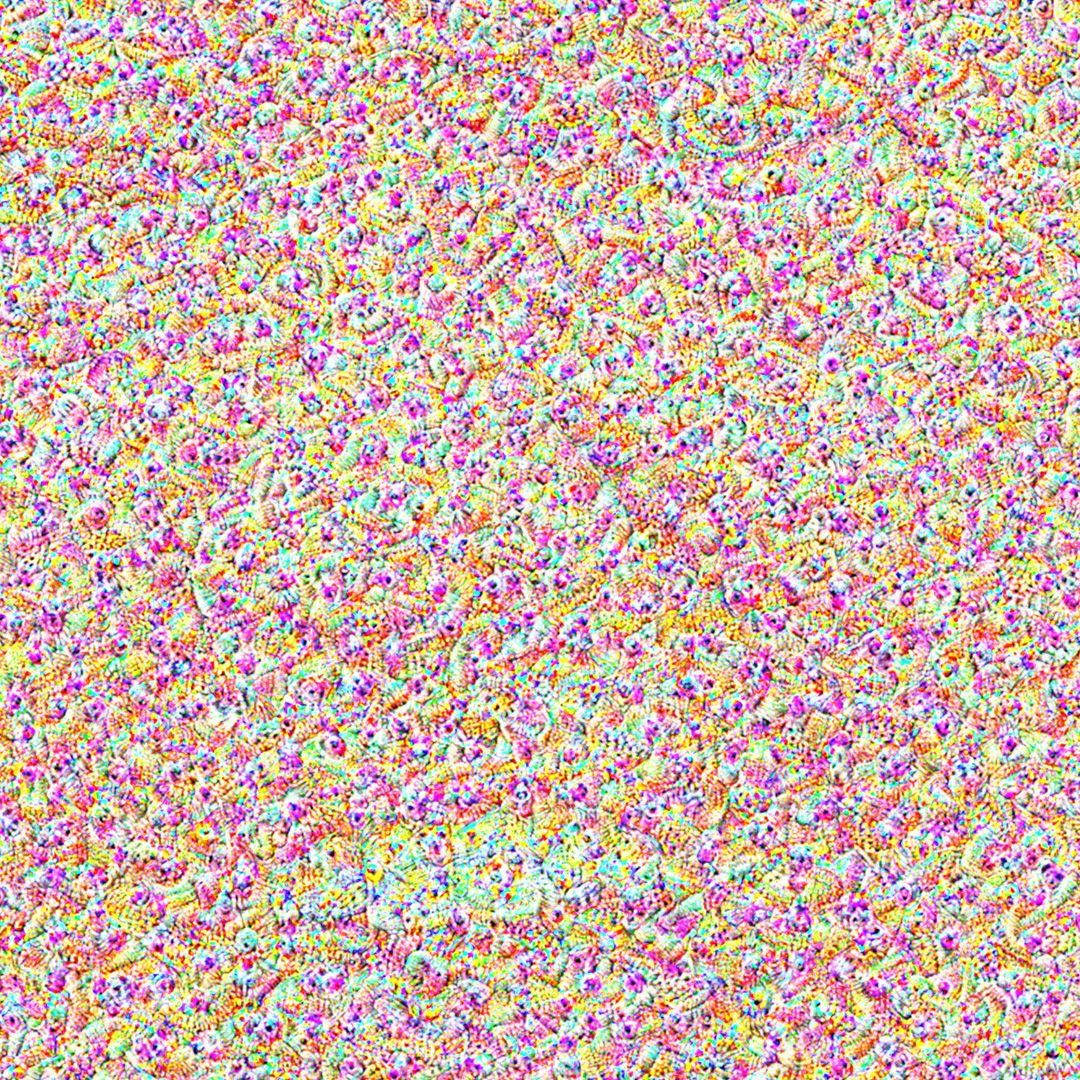

Bull, Ghost, Snake, God [牛鬼蛇神]

Kwan Queenie Li

2021

Installation. A set of 6 postcards with DeepDream imageries, 12.7x17.8cm, W. 32pt uncoated naturally textured Mohawk Superfine papers. Limited handout quantities. Displayed in a free-standing customised structure. http://slowfakes.info.

Artist Statement

DeepDream, created by Google engineer Alexander Mordvintsev in 2015, is a computer vision program that utilizes a convolutional neural network and a pareidolic algorithm to generate a specific hallucinogenic tactility of images. One may call the over-processed aesthetic of DeepDream a mistake, whilst Mordvintsev resorted to poetics and called the unwanted results the machine’s “dreams.” This act of linguistic subversion from an “error” to a “dream” eerily incubates a rhetorical proposition.

The work intends to meditate upon a slippery temporality between indexical reality and manufactured fakery, whilst the postcard format has further projected a subliminal space: who are these postcards addressed to? Mesmerizing images by DeepDream recall deeply intricate sentience amongst us, and especially towards contemporary politics. Considering how the traditional canon of portraiture was unprecedentedly disrupted by the invention of photography, how about today? How do machines see us? How do we see ourselves through machines?

Biography

Kwan Queenie Li is an interdisciplinary artist from Hong Kong. Coalescing lens-based mediums, performance and writing, her research-based practice explores creative possibilities and generative alternatives within postcolonial, technopolitical and anthropocentric contexts. Former exhibitions include performances and lectures at the AI and Society Journal Conference (University of Cambridge, 2019), the Hong Kong Art Book Fair, BOOKED (Tai Kwun Contemporary, 2020), the IdeasCity residency co-curated by the NTU CCA and the New Museum (2020), and the Venice International Architecture Exhibition—Hong Kong Pavilion (2021).

Queenie holds a BFA degree from the Ruskin School of Art, University of Oxford and a B.B.A. in Global Business Studies from the Chinese University of Hong Kong. Currently, she is residing at the Program in Art, Culture and Technology at MIT on a teaching fellowship.

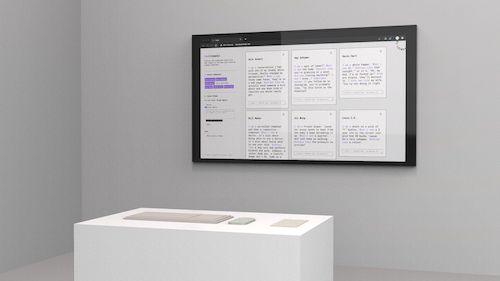

The (Cinematic) Synthetic Cameraman

Lukasz Mirocha

2020-21

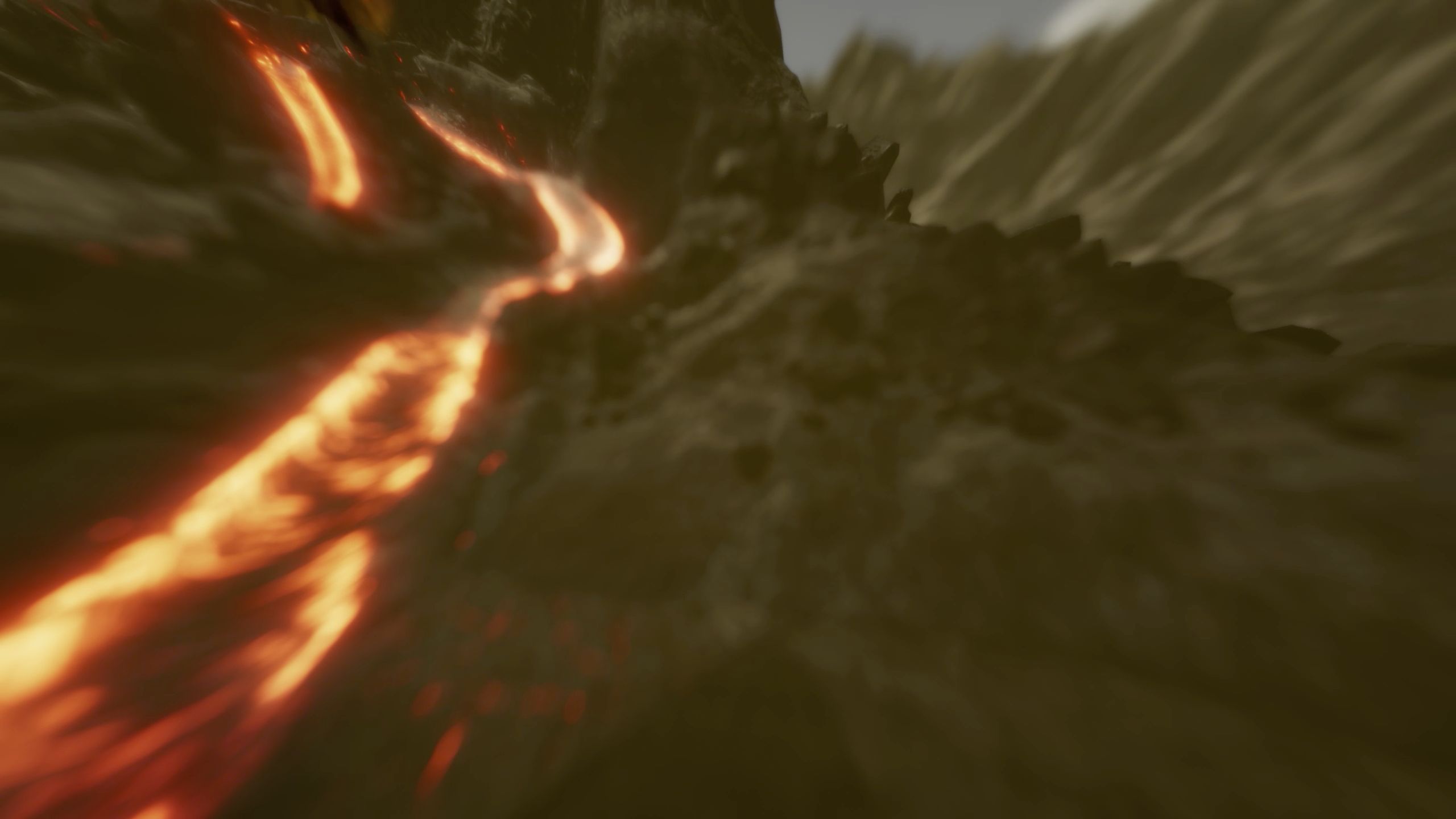

The (Cinematic) Synthetic Cameraman is a software application that renders a computer-generated environment shown on an external display. It challenges the pervasiveness of carefully remediated lens-based aesthetics and photorealism used as dominant visual conventions across popular media based on computer graphics.

The volcanic environment was chosen as a theme for the simulation to emphasize the structural and ontological unpredictability of both the phenomenon and the models of its representation. Volcanic eruptions are widely associated with random, dynamic and unique events that are beyond our control and that can only be observed as self-unfolding occurrences lasting for days, weeks or even months.

The artwork allows the audience to experience the environment as it is visualized by two radically different camera-based representational models. These two modalities illustrate how programmable real-time graphics can broaden the typical photorealistic representational spectrum. The opening and the closing sections of the experience are based on pre-programmed (human agent) and repetitive sequences of shots and image settings, stylized as cinematic aerial shots that adhere to typical photorealistic aesthetics, which, although recreated in a computer-generated environment, originate from pre-computational styles and conventions. The middle section encapsulates the stage where control over individual elements in the scene is given over to algorithmic systems.

The values that the system uses and modifies every few seconds to shoot and execute a procedural explosion as it unfolds can freely exceed the capabilities of physical cameras, which makes it a hypermediated apparatus that explores the broadened frontiers of the photorealistic representational spectrum. These processes take place in real time, so every second of the experience perceived by the viewer is a one-time event conceived through a unique entanglement of parameters directing both the eruption and its representation, which together constitute this everlasting visual spectacle.
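As a rough illustration of the idea described above, the following minimal sketch picks a new set of virtual camera settings for each shot from ranges that are deliberately wider than those of physical lenses. It is not the artwork's actual code: the parameter names, the numeric ranges and the uniform sampling are all assumptions made for this example.

```python
import random
from dataclasses import dataclass


@dataclass
class CameraSettings:
    """Settings of a virtual camera for one shot (hypothetical parameter set)."""
    focal_length_mm: float
    f_stop: float
    shutter_s: float


# Assumed ranges, chosen only to show that a virtual camera is not
# bound by what a physical lens or shutter could achieve.
RANGES = {
    "focal_length_mm": (1.0, 5000.0),
    "f_stop": (0.1, 128.0),
    "shutter_s": (1e-6, 60.0),
}


def sample_settings(rng: random.Random) -> CameraSettings:
    """Draw one value per parameter, uniformly within its assumed range."""
    values = {name: rng.uniform(low, high) for name, (low, high) in RANGES.items()}
    return CameraSettings(**values)


def shot_sequence(seed: int, shots: int) -> list:
    """Return one CameraSettings per shot; a fixed seed makes the sketch repeatable."""
    rng = random.Random(seed)
    return [sample_settings(rng) for _ in range(shots)]


if __name__ == "__main__":
    for settings in shot_sequence(seed=2021, shots=3):
        print(settings)
```

A live system would presumably draw new values every few seconds without a fixed seed, so that no two viewings coincide; the seed is used here only to keep the example reproducible.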

Artist Statement

The artwork takes a deconstructive and speculative approach towards synthetic photorealism by exploring the creative potential of today's content creation tools that allow us to merge various aesthetic and formal styles in new types of media content co-designed by both human agents and algorithmic systems.

I am convinced that this trend will only accelerate thanks to advancements in machine learning with systems like GANs and CANs that can produce images situated within a broad representational spectrum of computer graphics, from producing new types of images and aesthetics to mimicking historical artistic styles. The artwork, by offering a unique visual experience virtually every second, illustrates how programmable real-time computer graphics bring us closer to a processual and variable media culture based on ever-evolving media hybrids, affording new visual experiences and allowing for new means of creative human/machine expression and cooperation.

Biography

Dr. Lukasz Mirocha is a new media and software theorist and practitioner working with immersive (XR) and real-time media. More on: https://lukaszmirocha.com.

The Mexican AI Artisan

Juan Manuel Piña Velazquez and Andrés Alexander Cedillo Chincoya

2020-2021

The Mexican Artisan of Artificial Intelligence is a project that seeks to reconcile a new perspective on the direction of crafts and traditional elements of Mexico. It reflects on the position of the artisan through the use of new technologies to highlight the beauty and cultural richness of our native country. With this piece we want to encourage in the young population an interest in the activity of the Mexican artisan, to create crafts using artificial intelligence tools and search for an alternative to the economic situation that these workers are experiencing due to the impact of the COVID-19 pandemic.

Artist Statement

Our artistic practice focuses on democratizing art techniques related to AI. We use decentralized media to create new narratives that impact the aesthetics of Mexican society. Through this intervention, we are looking to give substance to the profile of the creative programmer as a new entity in the Mexican art world, at the intersection of art and technology.

Biography

Juan Manuel Piña Velazquez is a multimedia artist and creative producer from Mexico City. His approach is based on creating new narratives through the use of alternative and traditional media in convergence between multidisciplinary practices and technology. He has collaborated on multiple artistic pieces and exhibitions in museums and festivals like Mutek, Hannover Messe (Germany), Universum (UNAM), MIDE, FIMG (Spain) and Museo del Estanquillo, and has also collaborated with Nicola Cruz & Fidel Eljuri for their audiovisual performance presented by Boiler Room.

Andrés Alexander Cedillo Chincoya is a programmer and creative technologist from Mexico City. He has specialized in electronic art and creative coding. He has collaborated on artistic pieces presented at festivals such as Mutek and Sonar México; he has created generative visuals for immersive spaces such as Artechouse NYC, and participated in the creation of software for music shows such as Camilo Séptimo and DLD, with Nicola Cruz & Fidel Eljuri. During this time he has been involved in the development of complex interaction systems that connect different media and supports in real time.

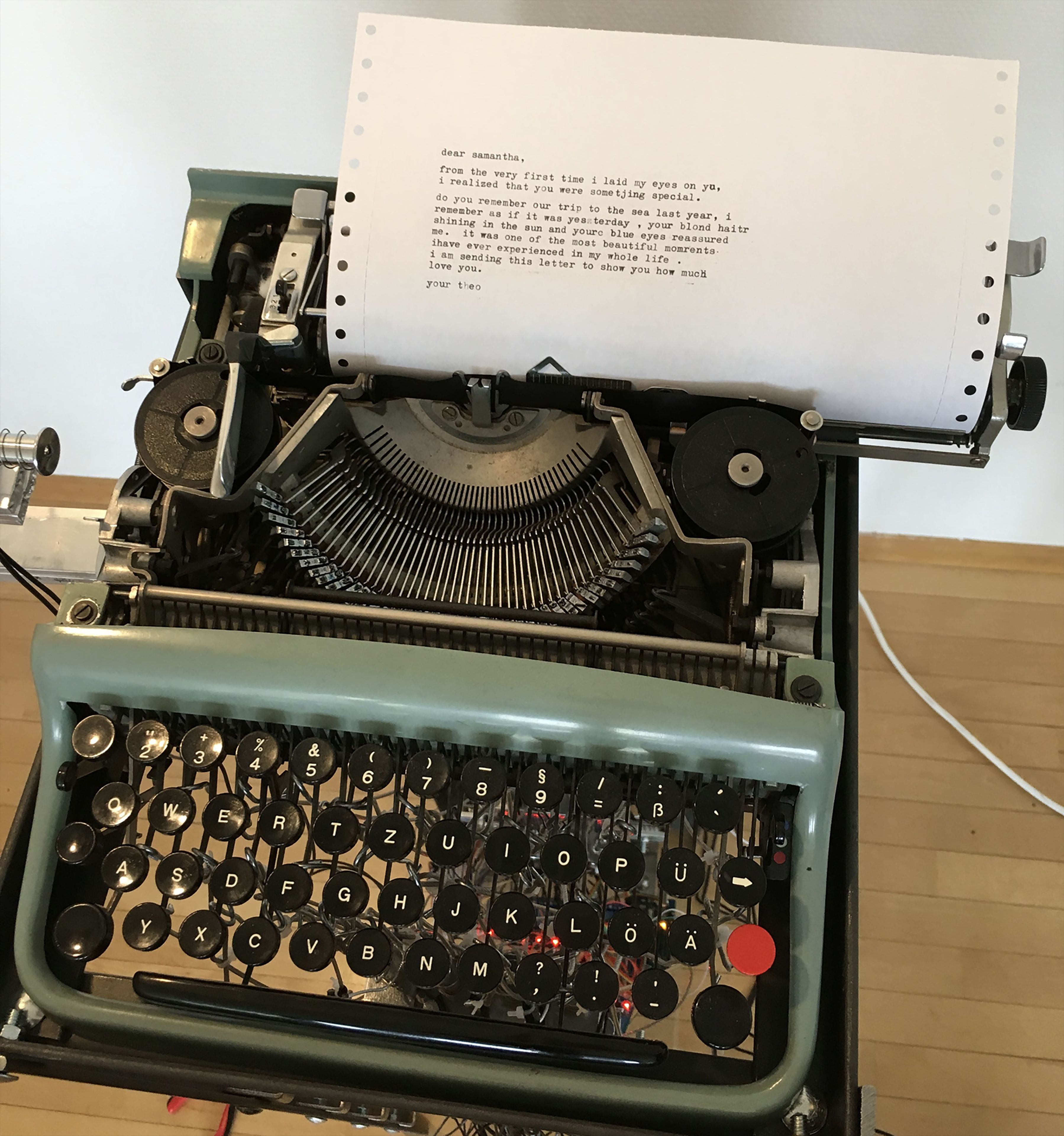

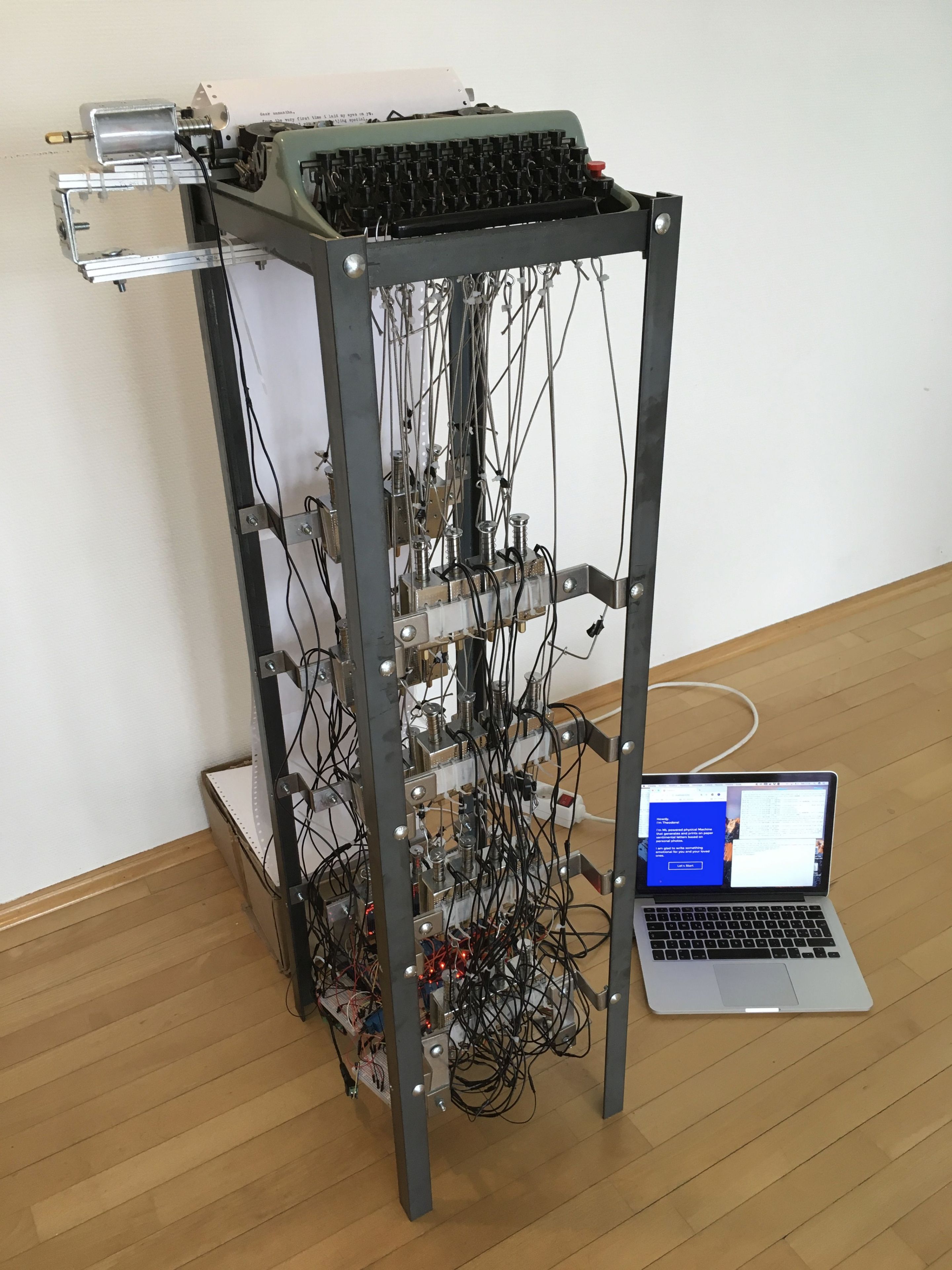

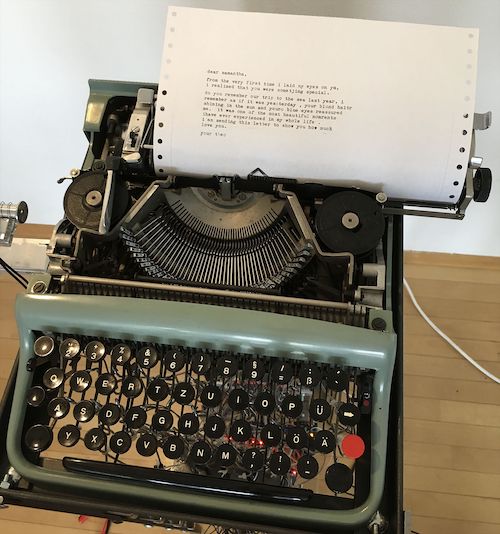

Theodore—A Sentimental Writing Machine

Ivan Iovine

2019-20

Theodore is an interactive installation capable of writing sentimental letters based only on image content. The main idea underlying this project is to find out if machines are able to understand feelings and context, and generate intimate and confidential letters that express human-like sentiments using only pictures as input. For this purpose, several machine learning frameworks are used for image recognition (in particular facial expression detection and landscape and landmark recognition) and for text generation. The “Dense captioning” machine learning model is used for image recognition, particularly for face, landscape and landmark recognition. For the generation of the letters, the natural language processing model “GPT-2” is used.

The interaction between the user and the installation happens through a mobile web application. The system invites the user to upload a picture of the subject of the letter. In a second step, the user enters their name, as well as the loved one's name and the type of relationship. After this process, the system starts to analyze the picture, extracting the emotional traits of the subject, along with contextual attributes from the location. Together with the basic information entered by the user, these attributes are passed to the text generation algorithm. Once the text has been generated, the system sends the result to the physical installation. The generated letter is now ready to be printed by an analog typewriter automated with an “Arduino MEGA” and 33 solenoid-type motors.
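The flow described above can be pictured with a small sketch: the form data and the attributes extracted from the picture are combined into a prompt for the text generator, and the finished text is translated into a sequence of key presses for the typewriter. This is only an illustration under stated assumptions, not the installation's code. The class and function names, the prompt wording and the 33-key layout are hypothetical, and the calls to the image recognition and GPT-2 models are left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Assumed layout: one solenoid per key, 26 letters plus 7 other keys = 33.
KEYS = "abcdefghijklmnopqrstuvwxyz .,'?!-"


@dataclass
class LetterRequest:
    """Form data plus attributes extracted from the picture (hypothetical names)."""
    sender: str
    recipient: str
    relationship: str
    emotions: list[str] = field(default_factory=list)  # e.g. from facial expression detection
    context: list[str] = field(default_factory=list)   # e.g. from landscape or landmark recognition


def build_prompt(req: LetterRequest) -> str:
    """Assemble a text prompt that a language model could continue into a letter."""
    mood = ", ".join(req.emotions) or "unspecified"
    setting = ", ".join(req.context) or "unspecified"
    return (
        f"A letter from {req.sender} to {req.recipient} ({req.relationship}).\n"
        f"Mood: {mood}. Setting: {setting}.\n"
        f"Dear {req.recipient},\n"
    )


def to_typewriter_keys(text: str, keys: str = KEYS) -> list[int]:
    """Map each character to the index of its key; characters without a key are skipped."""
    lookup = {ch: i for i, ch in enumerate(keys)}
    return [lookup[ch] for ch in text.lower() if ch in lookup]


if __name__ == "__main__":
    request = LetterRequest("Ana", "Leo", "partner", ["joy"], ["beach"])
    print(build_prompt(request))
    print(to_typewriter_keys("Dear Leo,"))
```

In such a design, the list of key indices would be what the web back end sends to the microcontroller, which then fires the matching solenoid for each index in turn.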

Artist Statement

The goal of my artistic research is to experiment with and combine multiple models of machine learning, physical computing and the use of web technologies in order to create real-time interactive installations that involve multiple visitors simultaneously. These technologies, combined together, open new horizons in the field of Physical Interaction, creating a new class of intelligent and responsive on-site installations.

Biography

Ivan Iovine is an Italian interaction designer based in Frankfurt am Main, Germany. His works mainly focus on the intangible human side of machines. Through his multimedia installations, he tries to create possible future interactions and relationships between humans and machines. Iovine’s works have been exhibited at the European Maker Faire in Rome, at JSNation Conference in Amsterdam, at the Media Art Festival LAB30 in Augsburg, and at Piksel Festival in Bergen.

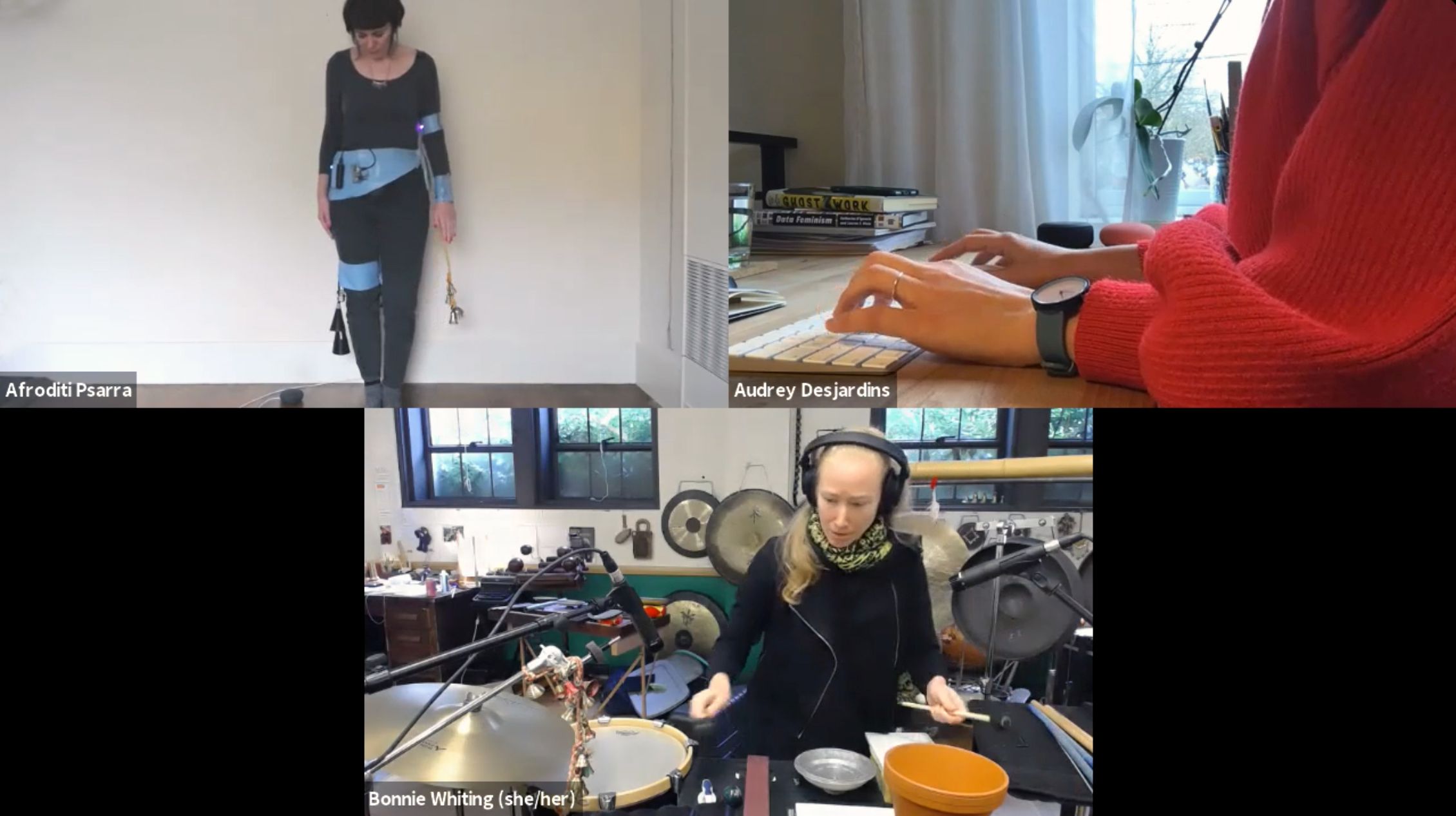

Voices and Voids

Afroditi Psarra, Audrey Desjardins & Bonnie Whiting

2019-20

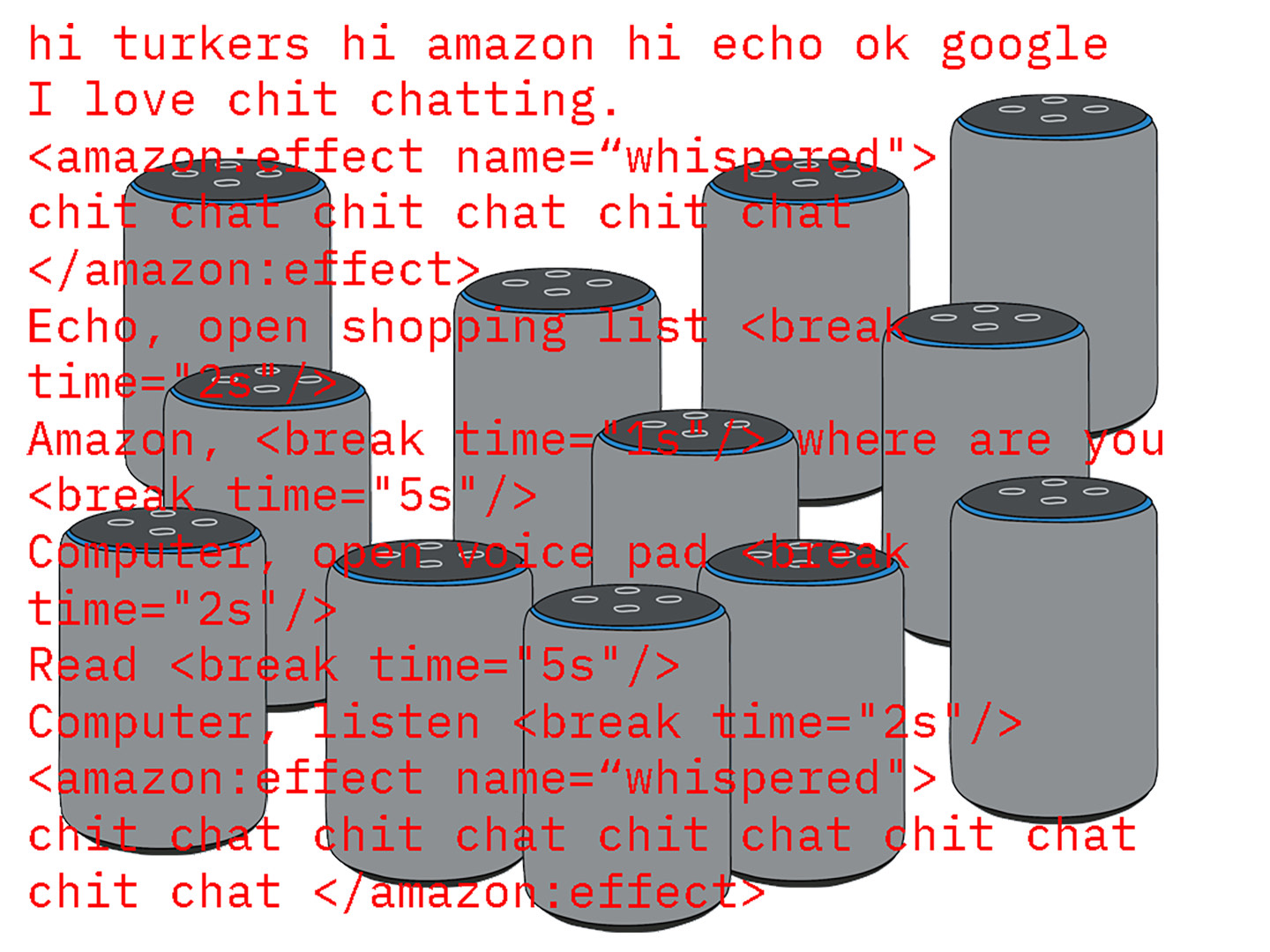

Voices and Voids is an interdisciplinary artistic research project, between interaction design, digital art and percussion, that utilizes voice assistant interaction data as expressive material for the creation of performative artifacts and embodied experiments. Responding to current concerns about the ubiquity of voice assistants, Voices and Voids focuses on building a series of performative artifacts that aim to challenge AI and ML technologies, and to examine automation through the prism of the “ghost work” that constantly supports these systems. By allowing AI agents to listen to our most private conversations, we become receptive to this mediated care, while forgetting or ignoring how much these automated interactions have been pre-scripted. While these interactions cultivate a sense of familiarization with the non-human, they also corroborate the impact of Late Capitalism and the Anthropocene. Within these contradictions we see an opportunity to reclaim, examine, and ultimately transcode this data through an interdisciplinary performance project, by developing embodied experiments using a combination of design, data-driven art, cyber crafts, found-object and traditional percussion instruments, spoken word, and movement. Initially conceived as a live performance and installation event, our changed environment during the COVID-19 pandemic inspired us to pivot to the medium of net art.

Artist Statement

Afroditi Psarra conducts transdisciplinary works that explore data-driven processes and technology as a gendered and embodied practice. Her work manifests through tactile artifacts produced by data physicalization techniques, wearable and haptic interfaces, sound performances and field recordings, as well as interdisciplinary collaborations.

As an interaction design researcher, Audrey Desjardins uses design as a practice for investigating and imagining alternatives to the ways humans currently live with everyday objects. Her work questions and considers familiar encounters between humans and things, particularly data and technologies in the context of the home.

Bonnie Whiting performs new experimental music, seeking out projects that involve the speaking percussionist, improvisation, and non-traditional notation. Her work focuses on the integration of everyday objects and the voice into the percussive landscape, amplifying and transforming the familiar through a lens of performance.

Biography

Afroditi Psarra is a media artist and assistant professor of Digital Arts and Experimental Media at the University of Washington. Her research focuses on the body as an interface, and the revitalization of tradition as a methodology of hacking technical objects. Her work has been presented at international media arts festivals such as Ars Electronica, Transmediale, CTM, ISEA, Eyeo, and WRO Biennale, among others, and published at conferences like SIGGRAPH, ISWC (International Symposium on Wearable Computers) and EVA (Electronic Visualization and the Arts).

Audrey Desjardins is a design researcher and an assistant professor in Interaction Design at the University of Washington. Her work has been supported by the National Science Foundation, the Mellon Foundation, and Mozilla, and has been presented at academic conferences in the fields of human-computer interaction and design, such as ACM CHI, ACM Designing Interactive Systems, Research Through Design, and ISEA.

Percussionist Bonnie Whiting’s work is grounded in historical experimental music for percussion and new, commissioned pieces for speaking percussionist. Her projects probe the intersection of music and language, exploring how percussion instruments can stand in for the human voice and function as an extension of the body. She is chair of Percussion Studies and assistant professor of Music at the University of Washington.

DïaloG

Models for Environmental Literacy

Gradation Descent

The Zombie Formalist

Theodore—A Sentimental Writing Machine

Reflective Geometries

Hack (Comedy)

Mitochondrial Echoes: Computational Poetics

Current

The (Cinematic) Synthetic Cameraman

Voices and Voids

In the Time of Clouds

The Mexican AI Artisan

POSTcard Landscapes from Lanzarote I & II

Bull, Ghost, Snake, God [牛鬼蛇神]

Mimicry

Going Viral

Heat, Grids Nos. 4 & 5

Actroid Series II

Chikyuchi

1000 Synsets (Vinyl Edition)

bug

Microbial Emancipation

Ghost in the Cell—Synthetic Heartbeats

Landscape Forms

Big Dada: Public Faces

Perihelion